The output from the code below is:

pytorch answer = [1.14435797]

pytorch takes 12.410856008529663 s

my answer = [1.14435797]

my imlementation takes 1.566734790802002 s

So why is the PyTorch forward pass about 8x slower than NumPy here?

    import IPython as ipy  # imported in the original post, not used below
    import numpy as np
    import torch
    import time


    class Net(torch.nn.Module):
        def __init__(self, input_size, layer_width):
            super(Net, self).__init__()
            self.input_size = input_size
            output_size = 1
            self.fc1 = torch.nn.Linear(input_size, layer_width)
            self.fc2 = torch.nn.Linear(layer_width, layer_width)
            self.fc3 = torch.nn.Linear(layer_width, output_size)

        def forward(self, x):
            x = torch.nn.functional.relu(self.fc1(x))
            x = torch.nn.functional.relu(self.fc2(x))
            x = torch.exp(self.fc3(x))
            return x

        def get_input_size(self):
            return self.input_size


    def main():
        torch.set_default_dtype(torch.float64)
        torch.set_grad_enabled(False)

        input_size = 20
        layer_width = 20
        net = Net(input_size, layer_width)
        N_evals = 100000

        # One PyTorch evaluation to get a reference answer.
        pytorch_x = torch.ones(input_size)
        pytorch_answer = net(pytorch_x).detach().numpy()
        print("pytorch answer = " + str(pytorch_answer))

        # Time N_evals single-sample forward passes in PyTorch.
        t0 = time.time()
        pytorch_x = torch.ones(input_size)
        for i in range(N_evals):
            pytorch_answer = net(pytorch_x)
        t1 = time.time()
        print("pytorch takes " + str(t1 - t0) + " s")

        # Pull the weights and biases out as NumPy arrays.
        x = pytorch_x.detach().numpy()
        w1 = net.fc1.weight.detach().numpy()
        w2 = net.fc2.weight.detach().numpy()
        w3 = net.fc3.weight.detach().numpy()
        b1 = net.fc1.bias.detach().numpy()
        b2 = net.fc2.bias.detach().numpy()
        b3 = net.fc3.bias.detach().numpy()

        # Same network in NumPy; y * (y > 0) is the ReLU.
        y = np.matmul(w1, x) + b1
        y = y * (y > 0)
        y = np.matmul(w2, y) + b2
        y = y * (y > 0)
        my_answer = np.exp(np.matmul(w3, y) + b3)
        print("my answer = " + str(my_answer))

        # Time N_evals single-sample forward passes in NumPy.
        t0 = time.time()
        x = np.ones(input_size)
        for i in range(N_evals):
            y = np.matmul(w1, x) + b1
            y = y * (y > 0)
            y = np.matmul(w2, y) + b2
            y = y * (y > 0)
            my_answer = np.exp(np.matmul(w3, y) + b3)
        t1 = time.time()
        print("my imlementation takes " + str(t1 - t0) + " s")


    if __name__ == "__main__":
        main()
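
To put the two timings in per-call terms, here is a quick back-of-the-envelope calculation. It uses only the numbers printed in the output above and the `N_evals = 100000` from the script; the variable names are just for illustration.

```python
# Timings copied from the output quoted in the post.
N_EVALS = 100_000
pytorch_total_s = 12.410856008529663
numpy_total_s = 1.566734790802002

# Convert total wall time to microseconds per forward pass.
pytorch_per_call_us = pytorch_total_s / N_EVALS * 1e6
numpy_per_call_us = numpy_total_s / N_EVALS * 1e6
ratio = pytorch_total_s / numpy_total_s

print(f"PyTorch: {pytorch_per_call_us:.1f} us per forward pass")  # 124.1
print(f"NumPy:   {numpy_per_call_us:.1f} us per forward pass")    # 15.7
print(f"ratio:   {ratio:.2f}x")                                   # 7.92
```

So the gap is roughly 124 µs versus 16 µs per evaluation of a 20-wide network, i.e. on the order of 100 µs per call.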

Oli, May 17, 2019, 5:20am

I don’t know, but it would be fun to see which part takes the time. Can you run a profiler such as snakeviz on it?
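
For anyone who wants to try this, a minimal sketch using the standard-library `cProfile` and `pstats` modules might look like the following. The `work` function is a hypothetical stand-in for whatever loop is being measured, and the file name `profile.prof` is an arbitrary choice.

```python
import cProfile
import io
import pstats


def work(n):
    # Hypothetical stand-in for the loop being measured.
    total = 0
    for i in range(n):
        total += i * i
    return total


profiler = cProfile.Profile()
profiler.enable()
result = work(100_000)
profiler.disable()

# Collect the five most expensive entries, sorted by cumulative time.
stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream).sort_stats("cumulative")
stats.print_stats(5)
report = stream.getvalue()
print(report)

# Optionally save the raw stats so a viewer can load them:
# stats.dump_stats("profile.prof")
```

A saved stats file can then be opened with `snakeviz profile.prof`. Keep in mind that cumulative time includes the time spent in callees, so the entries for nested calls overlap rather than sum to the total.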

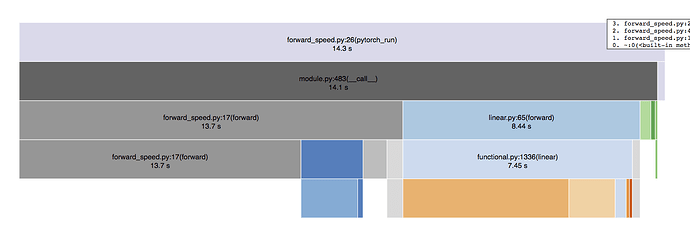

I ran the profiler as you suggested. First I made the code easier to profile by splitting the PyTorch run and my NumPy run into separate functions. See below.

I'm not sure what exactly you expected. Nothing jumps out at me, other than that everything looks slow. The times 13.7 and 8.44 don’t add up to 14.1 s, and I'm not sure what to make of that.

    import IPython as ipy  # imported in the original post, not used below
    import numpy as np
    import torch
    import time


    class Net(torch.nn.Module):
        def __init__(self, input_size, layer_width):
            super(Net, self).__init__()
            self.input_size = input_size
            output_size = 1
            self.fc1 = torch.nn.Linear(input_size, layer_width)
            self.fc2 = torch.nn.Linear(layer_width, layer_width)
            self.fc3 = torch.nn.Linear(layer_width, output_size)

        def forward(self, x):
            x = torch.nn.functional.relu(self.fc1(x))
            x = torch.nn.functional.relu(self.fc2(x))
            x = torch.exp(self.fc3(x))
            return x

        def get_input_size(self):
            return self.input_size


    def pytorch_run(net, input_size, N_evals):
        # Time N_evals single-sample forward passes in PyTorch.
        t0 = time.time()
        pytorch_x = torch.ones(input_size)
        for i in range(N_evals):
            pytorch_answer = net(pytorch_x)
        t1 = time.time()
        return t1 - t0


    def my_run(w1, w2, w3, b1, b2, b3, input_size, N_evals):
        # Time N_evals single-sample forward passes in NumPy.
        t0 = time.time()
        x = np.ones(input_size)
        for i in range(N_evals):
            y = np.matmul(w1, x) + b1
            y = y * (y > 0)  # ReLU
            y = np.matmul(w2, y) + b2
            y = y * (y > 0)  # ReLU
            my_answer = np.exp(np.matmul(w3, y) + b3)
        t1 = time.time()
        return t1 - t0


    def main():
        torch.set_default_dtype(torch.float32)
        torch.set_grad_enabled(False)

        input_size = 20
        layer_width = 20
        net = Net(input_size, layer_width)
        N_evals = 100000

        # One PyTorch evaluation to get a reference answer.
        pytorch_x = torch.ones(input_size)
        pytorch_answer = net(pytorch_x).detach().numpy()
        print("pytorch answer = " + str(pytorch_answer))

        pytorch_time = pytorch_run(net, input_size, N_evals)
        print("pytorch takes " + str(pytorch_time) + " s")

        # Pull the weights and biases out as NumPy arrays.
        x = pytorch_x.detach().numpy()
        w1 = net.fc1.weight.detach().numpy()
        w2 = net.fc2.weight.detach().numpy()
        w3 = net.fc3.weight.detach().numpy()
        b1 = net.fc1.bias.detach().numpy()
        b2 = net.fc2.bias.detach().numpy()
        b3 = net.fc3.bias.detach().numpy()

        # Same network in NumPy, evaluated once as a check.
        x = np.ones(input_size)
        y = np.matmul(w1, x) + b1
        y = y * (y > 0)
        y = np.matmul(w2, y) + b2
        y = y * (y > 0)
        my_answer = np.exp(np.matmul(w3, y) + b3)
        print("my answer = " + str(my_answer))

        my_time = my_run(w1, w2, w3, b1, b2, b3, input_size, N_evals)
        print("my imlementation takes " + str(my_time) + " s")


    if __name__ == "__main__":
        main()
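
One detail that may be worth checking in this version of the script: it sets PyTorch's default dtype to float32, while `np.ones(input_size)` creates a float64 array, so the NumPy path may be running in mixed precision. Below is a minimal sketch of how NumPy promotes dtypes in that situation. The arrays `w` and `b` are random stand-ins, not the network's actual weights.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-ins for a float32 weight matrix and bias (assumed shapes: 20x20 and 20).
w = rng.standard_normal((20, 20)).astype(np.float32)
b = rng.standard_normal(20).astype(np.float32)

x64 = np.ones(20)                    # np.ones defaults to float64
x32 = np.ones(20, dtype=np.float32)  # matches the weights

y_mixed = np.matmul(w, x64) + b  # float32 weights with a float64 input
y_same = np.matmul(w, x32) + b   # everything in float32

print(y_mixed.dtype)  # float64
print(y_same.dtype)   # float32
```

Whether this affects the timings in this thread was not measured here. Passing `dtype=np.float32` to `np.ones` would at least keep both sides of the comparison in the same precision.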

You might see some overhead for tiny workloads in PyTorch. Setting input_size and layer_width to 1000 gives:

pytorch answer = [1.0642374]

pytorch takes 16.20141328498721 s

my answer = [1.06423738]

my imlementation takes 150.2599041541107 s

I had to kill the run after a few minutes using 2000 for both variables, as numpy took too long.
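
If a fixed per-call cost dominates for tiny layers, one common way to amortize it is to evaluate many inputs in a single call instead of looping over them one at a time. The sketch below shows the idea in NumPy with small random stand-in weights (hypothetical values, not the network from this thread) and checks that the batched result matches the per-sample loop.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, width, n_samples = 20, 20, 1000

# Small random stand-in parameters (scaled down so exp stays well behaved).
w1, b1 = 0.1 * rng.standard_normal((width, n_in)), 0.1 * rng.standard_normal(width)
w2, b2 = 0.1 * rng.standard_normal((width, width)), 0.1 * rng.standard_normal(width)
w3, b3 = 0.1 * rng.standard_normal((1, width)), 0.1 * rng.standard_normal(1)


def forward_one(x):
    # One input vector of shape (n_in,), as in the loops above.
    y = np.maximum(w1 @ x + b1, 0.0)
    y = np.maximum(w2 @ y + b2, 0.0)
    return np.exp(w3 @ y + b3)


def forward_batch(X):
    # All inputs at once: X has shape (n_samples, n_in).
    Y = np.maximum(X @ w1.T + b1, 0.0)
    Y = np.maximum(Y @ w2.T + b2, 0.0)
    return np.exp(Y @ w3.T + b3)


X = rng.standard_normal((n_samples, n_in))
looped = np.stack([forward_one(x) for x in X])  # n_samples separate calls
batched = forward_batch(X)                      # one call

print(batched.shape)
print(np.allclose(looped, batched))
```

The same idea carries over to PyTorch, since `torch.nn.Linear` accepts inputs with a leading batch dimension. How much it helps depends on the workload and was not measured here.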