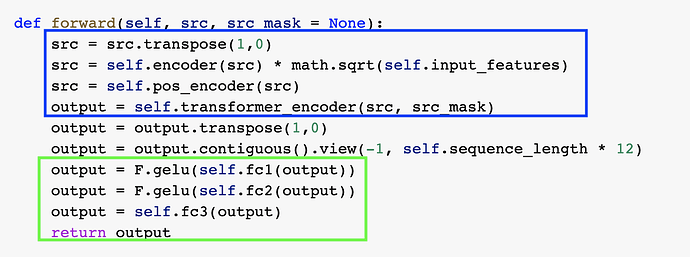

Everything in the second half of the network (green box) learns sensible weights, but everything in the first half (blue box) learns what look like random weights. Backprop does compute gradients for the first half, and the optimizer does update its weights, yet those updates do not improve training. Only the second half is learning correctly.

I suspect the problem is that the transpose/view operations somehow corrupt the `grad_fn` chain. What exactly is the issue, and how can it be fixed?
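One way to narrow this down is to check the `grad_fn` hypothesis in isolation. The sketch below does not use the actual model (it isn't shown). It assumes, purely for illustration, an input laid out as `(batch, seq, features)` where the intent is to swap the last two axes. It compares `transpose` with a `view` to the same target shape and confirms that autograd records a `grad_fn` for both and sends gradients back through `transpose`. If both checks pass, autograd is not the culprit. A `view` that regroups elements instead of swapping axes will still train, but on scrambled inputs, and that can look like "random" weights.

```python
import torch

# Hypothetical input: 2 sequences, 3 time steps, 4 features,
# laid out as (batch, seq, features). Not taken from the original model.
x = torch.arange(24, dtype=torch.float32).reshape(2, 3, 4).requires_grad_()

# Intended: swap the seq and feature axes -> (batch, features, seq).
swapped = x.transpose(1, 2)

# Assumed mistake for illustration: view to the same target shape.
# The shape matches, but elements are regrouped in memory order
# rather than moved between axes.
viewed = x.view(2, 4, 3)

print(swapped.shape == viewed.shape)   # True: identical shapes
print(torch.equal(swapped, viewed))    # False: different contents

# Both results carry a grad_fn, so autograd tracks either operation.
print(swapped.grad_fn is not None, viewed.grad_fn is not None)

# Gradients flow back through transpose to every input element.
swapped.sum().backward()
print(torch.all(x.grad == 1).item())   # True
```

If the model needs the axes swapped, `transpose`/`permute` (followed by `.contiguous()` if a later `view` requires it) moves the data correctly. `view`/`reshape` alone only reinterprets the existing memory order.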