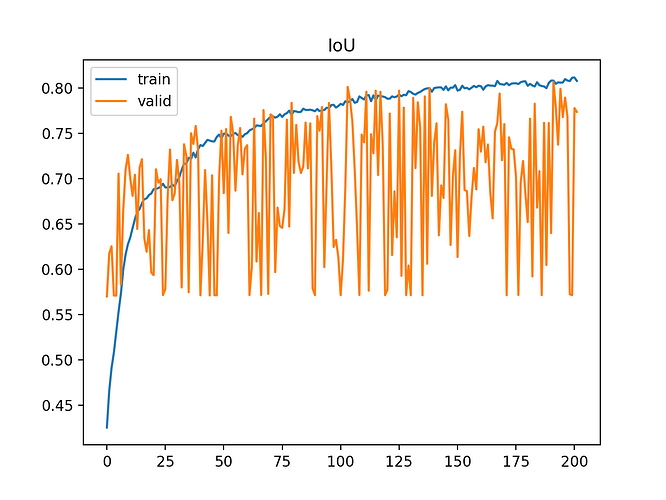

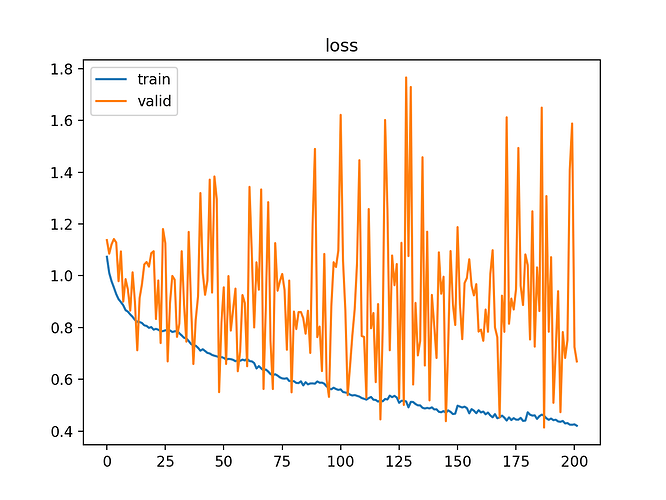

After training a network for many epochs, the loss and IoU keep changing over time, jumping up and down. Do you have any idea how to fix it?

How many samples do you have in total (train and valid separately)? What is your batch size (for both train and valid if they are different)?

It looks like some batches are easier than others. That happens more easily with small batch sizes and with datasets where the difficulty varies a lot from sample to sample.

You could try averaging those values a bit to see whether there’s an increasing (IoU) or decreasing (loss) trend, but at first sight it looks like it’s not learning much…
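For the averaging, a plain moving average over the logged values is probably enough to see whether there is a trend under the noise. Here is a minimal sketch, assuming you have the per-epoch (or per-batch) losses in a Python list. The name `loss_history`, the numbers in it, and the window size are made up for illustration; they are not from the plots above.

```python
import numpy as np

def moving_average(values, window=5):
    """Mean over a sliding window.

    Returns len(values) - window + 1 points, so the curve is a bit
    shorter than the input (no padding at the edges).
    """
    values = np.asarray(values, dtype=float)
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    kernel = np.ones(window) / window
    return np.convolve(values, kernel, mode="valid")

# Hypothetical noisy loss values, one per epoch.
loss_history = [0.9, 0.4, 0.8, 0.35, 0.7, 0.3, 0.6, 0.25]
smoothed = moving_average(loss_history, window=4)

# If the first smoothed value is clearly above the last one, the loss is
# trending down despite the jumps; for IoU you would look for the opposite.
print(smoothed)
```

A larger window hides more of the jumps but also delays any real change in the curve, so it is worth trying a couple of sizes. The same function works for the IoU values.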