Hi everyone,

Completely new to DL/PyTorch here, so feel free to treat me like a noob. I have been training a very simple CNN on an image dataset of three animal categories (cats, dogs and pandas). The architecture is as follows:

```python
from torch import nn

class ShallowNetTorch(nn.Module):

    def __init__(self, width, height, depth, classes):
        super(ShallowNetTorch, self).__init__()
        self.width = width
        self.height = height
        # first and only conv
        self.conv1 = nn.Conv2d(in_channels=depth, out_channels=32,
                               kernel_size=3, stride=1, padding=1)
        # ReLU activation
        self.activation = nn.ReLU()
        # linear layer (32*32*32 -> classes)
        self.fc1 = nn.Linear(self.width * self.height * 32, classes)

    def forward(self, x):
        # add sequence of convolutions
        x = self.activation(self.conv1(x))
        # flatten the activations
        x = x.view(-1, self.width * self.height * 32)
        # pass the last activations through to the 3 class outputs
        x = self.fc1(x)
        return x
```
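A quick way to sanity-check the layer sizes is to push a dummy batch through the model and count its parameters. This sketch assumes 32x32 RGB inputs (`width=height=32`, `depth=3`), which is what the `32*32*32` comment suggests; the batch size of 4 and the `count_params` helper are arbitrary choices for illustration. The class is repeated so the snippet runs on its own.

```python
import torch
from torch import nn


class ShallowNetTorch(nn.Module):
    def __init__(self, width, height, depth, classes):
        super().__init__()
        self.width = width
        self.height = height
        self.conv1 = nn.Conv2d(in_channels=depth, out_channels=32,
                               kernel_size=3, stride=1, padding=1)
        self.activation = nn.ReLU()
        self.fc1 = nn.Linear(width * height * 32, classes)

    def forward(self, x):
        x = self.activation(self.conv1(x))
        x = x.view(-1, self.width * self.height * 32)  # flatten to (N, 32*H*W)
        return self.fc1(x)


def count_params(module):
    # total number of scalar weights and biases in the module
    return sum(p.numel() for p in module.parameters())


# Assumption: 32x32 RGB images and 3 classes (cats, dogs, pandas).
model = ShallowNetTorch(width=32, height=32, depth=3, classes=3)
dummy = torch.randn(4, 3, 32, 32)  # batch of 4 random "images"
logits = model(dummy)

conv_params = count_params(model.conv1)  # 32*3*3*3 weights + 32 biases
fc_params = count_params(model.fc1)      # 32768*3 weights + 3 biases
print(logits.shape)                      # torch.Size([4, 3])
print(conv_params, fc_params)            # 896 98307
```

Under these assumptions nearly all of the ~99k parameters sit in `fc1`, so the classifier head, not the single conv layer, holds most of the model's capacity to memorize the training set.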

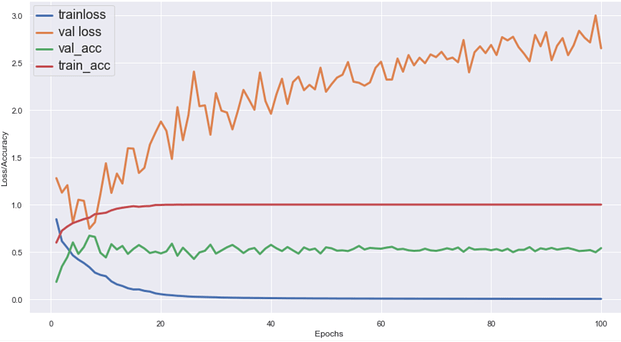

After training the model for 100 epochs, I am plotting the training/validation losses and accuracies. While my training loss decreases (training accuracy + validation accuracy increases) over the epochs as expected, the validation loss keeps on having an increasing trend over the 100 epochs. Please see the image below:

From the training and validation curves it's quite obvious that I am overfitting. What puzzles me is that the validation loss keeps on increasing, and I am not sure why this is happening. My expectation was that, since the validation accuracy is effectively settling at a value, the validation loss should also settle at higher epochs. Am I calculating the validation loss correctly? Here is a link to the Jupyter notebook where I trained the ShallowNet model and calculated all the losses and accuracies plotted in the image above: [LINK]
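One possible explanation, to be checked against the actual notebook: accuracy only looks at the argmax of the logits, while cross-entropy also penalizes how confident the model is on the samples it gets wrong. The toy example below uses made-up logits for 4 hypothetical validation samples, keeps the predicted classes fixed, and only scales the logits up (as an overfitting model that grows more confident might). Accuracy stays at 0.75 while the mean cross-entropy roughly doubles.

```python
import torch
import torch.nn.functional as F

# Hypothetical validation labels: cat=0, dog=1, panda=2.
targets = torch.tensor([0, 1, 2, 0])

# "Early epoch" logits: first 3 samples correct, the last one (a cat)
# is predicted as dog, but only mildly confidently.
early = torch.tensor([[2.0, 0.0, 0.0],
                      [0.0, 2.0, 0.0],
                      [0.0, 0.0, 2.0],
                      [0.0, 1.0, 0.0]])

# "Late epoch" logits: same argmax everywhere, just 5x more confident,
# including on the wrong prediction.
late = early * 5


def accuracy(logits, y):
    # fraction of samples whose argmax matches the label
    return (logits.argmax(dim=1) == y).float().mean().item()


acc_early, acc_late = accuracy(early, targets), accuracy(late, targets)
loss_early = F.cross_entropy(early, targets).item()  # mean over the 4 samples
loss_late = F.cross_entropy(late, targets).item()
print(acc_early, acc_late)  # 0.75 0.75
print(loss_early, loss_late)
```

The correct samples get slightly cheaper when the logits grow, but the single confident mistake becomes much more expensive and dominates the mean, so the loss can keep rising while the accuracy curve stays flat.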
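For comparison, here is a sketch of one common way to compute the validation loss in PyTorch. It is not a reconstruction of the notebook: switch the model to `eval()` mode, disable gradients, sum the per-sample losses and divide by the number of samples, so a smaller final batch is weighted correctly. The `evaluate` helper, the random toy data and the stand-in model are all hypothetical.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, device="cpu"):
    """Return (mean loss per sample, accuracy) over the whole loader."""
    criterion = nn.CrossEntropyLoss(reduction="sum")  # sum now, divide by N later
    model.eval()                 # e.g. disables dropout, uses BN running stats
    total_loss, correct, n = 0.0, 0, 0
    with torch.no_grad():        # no gradients needed for validation
        for images, labels in loader:
            images, labels = images.to(device), labels.to(device)
            logits = model(images)
            total_loss += criterion(logits, labels).item()
            correct += (logits.argmax(dim=1) == labels).sum().item()
            n += labels.size(0)
    model.train()                # switch back to training mode for the next epoch
    return total_loss / n, correct / n


# Toy usage: random data and a tiny stand-in model (both hypothetical).
torch.manual_seed(0)
xs = torch.randn(10, 3, 32, 32)
ys = torch.randint(0, 3, (10,))
loader = DataLoader(TensorDataset(xs, ys), batch_size=4)  # last batch has 2 samples
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 3))
val_loss, val_acc = evaluate(model, loader)
print(val_loss, val_acc)
```

Averaging per-batch mean losses, or dividing a summed loss by the number of batches instead of the number of samples, would skew the curve slightly; with `reduction="sum"` the result matches the loss computed over the full validation set at once.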