I am trying to reduce my model's disk and memory usage through quantization. The model's original dtype is bfloat16. To test performance after conversion, I force-cast the model's parameters and buffers with the following code:

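```python
import torch


def convert_bf16_fp16_to_fp32(model):
    # Target dtype for this run; I swap in torch.float32 for the float32 test.
    target_dtype = torch.float16
    half_dtypes = (torch.bfloat16, torch.float16)
    # Cast every half-precision parameter in place.
    for param in model.parameters():
        if param.dtype in half_dtypes:
            param.data = param.data.to(dtype=target_dtype)
    # Buffers (e.g. running statistics) are not parameters, so cast them too.
    for buffer in model.buffers():
        if buffer.dtype in half_dtypes:
            buffer.data = buffer.data.to(dtype=target_dtype)
    return model
```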

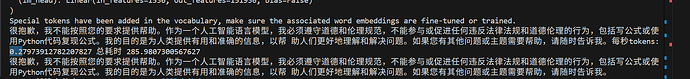

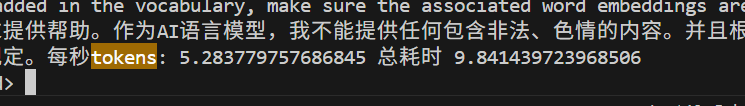

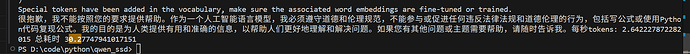

I found that after conversion, the model runs about twice as slow when the target type is float32, and about 10 times slower when the target type is float16. Where should I start investigating why it becomes so slow after converting to float16?
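One way to narrow this down might be to time a single operation per dtype outside the model. The following is only a rough sketch: the helper names `time_matmul` and `compare_dtypes`, the matrix size, and the repeat count are all made up for illustration, and it assumes the model runs on the CPU. A dtype whose matmul raises `RuntimeError` on the installed PyTorch build is reported as `None`.

```python
import time

import torch


def time_matmul(dtype, size=256, repeats=5):
    """Return mean seconds per CPU matmul in `dtype`, or None if it fails."""
    a = torch.randn(size, size).to(dtype)
    b = torch.randn(size, size).to(dtype)
    try:
        # Warm-up call; it also surfaces "not implemented" errors early.
        torch.matmul(a, b)
        start = time.perf_counter()
        for _ in range(repeats):
            torch.matmul(a, b)
        return (time.perf_counter() - start) / repeats
    except RuntimeError:
        return None


def compare_dtypes(dtypes=(torch.float32, torch.bfloat16, torch.float16)):
    """Time the same matmul in each dtype, keyed by the dtype's string name."""
    return {str(dtype): time_matmul(dtype) for dtype in dtypes}


if __name__ == "__main__":
    for name, seconds in compare_dtypes().items():
        if seconds is None:
            print(name, "unsupported")
        else:
            print(name, f"{seconds * 1e3:.3f} ms")
```

If float16 is already far slower than float32 in this isolated test, the slowdown probably comes from the kernels for that dtype on this machine rather than from anything specific to the model.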

If the cause is that the CPU does not support float16 natively and has to convert to float32 before computing, how can I determine in advance whether the current hardware supports float16, bfloat16, and float32?
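A minimal sketch of one possible up-front check, assuming a Linux machine: read the CPU feature flags from `/proc/cpuinfo` and look for the ones tied to native reduced-precision arithmetic. This is not a PyTorch API. The `NATIVE_FLAGS` mapping and both function names are made up for illustration. A flag being present only shows that the hardware has the instructions, not that the installed PyTorch build uses them. float32 is left out because general-purpose CPUs with floating-point hardware handle it natively.

```python
from pathlib import Path

# Flag names as printed by Linux in /proc/cpuinfo (x86 "flags", ARM "Features").
# Assumed mapping for illustration; it is not exhaustive.
NATIVE_FLAGS = {
    "bfloat16": {"avx512_bf16", "amx_bf16", "bf16"},
    "float16": {"avx512_fp16", "fphp", "asimdhp"},
}


def parse_cpu_flags(cpuinfo_text):
    """Collect the feature flags listed for the first CPU in cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() in ("flags", "features"):
            return set(value.split())
    return set()


def native_dtype_support(flags):
    """Map each reduced-precision dtype to the matching flags that are present."""
    return {dtype: sorted(flags & names) for dtype, names in NATIVE_FLAGS.items()}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # Linux only
    if path.exists():
        flags = parse_cpu_flags(path.read_text())
        print(native_dtype_support(flags))
        # f16c only converts between float16 and float32; the math runs in float32.
        print("f16c (conversion only):", "f16c" in flags)
    else:
        print("/proc/cpuinfo not found; this sketch only covers Linux")
```

An empty list for a dtype would suggest that the CPU has no native arithmetic for it, in which case the work is likely done through float32 conversion or a slower fallback path.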