Hello everyone,

While learning PyTorch quantization, I implemented the same convolution in two ways, starting from quantized input and weight tensors and the scale and zero_point of the input, weight, and output tensors:

The first approach dequantizes the quantized input and weight tensors to floating point, runs a 2D convolution with the floating-point nn.Conv2d module, and then quantizes the result back to integers.

The second approach runs the quantized convolution directly with torch.ao.nn.quantized.Conv2d.
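The dequantize and requantize steps in the first approach follow the usual per-tensor affine mapping, x = scale * (q - zero_point). The sketch below is plain Python with no PyTorch dependency; the helper names `dequantize` and `quantize` are made up for illustration, and the rounding is assumed to match round-half-to-even.

```python
def dequantize(q, scale, zero_point):
    """Map an integer code to its real value: x = scale * (q - zero_point)."""
    return scale * (q - zero_point)


def quantize(x, scale, zero_point, qmin=0, qmax=255):
    """Map a real value to an integer code, clamped to [qmin, qmax]."""
    q = round(x / scale) + zero_point  # Python's round() is half-to-even
    return max(qmin, min(qmax, q))


# Input parameters from the post; 200 is one raw quint8 input code.
input_scale, input_zero_point = 0.012728, 33

x_real = dequantize(200, input_scale, input_zero_point)
x_code = quantize(x_real, input_scale, input_zero_point)
print(x_real, x_code)
```

If the round trip returns the original code, then quantizing the dequantized input again should hand the quantized convolution the same integers that the float path started from.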

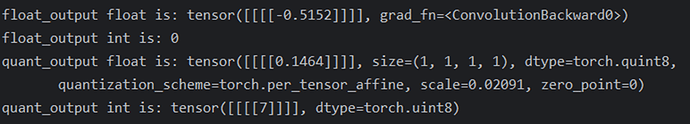

After comparing the results from both methods, I noticed a considerable discrepancy. What could be the reason for this?
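One way to narrow down which of the two results is off is to compute the single output value by hand with exact integer arithmetic. The sketch below does that in plain Python for the 5x5 input and 5x5 kernel used in this post (no padding, so there is exactly one output element). The function name `reference_conv_single_output` is hypothetical, and the code assumes per-tensor affine quantization with a zero bias.

```python
# Quantization parameters and tensors copied from the post.
input_scale, input_zero_point = 0.012728, 33
weight_scale, weight_zero_point = 0.00292, -11
output_scale, output_zero_point = 0.02091, 0

q_input = [[200] * 5 for _ in range(5)]
q_weight = [
    [9, -32, 17, 3, -67],
    [-97, -41, 47, 84, -56],
    [-128, -125, 11, 63, 54],
    [-15, -58, -31, 50, 48],
    [23, -3, 64, -87, -91],
]


def reference_conv_single_output(q_x, q_w):
    """Exact integer reference for one output element (hypothetical helper)."""
    acc = 0
    # Conv2d is a cross-correlation, so elements pair up without a kernel flip.
    for row_x, row_w in zip(q_x, q_w):
        for x, w in zip(row_x, row_w):
            acc += (x - input_zero_point) * (w - weight_zero_point)
    # Rescale the integer accumulator to a real value.
    real_value = input_scale * weight_scale * acc
    # Requantize to quint8: round, add the zero point, clamp to [0, 255].
    q_out = round(real_value / output_scale) + output_zero_point
    q_out = max(0, min(255, q_out))
    return acc, real_value, q_out


acc, real_value, q_out = reference_conv_single_output(q_input, q_weight)
print("accumulator:", acc)
print("real value:", real_value)
print("quint8 output:", q_out)
```

With these numbers the accumulator is -13861, which corresponds to a real value of about -0.515 and clamps to 0 in quint8. Whichever path disagrees with this reference is the one worth investigating.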

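import torch
import torch.nn as nn
import torch.ao.nn.quantized  # makes torch.ao.nn.quantized.Conv2d available

# Quantization parameters (example values; adjust as needed)
input_scale = 0.012728
input_zero_point = 33
weight_scale = 0.00292
weight_zero_point = -11
activation_scale = 0.02091  # alternatives: 0.02086, 0.020389
activation_zero_point = 0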

# 5x5 input tensor holding raw quint8 codes, stored as floats
input_tensor = torch.tensor(
    [[[[200., 200., 200., 200., 200.],
       [200., 200., 200., 200., 200.],
       [200., 200., 200., 200., 200.],
       [200., 200., 200., 200., 200.],
       [200., 200., 200., 200., 200.]]]],
    dtype=torch.float32,
)

# 5x5 weight matrix holding raw qint8 codes
weight_matrix = torch.tensor(
    [[[[   9,  -32,   17,    3,  -67],
       [ -97,  -41,   47,   84,  -56],
       [-128, -125,   11,   63,   54],
       [ -15,  -58,  -31,   50,   48],
       [  23,   -3,   64,  -87,  -91]]]],
    dtype=torch.int8,
)

# One zero bias for the single output channel
bias = torch.zeros(1, dtype=torch.float32)

print(input_tensor)
print(weight_matrix)

# =========== Float reference path ===========
# Create the float model
float_model = nn.Conv2d(
    in_channels=1,
    out_channels=1,
    kernel_size=5,
    stride=1,
    padding=0,
    bias=True,
)

# Dequantize the weights: w_float = scale * (w_q - zero_point).
# Cast to float first so the subtraction cannot overflow int8.
float_weight_matrix = weight_scale * (weight_matrix.float() - weight_zero_point)

# Set the weight and bias manually
with torch.no_grad():
    float_model.weight = nn.Parameter(float_weight_matrix)
    float_model.bias = nn.Parameter(bias)

# Dequantize the input: x_float = scale * (x_q - zero_point)
float_input_tensor = input_scale * (input_tensor - input_zero_point)
print(float_model.weight)
print(float_input_tensor)

# Run the float convolution (no gradients needed), then quantize the result
with torch.no_grad():
    float_output = float_model(float_input_tensor)
q_output = torch.quantize_per_tensor(
    float_output,
    scale=activation_scale,
    zero_point=activation_zero_point,
    dtype=torch.quint8,
)
q_output_int = q_output.int_repr().squeeze().numpy()

# =========== Quantized path ===========
# Use the quantized Conv2d module directly
quant_conv = torch.ao.nn.quantized.Conv2d(1, 1, 5, bias=False)
quant_conv.scale = activation_scale
quant_conv.zero_point = activation_zero_point

# Quantize the weight tensor
quant_weight = torch.quantize_per_tensor(
    float_weight_matrix,
    scale=weight_scale,
    zero_point=weight_zero_point,
    dtype=torch.qint8,
)

# Set the quantized weight (no bias)
quant_conv.set_weight_bias(quant_weight, None)

# Quantize the input tensor
quant_input = torch.quantize_per_tensor(
    float_input_tensor,
    scale=input_scale,
    zero_point=input_zero_point,
    dtype=torch.quint8,
)

# weight is a method on the quantized module, so it has to be called
print("Actual quantized weight:", quant_conv.weight())
print("Integer representation:", quant_conv.weight().int_repr())

# Run the quantized convolution
quant_output = quant_conv(quant_input)

print("float_output float is:", float_output)
print("float_output int is:", q_output_int)
print("quant_output float is:", quant_output)
print("quant_output int is:", quant_output.int_repr())
print("compute done!")

The result is: