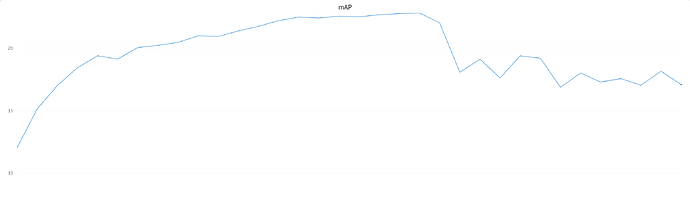

As shown in the learning curve above, MAP suddenly drops at a certain epoch.

I also found that the training loss gradually increases at this point, but I don't think the gradients are exploding, since I use the Adam optimizer with gradient clipping.
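For illustration, gradient clipping by global norm can be sketched as below in plain Python. This is an assumption about the kind of clipping meant here, not the actual training code; the function name `clip_by_global_norm` and the `max_norm` value are hypothetical. The sketch also returns the norm before clipping, which is the value worth logging around the epoch where the metric drops, because clipping only caps the norm that reaches the optimizer and can hide a growing raw norm.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradient values so their global L2 norm is at most max_norm.

    Returns (clipped_grads, pre_clip_norm). Hypothetical helper for illustration.
    """
    # Global L2 norm over all gradient values, measured before clipping.
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm > max_norm:
        # Shrink every value by the same factor so the direction is preserved.
        scale = max_norm / total_norm
        grads = [g * scale for g in grads]
    return grads, total_norm

# Toy gradient with norm 5.0, clipped to an assumed max_norm of 1.0.
clipped, raw_norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print("norm before clipping:", raw_norm)
print("clipped gradient:", clipped)
```

If the logged pre-clip norm rises sharply near the drop, the clipped updates may still be pointing in a poor direction even though their size is bounded.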

What causes this kind of situation? Could simple generalization tricks (e.g., increasing weight decay) be the solution?