Hi,

I wrote a custom CrossEntropy() method to drop the softmax step that is built into torch.nn.CrossEntropyLoss():

def CrossEntropy(self, output, target):
    '''
    input: softmaxed output (class probabilities)
    '''
    loss = nn.NLLLoss().to(self.device)
    return loss(torch.log(output), target)
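For reference, here is a minimal sketch of the identity the method above relies on, written in plain Python so it runs without PyTorch. The helper names (softmax, nll_from_probs, cross_entropy_from_logits) and the example logits are hypothetical, chosen only for illustration. It compares the two-step form, -log(softmax(z)[target]), with the fused form, logsumexp(z) - z[target], which is mathematically the same quantity computed along a different path.

```python
import math

def softmax(logits):
    """Softmax over a list of floats, shifted by the max for stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def nll_from_probs(probs, target):
    """Two-step form, like the custom CrossEntropy above: -log(p[target])."""
    return -math.log(probs[target])

def cross_entropy_from_logits(logits, target):
    """Fused form: logsumexp(logits) - logits[target]."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return lse - logits[target]

# Hypothetical logits for a single sample with three classes.
logits = [2.0, -1.0, 0.5]
target = 0

two_step = nll_from_probs(softmax(logits), target)
fused = cross_entropy_from_logits(logits, target)
print(two_step, fused)
```

The two values should agree to within floating-point rounding, but they are not guaranteed to be bit-identical, because the intermediate operations differ. Values printed to four decimals can therefore look the same even when the last bits differ.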

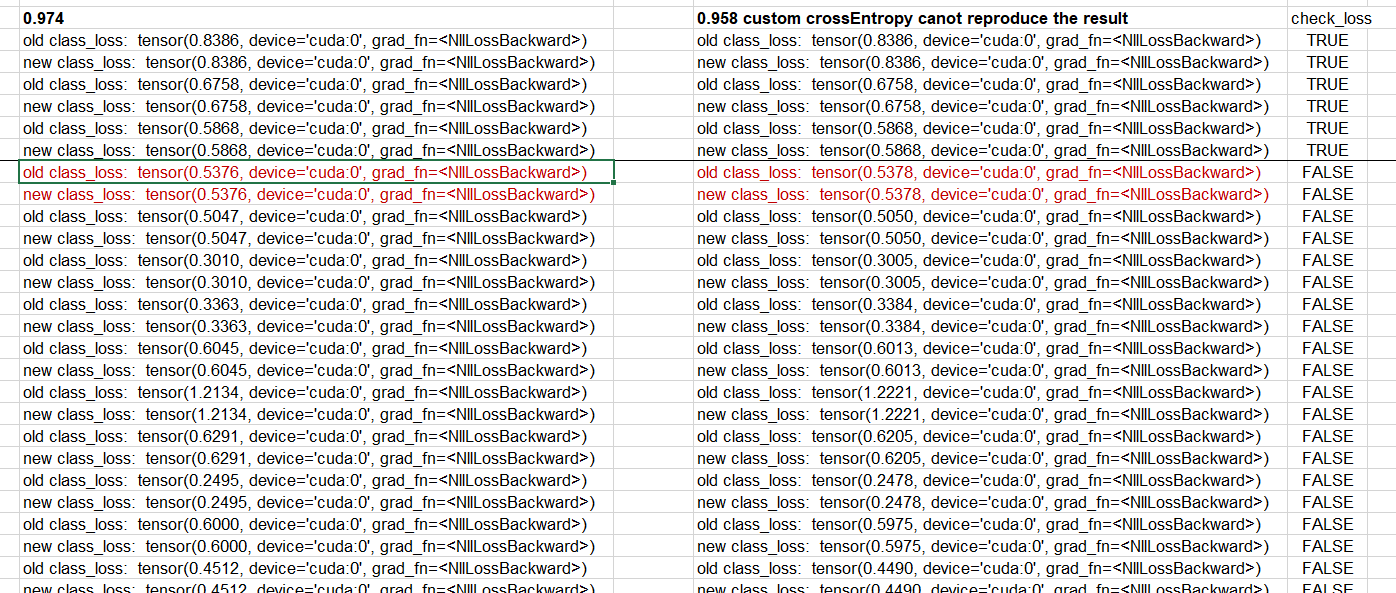

When I tested it during a full training run, it reproduced the result of torch.nn.CrossEntropyLoss():

(old class_loss: calculated by torch.nn.CrossEntropyLoss(); new class_loss: calculated by my custom CrossEntropy())

class_loss = self.class_criterion(model_outputs, labels) # self.class_criterion = torch.nn.CrossEntropyLoss()

print('old class_loss: ', self.class_criterion(model_outputs, labels))

print('new class_loss: ', self.CrossEntropy(self.softmax(model_outputs), labels))

old class_loss: tensor(0.8386, device='cuda:0', grad_fn=<NllLossBackward>)

new class_loss: tensor(0.8386, device='cuda:0', grad_fn=<NllLossBackward>)

old class_loss: tensor(0.6758, device='cuda:0', grad_fn=<NllLossBackward>)

new class_loss: tensor(0.6758, device='cuda:0', grad_fn=<NllLossBackward>)

old class_loss: tensor(0.5868, device='cuda:0', grad_fn=<NllLossBackward>)

new class_loss: tensor(0.5868, device='cuda:0', grad_fn=<NllLossBackward>)

old class_loss: tensor(0.5376, device='cuda:0', grad_fn=<NllLossBackward>)

new class_loss: tensor(0.5376, device='cuda:0', grad_fn=<NllLossBackward>)

....

Problem: When I use my own CrossEntropy() for the actual training loss in the same training procedure, the two printed class_loss values still match each other, but the overall loss of the run with my own CrossEntropy() (right column below) starts to differ from the run trained with torch.nn.CrossEntropyLoss() (left column below) from the 5th training step onward:

class_loss = self.CrossEntropy(self.softmax(model_outputs), labels)

print('old class_loss: ', self.class_criterion(model_outputs, labels))

print('new class_loss: ', self.CrossEntropy(self.softmax(model_outputs), labels))

Can anyone please tell me why this happens and how I can fix it, so that my own CrossEntropy() exactly reproduces the training results? Thanks a lot!