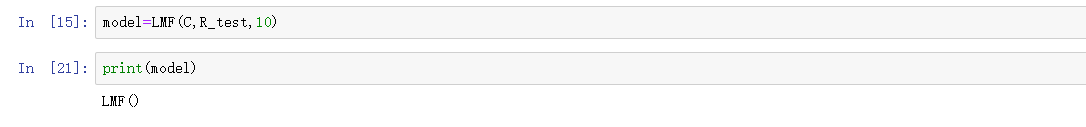

I am trying to build a logistic matrix factorization model. When I train it, I find that my model appears to be empty. I am new to PyTorch and I don't know what causes this, and it really confuses me. Can you give me some suggestions? Thank you!

This is my code:

    import torch
    from torch import nn
    from torch.nn import Parameter

    class LMF(nn.Module):
        def __init__(self, C, R_test, n_factor):
            '''
            :param C: confidence matrix of shape (n_user, n_item), used in the training loss
            :param R_test: test matrix of shape (n_user, n_item), used in MPR()
            :param n_factor: number of latent factors
            '''
            super(LMF, self).__init__()
            self.n_factor = n_factor
            self.C = torch.tensor(C, dtype=torch.double)
            self.R = torch.tensor(R_test, dtype=torch.double)
            self.n_user = C.shape[0]
            self.n_item = C.shape[1]
            # Latent factors and biases are the trainable parameters
            self.X = Parameter(torch.randn([self.n_user, self.n_factor], dtype=torch.double))
            self.Y = Parameter(torch.randn([self.n_item, self.n_factor], dtype=torch.double))
            self.user_biases = Parameter(torch.randn((self.n_user, 1), dtype=torch.double))
            self.item_biases = Parameter(torch.randn((self.n_item, 1), dtype=torch.double))

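        def forward(self):
            # Scores: X @ Y^T plus broadcast user and item biases
            A = torch.mm(self.X, self.Y.T)
            A = A + self.user_biases
            A = A + self.item_biases.T
            # softplus(A) equals log(1 + exp(A)) but does not overflow for large A
            loss = self.C * A - (1 + self.C) * torch.nn.functional.softplus(A)
            loss = loss.sum()
            # Return the negative log-likelihood so it can be minimized
            return -loss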

        def MPR(self):
            R_sum = torch.sum(self.R)
            # Note: these scores omit the bias terms used in forward()
            R_hat = torch.mm(self.X, self.Y.T)
            # Double argsort gives each item's rank per user (0 = highest score)
            R_hat_rank = torch.argsort(torch.argsort(-R_hat, dim=1))
            print(self.R.size())
            print(R_hat_rank.size())
            A = self.R * (R_hat_rank.double() / self.n_item)
            rank = torch.sum(A) / R_sum
            return rank.item()
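
One way to check whether the parameters are really missing is sketched below. It assumes that "empty" refers to what `print(model)` shows: the printed form of an `nn.Module` lists only child modules, so a module that holds nothing but bare `Parameter` tensors prints as an empty pair of parentheses even though its parameters are registered. `TinyLMF` is a hypothetical, stripped-down stand-in for the class above (no biases, made-up sizes and data), not the original model.

```python
import torch
from torch import nn
from torch.nn import Parameter


class TinyLMF(nn.Module):
    """Hypothetical stand-in for the LMF class: two factor matrices, no biases."""

    def __init__(self, n_user, n_item, n_factor):
        super().__init__()
        self.X = Parameter(torch.randn(n_user, n_factor, dtype=torch.double))
        self.Y = Parameter(torch.randn(n_item, n_factor, dtype=torch.double))

    def forward(self, C):
        A = self.X @ self.Y.T
        # softplus(A) equals log(1 + exp(A)), computed in a stable way
        return -(C * A - (1 + C) * torch.nn.functional.softplus(A)).sum()


torch.manual_seed(0)
model = TinyLMF(n_user=4, n_item=5, n_factor=3)

# The printed form lists only child modules, so it looks empty here
model_repr = repr(model)
print(model_repr)

# The parameters are registered all the same and are visible to an optimizer
param_shapes = {name: tuple(p.shape) for name, p in model.named_parameters()}
print(param_shapes)

# A few optimizer steps on made-up confidence values
C = torch.randint(0, 3, (4, 5)).double()
optimizer = torch.optim.Adam(model.parameters(), lr=0.05)
loss_before = model(C).item()
for _ in range(50):
    optimizer.zero_grad()
    loss = model(C)
    loss.backward()
    optimizer.step()
loss_after = model(C).item()
print(loss_before, loss_after)
```

If `named_parameters()` lists `X` and `Y` and the loss goes down, the model is not actually empty; only its printed summary is.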