Hello,

I am a beginner with PyTorch, and I’m working on a small project which consists in predicting some output. To do this I’m using a Neural Network that has a few layers with simple transforms.

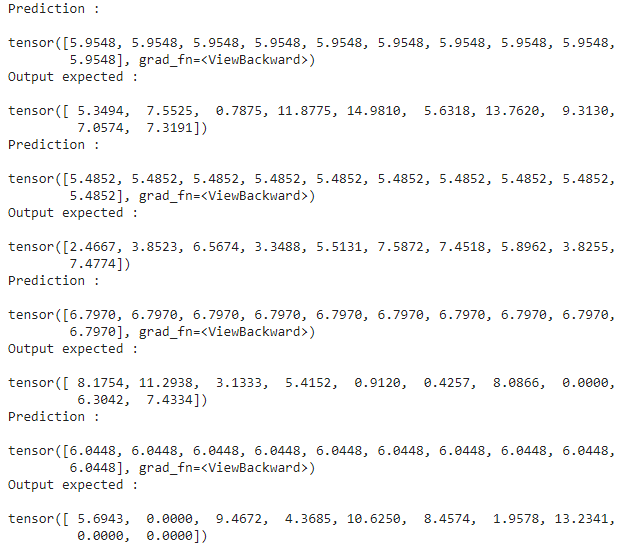

When I try to run my code I’m faced with the following problem : every prediction of my neural network is 0 (sometimes it gives other values in the first batch when I’m lucky enough…)

Here is the code of my project :

import torch

from torch import nn

from torch.utils.data import DataLoader

from torch.utils.data import Dataset

from torchvision.transforms import Lambda, Compose

import matplotlib.pyplot as plt

import numpy as np

n = 20 #Nombre de variables de direction résumant la variance du payoff

d = 20 #Dimension de l'option

N_data_training = 5000 #Taille de la base de donnée utile à l'apprentissage du réseau de neurones

N_data_test = 2500 #Taille de la base de donnée utile au test de la précision du réseau de neurones entrainé

type_product = "basket call" #Type de produit dérivé

S0 = 100 #Spot price

K = 100 #Strike

T = 1 #Maturité

r = 0.05 #Taux d'intérêt sans risque

sigma = 0.2 #Volatilité

# Nmc = 1000 #Nombre de trajectoires générées pour l'estimation de Monte-Carlo

learning_rate = 1e-3

device = "cuda" if torch.cuda.is_available() else "cpu"

print("Using {} device".format(device))

def payoff_derivative(N_data, type_product, d, S0, r, sigma, T): #### pay-offs test

payoff = np.zeros(N_data)

Z = np.zeros((N_data, d))

if type_product == "basket call":

for i in range(N_data):

Z[i, :] = S0*np.exp((r-0.5*sigma**2)*T + sigma*np.sqrt(T)*np.random.randn(d))

payoff[i] = np.maximum((1/d)*np.sum(Z[i, :], axis=0) - K, 0)

return (Z, payoff)

pure_data_training = payoff_derivative(N_data_training, type_product, d, S0, r, sigma, T) ##### pay-offs pour l'apprentissage du réseau de neurones

pure_data_test = payoff_derivative(N_data_test, type_product, d, S0, r, sigma, T) ##### pay-offs pour le test de précision

class Creation_Dataset(Dataset):

def __init__(self, pure_data):

self.Z = pure_data[0]

self.payoff = pure_data[1]

def __len__(self):

return len(self.payoff)

def __getitem__(self, idx):

return torch.tensor(self.Z[idx],dtype=torch.float), torch.tensor(self.payoff[idx],dtype=torch.float)

dataset_training = Creation_Dataset(pure_data_training)

dataset_test = Creation_Dataset(pure_data_test)

# Creation des data loaders

batch_size = 100

train_dataloader = DataLoader(dataset_training, batch_size)

test_dataloader = DataLoader(dataset_test, batch_size)

for X, y in train_dataloader:

print("Shape of X : ", X.shape, X.dtype)

print("Shape of y: ", y.shape, y.dtype)

print(X[0])

print(y[0])

break

class NeuralNetwork(nn.Module):

def __init__(self): ######méthode dans laquelle on définit les couches du réseau

super(NeuralNetwork, self).__init__()

self.flatten = nn.Flatten() ########## convertit en tenseur 1D

self.linear_relu_stack = nn.Sequential(

nn.Linear(d, n, bias=False),

nn.Linear(n, 6),

nn.ReLU(),

nn.Linear(6, 15),

nn.ReLU(),

nn.Linear(15, 10),

nn.ReLU(),

nn.Linear(10, 5),

nn.ReLU(),

nn.Linear(5, 1),

nn.ReLU()

)

def forward(self, x):

logits = self.linear_relu_stack(x)

return torch.reshape(logits, (-1,))

model = NeuralNetwork().to(device)

print(model)

loss_fn = nn.MSELoss(reduction='sum')

optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)

def train_loop(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset)

for batch, (X, y) in enumerate(dataloader):

# Compute prediction and loss

print("Shape of X : ", X.shape, X.dtype)

print("Shape of y: ", y.shape, y.dtype)

pred = model(X)

print(pred)

loss = loss_fn(pred, y)

# Backpropagation

optimizer.zero_grad()

loss.backward()

optimizer.step()

if batch % 100 == 0:

loss, current = loss.item(), batch * len(X)

print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

def test(dataloader, model):

model.eval()

test_loss, correct = 0, 0

with torch.no_grad():

for X, y in dataloader:

X, y = X.to(device), y.to(device)

pred = model(X)

test_loss += loss_fn(pred, y).item()

correct += float((pred == y).sum())

size = len(dataloader.dataset)

test_loss /= size

correct /= size

print(f"Accuracy: {100*correct:.2f}%, Avg loss (sqrt(MSE)) : {np.sqrt(test_loss):.3f} \n")

epochs = 2

for t in range(epochs):

print(f"Epoch {t+1}\n-------------------------------")

train(train_dataloader, model, loss_fn, optimizer)

test(test_dataloader, model)

So, using a DataLoader that creates batches of batch_size, I train my Neural Network in the train_loop() function and I print out my predictions’ tensor, and it turns out that all my predictions are zeros. I’ve seen a few topics with people facing this kind of problems with classification problems, but I’m still trying to solve it for predicting correct outputs.

Thank you for your time