I have already run the installCuda.sh script from NVIDIA.

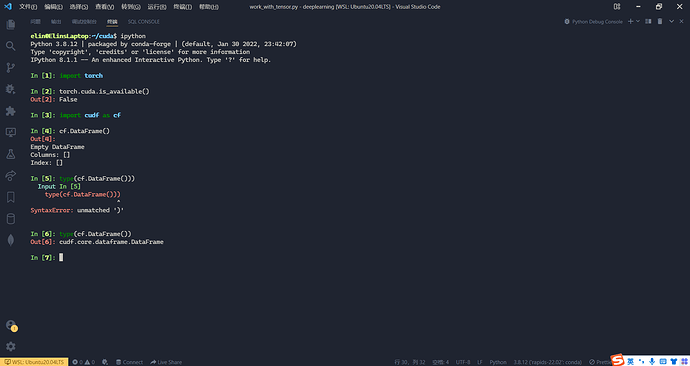

When I use cuDF (a CUDA GPU-accelerated, pandas-like library), it runs well, but torch does not work.

The weird thing is that it works very well on my Windows host. What the hell?

How should I fix this bug, guys? Please!

You might have installed the CPU-only binary; you can check this with e.g. `python -m torch.utils.collect_env`. This post had similar issues and managed to pick the right version.
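As a quicker complement to the full `collect_env` report, the same question (CPU-only binary or not?) can be checked from Python. This is only a minimal sketch: `describe_torch_build` is a hypothetical helper name, not part of PyTorch. It relies on `torch.version.cuda`, which is `None` for CPU-only builds, and on `torch.cuda.is_available()`.

```python
import importlib.util


def describe_torch_build():
    """Return a small dict describing the installed PyTorch build, if any.

    Hypothetical helper for illustration; not part of PyTorch itself.
    """
    if importlib.util.find_spec("torch") is None:
        # PyTorch is not installed in this environment at all.
        return {
            "installed": False,
            "version": None,
            "built_with_cuda": None,
            "cuda_available": False,
        }

    import torch

    return {
        "installed": True,
        "version": torch.__version__,
        # None here means a CPU-only binary was installed.
        "built_with_cuda": torch.version.cuda,
        # False here means no usable GPU is visible at runtime.
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in describe_torch_build().items():
        print(f"{key}: {value}")
```

If `built_with_cuda` is `None`, the CPU-only wheel was picked up. If it shows a CUDA version while `cuda_available` is `False`, the binary itself is a CUDA build and the problem is more likely in how the environment exposes the GPU.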

Thanks bud, but after trying your method, it still cannot use CUDA. This is what I received:

Collecting environment information…

PyTorch version: 1.11.0+cu115

Is debug build: False

CUDA used to build PyTorch: 11.5

ROCM used to build PyTorch: N/A

OS: Ubuntu 20.04.4 LTS (x86_64)

GCC version: (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0

Clang version: Could not collect

CMake version: Could not collect

Libc version: glibc-2.31

Python version: 3.9.12 | packaged by conda-forge | (main, Mar 24 2022, 23:25:59) [GCC 10.3.0] (64-bit runtime)

Python platform: Linux-5.10.16.3-microsoft-standard-WSL2-x86_64-with-glibc2.31

Is CUDA available: False

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: NVIDIA GeForce RTX 2060

Nvidia driver version: 511.65

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Versions of relevant libraries:

[pip3] numpy==1.21.5

[pip3] torch==1.11.0+cu115

[pip3] torchaudio==0.11.0+cu115

[pip3] torchvision==0.12.0+cu115

[conda] cudatoolkit 11.5.1 hcf5317a_9 nvidia

[conda] numpy 1.21.5 py39haac66dc_0 conda-forge

[conda] torch 1.11.0+cu115 pypi_0 pypi

[conda] torchaudio 0.11.0+cu115 pypi_0 pypi

[conda] torchvision 0.12.0+cu115 pypi_0 pypi
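One way to read the report above: `CUDA used to build PyTorch: 11.5` and the `+cu115` suffix show the installed binary is a CUDA build, while `Is CUDA available: False` shows the GPU is not usable at runtime. The sketch below does that comparison mechanically on a few lines copied from the report. `parse_collect_env` and `summarize` are hypothetical helper names, and treating `None` as the marker of a CPU-only build is an assumption about the report format.

```python
def parse_collect_env(text):
    """Parse 'key: value' lines of a collect_env report into a dict."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields[key.strip()] = value.strip()
    return fields


def summarize(fields):
    """Classify the report; the category strings are illustrative only."""
    built = fields.get("CUDA used to build PyTorch", "None")
    available = fields.get("Is CUDA available", "False") == "True"
    # Assumption: a CPU-only build reports None (or N/A) here.
    if built in ("None", "N/A"):
        return "cpu-only build"
    if not available:
        return "cuda build, but no usable GPU seen at runtime"
    return "cuda build and GPU usable"


# Lines copied from the report posted above.
REPORT = """\
PyTorch version: 1.11.0+cu115
CUDA used to build PyTorch: 11.5
Is CUDA available: False
GPU models and configuration: GPU 0: NVIDIA GeForce RTX 2060
Nvidia driver version: 511.65
"""

if __name__ == "__main__":
    print(summarize(parse_collect_env(REPORT)))
```

For this report the result is the second category, so the CPU-only explanation does not seem to apply here. The sketch does not identify the actual cause.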