Hi guys,

I have recently been working on a project involving neural networks.

I was surprised to find that PyTorch can compute the gradient of a loss function that involves quantiles, since I would expect the quantile operation to be non-differentiable.

I was asked to explain the exact principle behind this: why can PyTorch compute the gradient of a loss function that involves quantiles?
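To make the question concrete, here is a minimal sketch of the behaviour I mean. It is not my actual loss function: the toy tensor and the use of `torch.quantile` with its default linear interpolation are assumptions for illustration only.

```python
import torch

# Toy input, made up for illustration (not the data from my project).
x = torch.tensor([1.0, 3.0, 2.0, 4.0], requires_grad=True)

# Median via torch.quantile with the default linear interpolation.
# Sorted values are [1, 2, 3, 4], so the 0.5 quantile lies halfway
# between the values 2 and 3.
q = torch.quantile(x, 0.5)

# Backpropagate through the quantile; this runs without error.
q.backward()

print(q.item())  # the quantile value
print(x.grad)    # gradient of the quantile w.r.t. each element of x
```

As far as I can tell, the gradient is nonzero only at the two elements the quantile interpolates between (the values 2 and 3 here) and zero everywhere else. That makes me suspect the quantile is treated as a piecewise-linear function of the sorted inputs, but I am not sure this is the right explanation.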

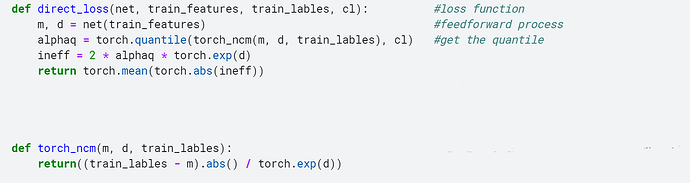

The screenshot above shows my custom loss function.

Can anyone answer my question?