class torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True)

The default value is dilation = 1. Does that mean every conv2d that doesn't explicitly set dilation = 0 will be a dilated convolution?

Dilation = 1 already means “no dilation”: 1-spacing = no gaps. I agree this convention is weird…

If you phrase it as “every dilation-th element is used”, it may be easier to remember. When defining dilation (or any op in general), it seems natural to me to talk about what is used/done rather than what is skipped/not done.

So once you try to write down a formula, you will probably end up with this convention. Using the same convention in code saves you from index juggling when turning formulas into code.
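To make the “every dilation-th element is used” reading concrete, here is a minimal plain-Python sketch of a 1D convolution (cross-correlation, no padding). The function name `dilated_conv1d` and the sample data are made up for illustration; this is not how PyTorch implements it internally, only the indexing convention written out.

```python
def dilated_conv1d(x, w, stride=1, dilation=1):
    """Valid (no padding) 1D cross-correlation over plain Python lists.

    Hypothetical helper for illustration only, not a PyTorch function.
    """
    # Number of input positions covered by one placement of the kernel.
    span = dilation * (len(w) - 1) + 1
    out = []
    for start in range(0, len(x) - span + 1, stride):
        # Kernel element k reads the input at start + k * dilation,
        # so dilation=1 reads adjacent elements (no gaps).
        out.append(sum(w[k] * x[start + k * dilation] for k in range(len(w))))
    return out


x = [1, 2, 3, 4, 5, 6, 7]
w = [1, 0, -1]

dense = dilated_conv1d(x, w, dilation=1)   # taps at start, start+1, start+2
spaced = dilated_conv1d(x, w, dilation=2)  # taps at start, start+2, start+4
print(dense)
print(spaced)
```

With this convention the index is simply `start + k * dilation`, and dilation = 1 falls out as the ordinary convolution with no special case.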

Of course, everyone has a different intuition about these things, but personally, I think the one pytorch implicitly suggests here can be useful.

Best regards

Thomas

Dilation is similar to stride. stride=1 is not weird, and hence dilation=1 is not weird either.

Ok, please forgive my words, “weird” wasn’t the appropriate formulation. But ‘=1’ can easily be misread as implying that some dilation is actually being applied, hence the existence of this topic…

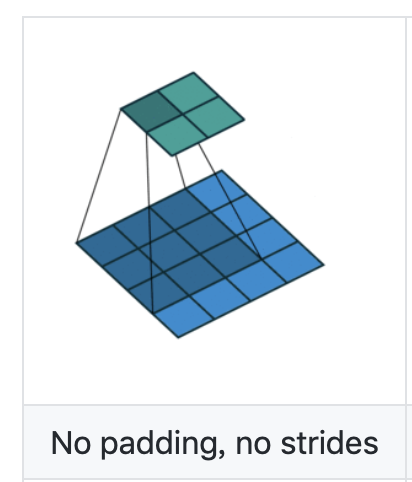

So in PyTorch, which image represents the correct understanding of dilation = 1?

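stride: the step length for moving the kernel across the input.

dilation: the step length between kernel elements, i.e. the spacing between the input positions that neighbouring kernel weights read.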
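The two step lengths can also be compared through the sizes they produce. The sketch below uses the output-size formula given in the `torch.nn.Conv2d` documentation, applied to one spatial dimension; the helper names `effective_kernel_size` and `conv_output_size` are hypothetical and only serve the illustration.

```python
def effective_kernel_size(kernel_size, dilation=1):
    # Input positions spanned by the kernel once its elements are spread out.
    return dilation * (kernel_size - 1) + 1


def conv_output_size(in_size, kernel_size, stride=1, padding=0, dilation=1):
    # Output-size formula from the torch.nn.Conv2d docs, for one dimension.
    return (in_size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


print(effective_kernel_size(3, dilation=1))  # default: plain 3-wide kernel
print(effective_kernel_size(3, dilation=2))  # same 3 weights, wider footprint
print(conv_output_size(32, 3))               # defaults: stride=1, dilation=1
print(conv_output_size(32, 3, stride=2))     # kernel moves in steps of 2
print(conv_output_size(32, 3, dilation=2))   # kernel elements are 2 apart
```

Stride changes how far the whole kernel jumps between outputs, while dilation changes how much input a single kernel placement covers.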

The first image shows dilation = 2, which means neighbouring elements of the convolving filter are spaced 2 apart along each row and column (as you can see in the picture, the filter covers 2 additional pixels in each row and column).
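Since the picture may not render everywhere, here is a small text sketch of which input pixels a kernel touches. It assumes a 3x3 kernel, which matches the “2 additional pixels” description; `kernel_footprint` is a hypothetical helper, not a PyTorch function.

```python
def kernel_footprint(kernel_size, dilation=1):
    """Return rows of text: 'x' where a kernel weight reads the input, '.' for a gap."""
    size = dilation * (kernel_size - 1) + 1           # width/height of the footprint
    taps = {k * dilation for k in range(kernel_size)}  # offsets read by the kernel
    return [
        "".join("x" if r in taps and c in taps else "." for c in range(size))
        for r in range(size)
    ]


for d in (1, 2):
    print(f"dilation={d}")
    for row in kernel_footprint(3, dilation=d):
        print(row)
```

For dilation=1 the footprint is a solid 3x3 block, and for dilation=2 the same nine weights are spread over a 5x5 area with gaps in between.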

Now, as to why the default value is 1 (when one might expect that to insert a single pixel into each row and column): kernels generally have odd dimensions (3x3, 5x5, 7x7), so adding a single pixel to their rows and columns would lead to an unbalanced weighted filter matrix (it would be fine for a 2x2 kernel, but in general we don't use those).

Now some of you might argue, “It can be useful in the case of a pooling layer!” But these layers are just for dimensionality reduction, which is mostly influenced by the “kernel_size” and “stride” values. Using dilation here would lead to a loss of pixel values that may represent important features of the input feature maps.

Hence dilation = 1 (the default) is accepted globally. Well, that's how I feel it should be, actually!

Have a Great Day.