I am writing a neural network and, as a basic sanity check, I train and validate it on the same input data, i.e. the validation data is identical to the training data.

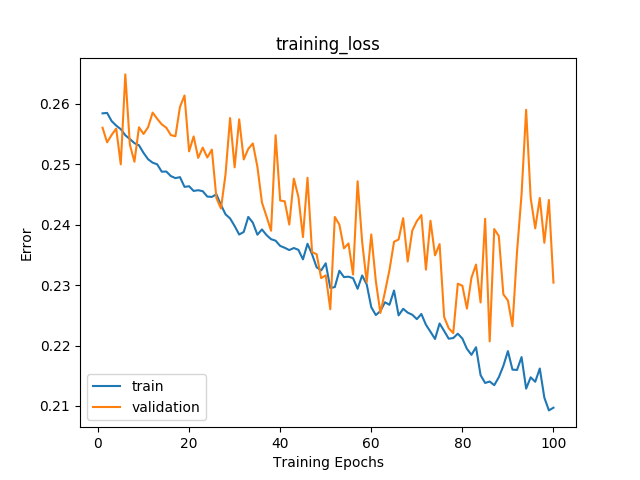
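A minimal, framework-free sketch of this sanity check, assuming a hypothetical linear model `w * x + b` and a toy dataset standing in for the real network and data (`mse` and `sanity_check` are illustrative names, not PyTorch APIs). With no layers whose behavior depends on train/eval mode, the validation loss on the training data should track the training loss closely. The only offset is that the training loss is computed before each update and the validation loss after it.

```python
def mse(w, b, data):
    """Mean squared error of the linear model y_hat = w * x + b on data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def sanity_check(data, epochs=50, lr=0.05):
    """Full-batch gradient descent where validation reuses the training data."""
    w, b = 0.0, 0.0
    history = []  # (training loss, validation loss) per epoch
    n = len(data)
    for _ in range(epochs):
        # Training loss: forward pass BEFORE the parameter update,
        # as in a typical loss.backward(); optimizer.step() loop.
        train_loss = mse(w, b, data)
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
        # Validation loss: same data, evaluated AFTER the update.
        val_loss = mse(w, b, data)
        history.append((train_loss, val_loss))
    return history


# Hypothetical toy data (y = 2x + 1), used for BOTH training and validation.
data = [(x, 2 * x + 1) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
history = sanity_check(data)
for epoch in (0, 9, 49):
    print(epoch, history[epoch])
```

Here each epoch's validation loss equals the next epoch's training loss exactly. With minibatches, the reported training loss is usually an average over updates made during the epoch, so a small, smooth gap between the two curves is still expected.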

With `.eval()` called before validation, the loss curves look like this:

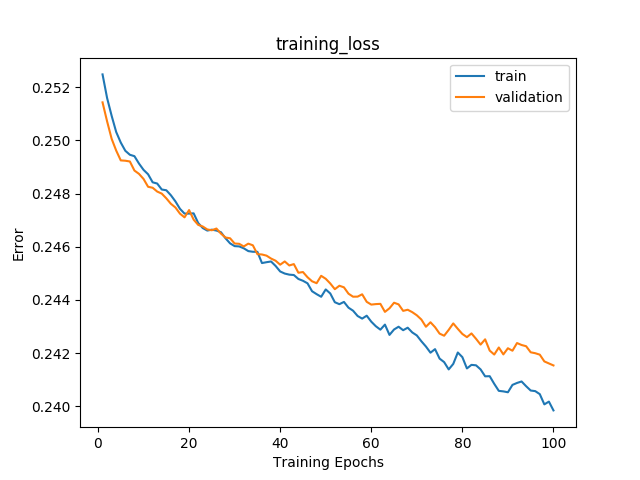

When I run the same code without calling `.eval()`, i.e. validating with the network still in training mode, the loss curves are smooth and, as expected, the training and validation losses are nearly identical.
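`.eval()` only changes layers that check the module's `training` flag, most commonly batch normalization and dropout. The sketch below assumes (the post does not say so) that the network contains batch normalization. It is a simplified, single-feature re-implementation without learnable scale/shift, using PyTorch's documented `BatchNorm1d` defaults (`momentum=0.1`, `eps=1e-5`, running mean initialized to 0, running variance to 1). `ToyBatchNorm1d` is a hypothetical name, not the real `torch.nn.BatchNorm1d`.

```python
import math


class ToyBatchNorm1d:
    """Hypothetical single-feature batch norm (no affine parameters)."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum = momentum
        self.eps = eps
        self.running_mean = 0.0
        self.running_var = 1.0
        self.training = True  # what model.train() / model.eval() toggles

    def __call__(self, xs):
        if self.training:
            n = len(xs)
            mean = sum(xs) / n
            var = sum((x - mean) ** 2 for x in xs) / n  # biased: used to normalize
            unbiased = var * n / (n - 1)  # unbiased: stored in the running estimate
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * unbiased
        else:
            # Eval mode: normalize with the running estimates, not the batch.
            mean, var = self.running_mean, self.running_var
        return [(x - mean) / math.sqrt(var + self.eps) for x in xs]


batch = [4.0, 5.0, 6.0, 7.0]  # hypothetical activations, batch mean 5.5
bn = ToyBatchNorm1d()
for _ in range(5):  # a few "training" forward passes on the same batch
    train_out = bn(batch)
bn.training = False  # equivalent of calling .eval()
eval_out = bn(batch)
print("train mode:", train_out)
print("eval mode: ", eval_out)
```

After only a few training passes the running statistics still lag far behind the batch statistics, so the same batch is normalized very differently in eval mode. The gap shrinks as training continues. It never vanishes exactly, because training mode normalizes with the biased batch variance while the running estimate stores the unbiased one.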

What might be causing this behavior in a network’s implementation?

My code is written in PyTorch 0.4.0.