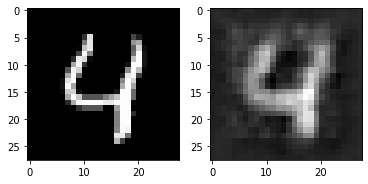

Hello. I trained a network and saved it. Then I loaded it back, split it into three modules, fed a batch of images into the first module, and took the output from the last one. But the output of these three chained modules has low resolution, and I don't understand why this happens. Here is my code:

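```python
import torch
import torch.nn as nn
import matplotlib.pyplot as plt

# ConvAutoencoder, train() and test_loader are defined elsewhere in my script (not shown)
model = ConvAutoencoder()
max_epochs = 10
outputs = train(model, num_epochs=max_epochs)

# Save the whole model object, then load it back.
# weights_only=False is needed on PyTorch >= 2.6 to unpickle a full model.
torch.save(model, 'model1.pth')
model1 = torch.load('model1.pth', weights_only=False)

# Run the full model on a test batch and show one reconstruction
imgs3, labels = next(iter(test_loader))
f = model1(imgs3)
plt.imshow(f[13][0].detach().numpy(), cmap="gray")
```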
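Before splitting, it can help to see which registered submodules the full model's `forward()` actually calls, and in what order. The sketch below uses forward hooks for that; `trace_children` and `Demo` are hypothetical names made up for illustration, not part of PyTorch or of the code above.

```python
import torch
import torch.nn as nn


def trace_children(model, x):
    """Run model(x) once and record each call to a direct child module.

    Returns a list of (child_name, output_shape) in call order. Only nn.Module
    calls are seen; torch.nn.functional calls (F.relu, ...) are invisible here.
    """
    calls = []
    handles = [
        child.register_forward_hook(
            # default arg binds the current name; hook args are (module, input, output)
            lambda module, inputs, output, name=name: calls.append((name, tuple(output.shape)))
        )
        for name, child in model.named_children()
    ]
    try:
        with torch.no_grad():
            model(x)
    finally:
        for handle in handles:
            handle.remove()  # detach the hooks so the model behaves normally again
    return calls


class Demo(nn.Module):
    """Hypothetical model whose forward() reuses one pooling layer."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 1, 3, padding=1)
        self.pool = nn.MaxPool2d(2)

    def forward(self, x):
        return self.pool(self.pool(self.conv(x)))


model = Demo().eval()
print([name for name, _ in model.named_children()])   # registered: ['conv', 'pool']
print(trace_children(model, torch.rand(1, 1, 8, 8)))  # called: conv, pool, pool
```

If a child name shows up more than once in the trace, or the trace order differs from `model.children()`, then slicing `children()` into `nn.Sequential` cannot reproduce `forward()`.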

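```python
# Split the loaded model into three parts using its registered child modules
children = list(model1.children())
l1 = nn.Sequential(*children[:3])   # first three children
l2 = nn.Sequential(*children[3:4])  # fourth child
l3 = nn.Sequential(*children[4:])   # remaining children
print('l1 =', l1)
print('l2 =', l2)
print('l3 =', l3)
```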
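A likely cause, assuming `ConvAutoencoder.forward` (not shown) is written like the hypothetical `TinyAE` below: `model.children()` yields each registered submodule once, in the order it was assigned in `__init__`, and knows nothing about `forward()`. An `nn.Sequential` built from it therefore skips functional calls such as `F.relu` or `torch.sigmoid`, and applies a layer that `forward()` reuses (here one `nn.MaxPool2d` called twice) only once. `TinyAE` is a stand-in, not the model from the question.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyAE(nn.Module):
    """Hypothetical autoencoder; NOT the ConvAutoencoder from the question."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 4, 3, padding=1)
        self.conv2 = nn.Conv2d(4, 8, 3, padding=1)
        self.pool = nn.MaxPool2d(2, 2)  # registered once...
        self.t_conv1 = nn.ConvTranspose2d(8, 4, 2, stride=2)
        self.t_conv2 = nn.ConvTranspose2d(4, 1, 2, stride=2)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # ...but called twice,
        x = self.pool(F.relu(self.conv2(x)))  # and ReLU is functional, not a child
        x = F.relu(self.t_conv1(x))
        return torch.sigmoid(self.t_conv2(x))


model = TinyAE().eval()
print([type(m).__name__ for m in model.children()])
# ['Conv2d', 'Conv2d', 'MaxPool2d', 'ConvTranspose2d', 'ConvTranspose2d']

seq = nn.Sequential(*model.children())  # no ReLU, no sigmoid, pool applied once
x = torch.rand(2, 1, 28, 28)
with torch.no_grad():
    full = model(x)
    split = seq(x)
print(full.shape)   # torch.Size([2, 1, 28, 28])
print(split.shape)  # torch.Size([2, 1, 56, 56])
```

If `ConvAutoencoder` has the same five children, the slices `[:3]`, `[3:4]` and `[4:]` would chain them in this order and produce this kind of mismatch: the image is pooled once instead of twice, then upsampled twice, with no activations in between.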

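```python
# Run a test batch through the three parts in sequence
imgs2, labels = next(iter(test_loader))
y1 = l1(imgs2)
# print(y1.shape)
y2 = l2(y1)
y3 = l3(y2)

# Left: input image, right: output of the chained parts
plt.subplot(1, 2, 1)
plt.imshow(imgs2[13][0].detach().numpy(), cmap="gray")
plt.subplot(1, 2, 2)
plt.imshow(y3[13][0].detach().numpy(), cmap="gray")
```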
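One way to split a network so the three parts reproduce `forward()`, again assuming the hypothetical `TinyAE` layout: build each part explicitly from the model's own layer objects (so no weights are copied or retrained), add the activations as `nn.ReLU` / `nn.Sigmoid` modules, and list the shared pooling layer at every place `forward()` calls it. The stage boundaries chosen here are an assumption; they can go anywhere the real `forward()` allows.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyAE(nn.Module):
    """Hypothetical autoencoder; NOT the ConvAutoencoder from the question."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 4, 3, padding=1)
        self.conv2 = nn.Conv2d(4, 8, 3, padding=1)
        self.pool = nn.MaxPool2d(2, 2)
        self.t_conv1 = nn.ConvTranspose2d(8, 4, 2, stride=2)
        self.t_conv2 = nn.ConvTranspose2d(4, 1, 2, stride=2)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = F.relu(self.t_conv1(x))
        return torch.sigmoid(self.t_conv2(x))


model = TinyAE().eval()

# Reuse the model's layer objects; the same MaxPool2d object may appear twice
# in one nn.Sequential and is simply called at both positions.
l1 = nn.Sequential(model.conv1, nn.ReLU(), model.pool,
                   model.conv2, nn.ReLU(), model.pool)  # encoder
l2 = nn.Sequential(model.t_conv1, nn.ReLU())            # first decoder stage
l3 = nn.Sequential(model.t_conv2, nn.Sigmoid())         # output stage

x = torch.rand(2, 1, 28, 28)
with torch.no_grad():
    full = model(x)
    y3 = l3(l2(l1(x)))
print(y3.shape, torch.allclose(full, y3))  # same shape as full, and True
```

For the model in the question, the same pattern would use the loaded model's own attributes (for example `model1.conv1`), but those names are guesses until the `ConvAutoencoder` definition is known.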