For example:

import torch

fc = torch.nn.Linear(128, 512)

data1 = torch.randn(4, 512, 128)

data2 = data1[0:1, :200, :]  # the length of the data is changed (512 -> 200)

result1 = fc(data1)[0:1, :200, :]

result2 = fc(data2)

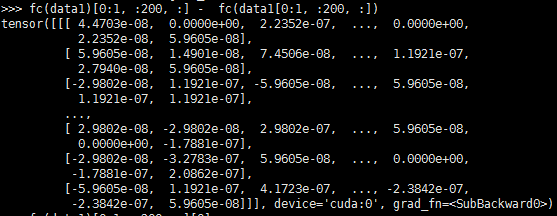

print(result1 - result2)  # result1 and result2 should be the same, but they differ on the order of 1e-7

I couldn't find any reason for this result. I hope someone can help me. Thank you. (The torch version is 1.1.0.)
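To put a number on the mismatch, here is a minimal self-contained sketch of the same comparison. It assumes the intent was to keep the first sample and the first 200 positions (`[0:1, :200, :]`). The fixed seed, the `max_abs_diff` name, and the `atol=1e-5` tolerance are my own choices for illustration. It prints the largest absolute difference next to the float32 machine epsilon (2^-23, about 1.19e-7), so the two can be compared directly. A difference of that size would be consistent with floating-point rounding, but the sketch only measures it and does not prove the cause.

```python
import torch

torch.manual_seed(0)  # assumption: fixed seed, only so repeated runs use the same data

fc = torch.nn.Linear(128, 512)
data1 = torch.randn(4, 512, 128)
data2 = data1[0:1, :200, :]  # assumed intent: first sample, first 200 positions

with torch.no_grad():  # gradients are not needed for this comparison
    result1 = fc(data1)[0:1, :200, :]  # run the full batch, then slice
    result2 = fc(data2)                # slice first, then run

# Largest element-wise deviation between the two outputs
max_abs_diff = (result1 - result2).abs().max().item()

# float32 machine epsilon, 2**-23 (about 1.19e-7)
float32_eps = 2.0 ** -23

print("shapes:", tuple(result1.shape), tuple(result2.shape))
print("max abs diff:", max_abs_diff)
print("float32 eps:", float32_eps)
# Tolerance-based comparison instead of exact equality (atol is an illustrative choice)
print("allclose:", torch.allclose(result1, result2, atol=1e-5))
```

If `allclose` reports `True` while the raw subtraction is not exactly zero, the two results agree to within float32 precision rather than bit for bit.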

The results differ under the conditions shown in the following image: