class myModel(nn.Module):
    def __init__(self):
        super(myModel, self).__init__()
        # self.keep_prob=0.9
        # self.block_size=7
        self.block1 = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, padding=1, bias=False),
            nn.GroupNorm(4, 32, affine=False),
            # nn.BatchNorm2d(32, affine=False),
            nn.ReLU())
        self.block2 = nn.Sequential(...)
        self.block3 = nn.Sequential(...)
        self.block4 = nn.Sequential(...)
        self.block5 = nn.Sequential(...)
        self.block6 = nn.Sequential(...)
        self.block7 = nn.Sequential(...)
        self.block1.apply(weights_init)
        self.block2.apply(weights_init)
        self.block3.apply(weights_init)
        self.block4.apply(weights_init)
        self.block5.apply(weights_init)
        self.block6.apply(weights_init)
        self.block7.apply(weights_init)
        return

    def forward(self, input, keep_prob=1.0):
        x = self.block1(self.input_norm(input))
        # x = DropBlock2D(keep_prob)(x)
        x = self.block2(x)
        # x = DropBlock2D(keep_prob)(x)
        x = self.block3(x)
        # x = DropBlock2D(keep_prob)(x)
        x = self.block4(x)
        x = self.block5(x)
        # x = DropBlock2D(keep_prob)(x)
        x = self.block6(x)
        # DropBlock2D is instantiated here, inside forward, on every call
        x = DropBlock2D(keep_prob=keep_prob)(x)
        x = self.block7(x)
        # x_features=self.features(input)
        x = x.view(x.size(0), -1)
        return L2Norm()(x)

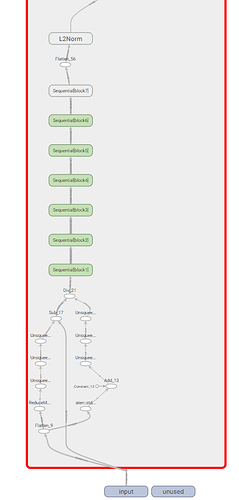

writer = SummaryWriter(log_dir=args.tensorboardx_log, comment='Hardnet')
dummy_input = torch.zeros((1, 1, 32, 32))
temp_input = Variable(dummy_input)
dummy_keep_prob = Variable(torch.tensor([1]))
writer.add_graph(model, (temp_input, dummy_keep_prob))

When I use tensorboardX to save the model graph with the code above, the submodule DropBlock2D does not appear in the graph. What am I doing wrong? Thanks in advance.
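A minimal sketch of two things that might be worth checking, offered as a guess rather than a confirmed diagnosis. First, a module constructed inside `forward` is never registered as a child of the model. Second, a dropout-style layer that returns its input unchanged when `keep_prob` is 1 performs no operations, so a traced graph would likely have nothing to show for it. `ToyDrop` below is a hypothetical stand-in for DropBlock2D (the real layer may behave differently), and `InlineModel` and `RegisteredModel` are illustrative names only.

```python
import torch
import torch.nn as nn


class ToyDrop(nn.Module):
    """Hypothetical stand-in for DropBlock2D; NOT the real implementation."""

    def __init__(self, keep_prob=1.0):
        super().__init__()
        self.keep_prob = keep_prob

    def forward(self, x):
        # Assumption: like many dropout-style layers, pass the input through
        # untouched in eval mode or when nothing should be dropped.
        if not self.training or self.keep_prob >= 1.0:
            return x
        mask = (torch.rand_like(x) < self.keep_prob).to(x.dtype)
        return x * mask / self.keep_prob


class InlineModel(nn.Module):
    """Builds the drop layer inside forward, as in the question."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 4, kernel_size=3, padding=1, bias=False)

    def forward(self, x, keep_prob=1.0):
        return ToyDrop(keep_prob)(self.conv(x))


class RegisteredModel(nn.Module):
    """Builds the drop layer once in __init__, so it is a registered child."""

    def __init__(self, keep_prob=0.9):
        super().__init__()
        self.conv = nn.Conv2d(1, 4, kernel_size=3, padding=1, bias=False)
        self.drop = ToyDrop(keep_prob)

    def forward(self, x):
        return self.drop(self.conv(x))


inline_children = [name for name, _ in InlineModel().named_children()]
registered_children = [name for name, _ in RegisteredModel().named_children()]
print(inline_children)      # ['conv']
print(registered_children)  # ['conv', 'drop']
```

If this matches the situation, creating the layer in `__init__` and passing a dummy `keep_prob` below 1 with the model in training mode would be the first thing to try; whether the node then shows up also depends on how tensorboardX traces the model.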