I ran into something really strange. This is my code:

    import torch

    # test_loader is defined elsewhere (not shown)
    def model_eval(model):
        model_the_wrong_images = []
        model_the_real_label = []
        model_the_pred = []
        correct = 0
        total = 0
        with torch.no_grad():
            model.eval()
            for idx, test in enumerate(test_loader):
                image, label = test
                total += image.size()[0]
                pred = torch.argmax(model(image), 1)
                correct += (pred == label).sum().item()
                # Debug output for a single batch
                if idx == 4:
                    #print(model(image))
                    print(torch.argmax(model(image), 1))
                    print(pred)
                    print(torch.argmax(model(image), 1))
                    print(pred == torch.argmax(model(image), 1))
                    print(correct)
                    print(label)
                # Collect the misclassified samples
                for i in range(len(image)):
                    if not (pred == label)[i].item():
                        model_the_wrong_images.append(image[i])
                        model_the_real_label.append(label[i])
                        model_the_pred.append(torch.argmax(model(image[i].unsqueeze_(0)), 1))
        print(correct)
        print(total)
        print('The accuracy on testset is : %f'%(correct / total))
        return model_the_wrong_images, model_the_real_label, model_the_pred

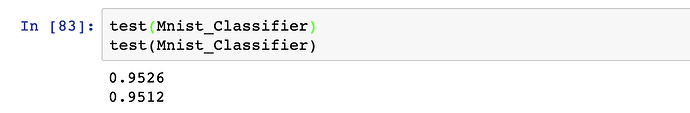

I wrote this function to evaluate my model, but I found that when I repeat the evaluation with a model that has already been trained, the accuracy changes every time.

Why don't my model's parameters stay constant? I called model.eval(), but it doesn't seem to work.
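
To check whether the parameters really change between two evaluation runs, something like the following could be used. This is only a sketch: the small nn.Sequential model and the random input are hypothetical stand-ins, not my real model or test_loader, and the helper names snapshot and changed_entries are made up for illustration.

```python
import copy

import torch
import torch.nn as nn


def snapshot(model):
    # Deep-copy the state dict so later in-place updates cannot alter the snapshot.
    return copy.deepcopy(model.state_dict())


def changed_entries(before, after):
    # Names of parameters/buffers whose values differ between two snapshots.
    return [name for name in before if not torch.equal(before[name], after[name])]


# Hypothetical stand-in model and input, for illustration only.
torch.manual_seed(0)
model = nn.Sequential(nn.Linear(8, 16), nn.BatchNorm1d(16), nn.ReLU(), nn.Linear(16, 4))
x = torch.randn(32, 8)

# A forward pass in eval mode should leave every parameter and buffer untouched.
model.eval()
before = snapshot(model)
with torch.no_grad():
    model(x)
eval_changed = changed_entries(before, snapshot(model))
print(eval_changed)

# In train mode, BatchNorm updates its running statistics even under no_grad.
model.train()
before = snapshot(model)
with torch.no_grad():
    model(x)
train_changed = changed_entries(before, snapshot(model))
print(train_changed)
```

If the first list is empty for the real model too, the weights are not changing, and the varying accuracy would have to come from somewhere else in the forward pass.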

So I printed the output and found that it changes. Going further, I printed the output for the batch with idx == 4 during testing, and something terrible happened. The outputs of the if block are:

    tensor([9, 7, 2, 4])
    tensor([9, 7, 3, 4])
    tensor([9, 7, 3, 4])
    tensor([1, 1, 1, 1], dtype=torch.uint8)
    17
    tensor([9, 7, 3, 4])

How can pred and torch.argmax(model(image), 1) be two different things? I get pred from pred = torch.argmax(model(image), 1)!
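
A minimal sketch of a check for this kind of nondeterminism: run the same input through the model several times in eval mode and compare the raw outputs. The two tiny networks below are hypothetical and are not my model. They only illustrate one possible cause, which I have not confirmed applies here: torch.nn.functional.dropout defaults to training=True, so it is not switched off by model.eval() unless training=self.training is passed, whereas the nn.Dropout module does follow model.eval().

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ModuleDropoutNet(nn.Module):
    """Hypothetical net using nn.Dropout, which model.eval() disables."""

    def __init__(self):
        super().__init__()
        self.drop = nn.Dropout(p=0.5)
        self.fc = nn.Linear(8, 4)

    def forward(self, x):
        return self.fc(self.drop(x))


class FunctionalDropoutNet(nn.Module):
    """Hypothetical net calling F.dropout without passing the training flag."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(8, 4)

    def forward(self, x):
        # training defaults to True here, so dropout stays active in eval mode.
        # Passing training=self.training would make it respect model.eval().
        return self.fc(F.dropout(x, p=0.5))


def is_deterministic(model, x, repeats=5):
    # True if repeated forward passes on the same input give identical outputs.
    model.eval()
    with torch.no_grad():
        first = model(x)
        return all(torch.equal(first, model(x)) for _ in range(repeats))


torch.manual_seed(0)
x = torch.randn(4, 8)
module_ok = is_deterministic(ModuleDropoutNet(), x)
functional_ok = is_deterministic(FunctionalDropoutNet(), x)
print(module_ok, functional_ok)
```

Calling is_deterministic(model, image) on one batch from test_loader would show whether the real model behaves like the second network.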

Can anyone tell me why? Thanks a lot!