I am new to PyTorch. I was using fastai to solve some simple problems, and now I want to learn PyTorch. I followed the procedure and successfully loaded the training and testing parts of my dataset into data loaders. I also applied some transformations before feeding the data to the data loaders.

Now I want to display a batch of images before feeding it to the neural network. I’ve tried every solution I could find to display the batch, but no luck yet.

I followed this article as well but I am still getting errors. Why is there no simple method, like in fastai, to display a batch of images with one line of code?

Can anyone point me to a method for displaying a grid/batch of images using PyTorch?

Thank you in advance.

What’s the error that you are getting?

At the very least you could come up with something like this:

    import matplotlib.pyplot as plt

    def visualize_imgs(imgs, labels, row=3, cols=11):
        # convert (N, C, H, W) tensors to (N, H, W, C) arrays for matplotlib
        imgs = imgs.detach().cpu().numpy().transpose(0, 2, 3, 1)
        # now we need to unnormalize our images.
        fig = plt.figure(figsize=(20, 5))
        for i in range(imgs.shape[0]):
            ax = fig.add_subplot(row, cols, i + 1, xticks=[], yticks=[])
            # if your images are 1 channel only, remove the channel dimension
            # so that matplotlib can work with it (e.g. change 28x28x1 to 28x28!)
            # like this ax.imshow(imgs[i].squeeze(), cmap='gray')
            ax.imshow(imgs[i])
            ax.set_title(labels[i].item())
        plt.show()

This should work as well. You may need to adapt it to your needs; for example, row and cols need to be set based on your batch size (row * cols must be at least the batch size). If you have normalized the inputs, unnormalize them as well.
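The unnormalization step mentioned above could look roughly like this. It is a minimal sketch, assuming the inputs were normalized with `transforms.Normalize(mean, std)`; the helper name `unnormalize` and the default values of 0.5 are assumptions for illustration, so substitute your own statistics.

```python
import torch

def unnormalize(imgs, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)):
    """Invert Normalize(mean, std) for a batch shaped (N, C, H, W)."""
    # Reshape the per-channel statistics so they broadcast over N, H and W.
    mean = torch.tensor(mean, dtype=imgs.dtype, device=imgs.device).view(1, -1, 1, 1)
    std = torch.tensor(std, dtype=imgs.dtype, device=imgs.device).view(1, -1, 1, 1)
    # Normalize computes (x - mean) / std, so the inverse is x * std + mean.
    # Clamp to [0, 1], the float range matplotlib expects.
    return (imgs * std + mean).clamp(0, 1)
```

You would call `imgs = unnormalize(imgs)` on the tensor batch before converting it to a NumPy array for plotting.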

I am trying it like this.

    transf = transforms.Compose([transforms.Resize(255),
                                 transforms.ToTensor(),
                                 transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
                                 ])

    trainloader = torch.utils.data.DataLoader(train_dataset, batch_size=8, shuffle=True)

    data_iter = iter(trainloader)
    images, labels = next(data_iter)

    fig, axes = plt.subplots(figsize=(10, 4), ncols=4)
    for ii in range(4):
        ax = axes[ii]
        helper.imshow(images[ii], ax=ax, normalize=False)

But I am getting this error:

RuntimeError: invalid argument 0: Sizes of tensors must match except in dimension 0. Got 406 and 513 in dimension 3 at c:\a\w\1\s\tmp_conda_3.6_091443\conda\conda-bld\pytorch_1544087948354\work\aten\src\th\generic/THTensorMoreMath.cpp:1333

The error seems to point to the DataLoader, which cannot stack tensors of different spatial shapes.
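To illustrate that failure mode in isolation, here is a minimal sketch. The two tensor shapes are hypothetical: the widths are taken from the error message, and the height of 255 is an assumption. Stacking them into one batch, which is roughly what the DataLoader's default collation does, raises a `RuntimeError`.

```python
import torch

# Two hypothetical image tensors (C, H, W) that differ only in width.
a = torch.zeros(3, 255, 406)
b = torch.zeros(3, 255, 513)

try:
    # Batching requires all samples to have the same shape.
    batch = torch.stack([a, b])
    stack_failed = False
except RuntimeError as err:
    stack_failed = True
    print("stack failed:", err)
```

If every sample had the same shape, `torch.stack` would return a single tensor of shape (N, C, H, W) instead.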

Are you sure you are using the posted transformation?

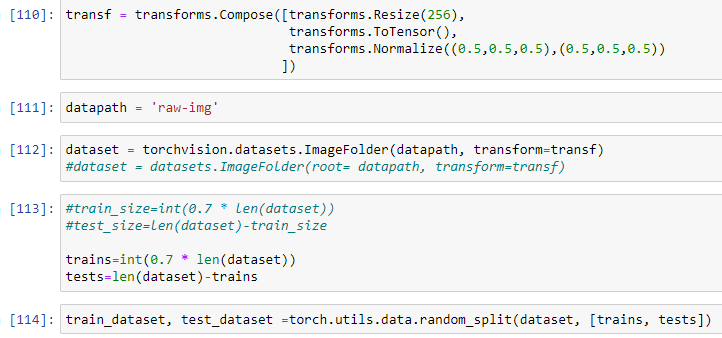

Yes, I am using the same transformations as I posted. Below is the screenshot of it.

I also tried transforms.Resize(256,256), but that again ends with an error, which says I am passing the value twice.

The error claims that the images differ in size along dimension 3 (the width, for a batch in [N, C, H, W] layout):

Got 406 and 513 in dimension 3

so it seems the Resize is not used at all.

Could you post the complete stack trace? Maybe the error isn’t raised by the DataLoader at all.

Here is the complete code.

    %matplotlib inline
    %config InlineBackend.figure_format = 'retina'

    import torch
    import torchvision
    #import torchvision.transforms as transforms
    from torchvision import datasets, transforms
    import matplotlib.pyplot as plt
    import numpy as np
    import helper

    transf = transforms.Compose([transforms.Resize(255),
                                 transforms.ToTensor(),
                                 transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
                                 ])

    datapath = 'raw-image2'
    dataset = datasets.ImageFolder(datapath, transform=transf)

    trains = int(0.7 * len(dataset))
    tests = len(dataset) - trains
    train_dataset, test_dataset = torch.utils.data.random_split(dataset, [trains, tests])

    trainload = torch.utils.data.DataLoader(train_dataset, batch_size=8, shuffle=True)
    testload = torch.utils.data.DataLoader(test_dataset, batch_size=8, shuffle=True)

    data_iter = iter(trainload)
    images, labels = next(data_iter)

    fig, axes = plt.subplots(figsize=(10, 4), ncols=4)
    for ii in range(4):
        ax = axes[ii]
        helper.imshow(images[ii], ax=ax, normalize=False)

This is the code I am using, but no luck.

Unfortunately, I cannot debug more without seeing the actual stacktrace or any more debugging information about the images etc.

Your code works using larger images as seen here:

    transf = transforms.Compose([transforms.Resize(255),
                                 transforms.ToTensor(),
                                 transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
                                 ])

    dataset = datasets.FakeData(size=100, image_size=(3, 320, 480), transform=transf)

    trains = int(0.7 * len(dataset))
    tests = len(dataset) - trains
    train_dataset, test_dataset = torch.utils.data.random_split(dataset, [trains, tests])

    trainload = torch.utils.data.DataLoader(train_dataset, batch_size=8, shuffle=True)
    testload = torch.utils.data.DataLoader(test_dataset, batch_size=8, shuffle=True)

    data_iter = iter(trainload)
    images, labels = next(data_iter)

    fig, axes = plt.subplots(figsize=(10, 4), ncols=4)
    for ii in range(4):
        ax = axes[ii]
        ax.imshow(images[ii].permute(1, 2, 0))