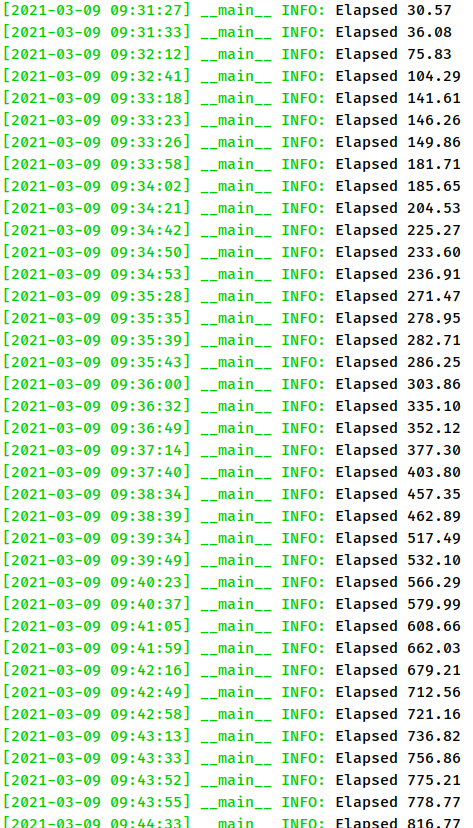

I am training a PyTorch model and I notice that the time per step keeps increasing, as shown below:

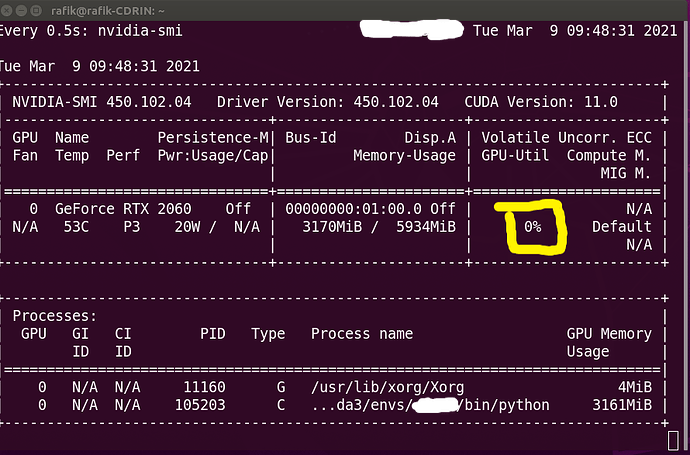

I also notice that my GPU utilization stays at 0%.

Here is the transform that I am using:

    def get_transform(config):
        scale = torchvision.transforms.Lambda(lambda x: x.astype(np.float32) / 255.)
        identity = torchvision.transforms.Lambda(lambda x: x)
        size = config.transform.mpiifacegaze_face_size
        if size != 448:
            resize = torchvision.transforms.Resize((size, size))
        else:
            resize = identity
        if config.transform.mpiifacegaze_gray:
            to_gray = torchvision.transforms.Lambda(lambda x: cv2.cvtColor(
                cv2.equalizeHist(cv2.cvtColor(x, cv2.COLOR_BGR2GRAY)),
                cv2.COLOR_GRAY2BGR))
        else:
            to_gray = identity
        transform = torchvision.transforms.Compose([
            resize,
            to_gray,
            torchvision.transforms.Lambda(lambda x: np.transpose(x, [2, 0, 1])),
            scale,
            torch.from_numpy,
            torchvision.transforms.Normalize(mean=[0.406, 0.456, 0.485],
                                             std=[0.225, 0.224, 0.229]),
        ])
        return transform
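
To narrow down whether the transform itself contributes to the growing step time, one option is to time repeated calls to it in isolation. The following is only a minimal sketch using the standard library; `time_calls` and `summarize` are hypothetical helper names, not part of PyTorch or torchvision, and the sketch does not measure the rest of the training loop.

```python
import time
from statistics import mean


def time_calls(fn, arg, repeats=50):
    """Return the wall-clock duration (seconds) of each of `repeats` calls to fn(arg)."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(arg)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Compare the mean of the first and second half to spot an upward drift."""
    half = len(durations) // 2
    return {
        "first_half_mean": mean(durations[:half]),
        "second_half_mean": mean(durations[half:]),
    }
```

For example, `summarize(time_calls(get_transform(config), image))`, where `image` is assumed to be a single sample in the format the dataset yields. If the two means are close, the slowdown probably comes from somewhere other than the transform.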