Hello!

I’m trying to understand in detail what torch.Tensor.cuda() does.

I thought it moves the tensor from CPU memory to GPU memory, with the CPU performing the store operations to GPU memory without involving the GPU (please correct me if that’s wrong).

import torch
import torch.distributed as dist
import torchvision.models as models
from torch.profiler import profile, record_function, ProfilerActivity

inputs = torch.randn(1000)

with profile(activities=[ProfilerActivity.CPU, ProfilerActivity.CUDA], record_shapes=True) as prof:
    inputs = inputs.cuda()

print(prof.key_averages().table(sort_by="cuda_time_total", row_limit=10))

This is the code that I’m profiling. It simply creates a tensor of 1000 floats and moves it to the GPU.
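For scale, here is a rough back-of-the-envelope size of the data being moved. It assumes the tensor uses the default dtype of torch.randn, float32 (4 bytes per element). This is plain Python arithmetic, not a PyTorch measurement, and the variable names are just for illustration.

```python
from array import array

# Assumption: torch.randn(1000) produces float32 values (the default dtype),
# so each element occupies the size of a C float.
num_elements = 1000
bytes_per_element = array("f").itemsize  # size of a C float on this platform
payload_bytes = num_elements * bytes_per_element

print(f"payload size: {payload_bytes} bytes")
```

So the copy itself is only a few kilobytes, which is part of why I did not expect to see much GPU activity.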

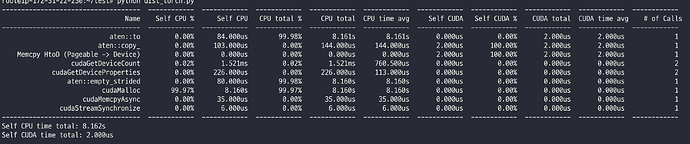

And here is the profile result.

I actually see some CUDA kernels running, and GPU utilization is not 0%, which is not what I expected. Could anyone tell me what I’m misunderstanding here?