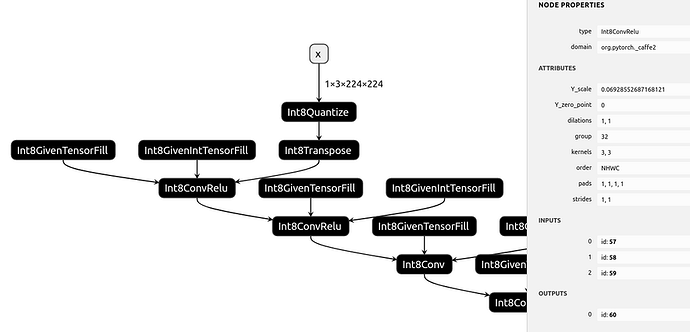

I see that PyTorch uses ONNX internally to transport a quantized model (built with the PyTorch quantization API) to Caffe2, and I can export this internal quantized model representation, as shown in the image:

As we can see, every operator in the model is a custom op that maps directly to Caffe2. This is not flexible enough if we want to use the quantized model as an exchange format.

AFAIK, TensorFlow can export a QAT (quantization-aware training) model that contains FakeQuant ops and then convert it to TFLite. In my opinion, we could export a quantized model that contains only FakeQuant ops (as ONNX custom ops) plus standard ONNX ops. This would make the quantized model much more flexible as an exchange format.
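For reference, here is a minimal sketch of what a per-tensor affine FakeQuant op computes: it quantizes a float to the integer grid and immediately dequantizes it, so the output stays a float but only takes values the quantized kernel could represent. It is the same arithmetic as a standard ONNX `QuantizeLinear` followed by `DequantizeLinear`. The function name `fake_quantize` is hypothetical (not a PyTorch or ONNX API), and the uint8 range `[0, 255]` is an assumption.

```python
def fake_quantize(xs, scale, zero_point, qmin=0, qmax=255):
    """Hypothetical helper: quantize then dequantize each value (per-tensor, affine).

    Assumes a uint8 target range [qmin, qmax] = [0, 255] by default.
    """
    out = []
    for x in xs:
        # Map to the integer grid; Python's round() rounds half to even.
        q = round(x / scale) + zero_point
        # Saturate to the representable integer range.
        q = max(qmin, min(qmax, q))
        # Map back to float: the value a real int8/uint8 kernel would "see".
        out.append((q - zero_point) * scale)
    return out


# Values outside the range are clamped; values inside snap to multiples of scale.
print(fake_quantize([-100.0, 0.3, 1.0, 100.0], scale=0.25, zero_point=128))
# → [-32.0, 0.25, 1.0, 31.75]
```

Because only `scale` and `zero_point` are needed to describe this op, a backend could read them from the FakeQuant nodes and decide on its own how to fuse the surrounding standard ops into real quantized kernels.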

What do you think about this approach? Thanks.