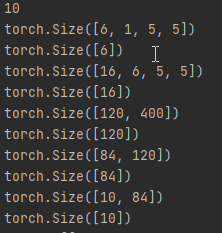

Here is the network code and the test result:

    import torch.nn as nn
    import torch.nn.functional as f


    class Net(nn.Module):

        def __init__(self):
            super(Net, self).__init__()  # initialize the base class
            self.conv1 = nn.Conv2d(1, 6, (5, 5))
            self.conv2 = nn.Conv2d(6, 16, (5, 5))
            self.fc1 = nn.Linear(16 * 5 * 5, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, 10)

        def forward(self, x):
            x = self.conv1(x)
            x = f.relu(x)
            # Max pooling over a (2, 2) window
            x = f.max_pool2d(x, (2, 2))
            x = self.conv2(x)
            x = f.relu(x)
            # If the size is a square you can only specify a single number
            x = f.max_pool2d(x, (2, 2))
            x = x.view(-1, self.num_flat_features(x))
            x = f.relu(self.fc1(x))
            x = f.relu(self.fc2(x))
            x = self.fc3(x)
            return x

        def num_flat_features(self, x):
            size = x.size()[1:]  # all dimensions except the batch dimension
            num_features = 1
            for s in size:
                num_features *= s
            return num_features


    # Create the network
    net = Net()
    params = list(net.parameters())
    print(len(params))

    # Print the size of each parameter tensor
    for param in params:
        print(param.size())

I want to understand the structure of the parameters.

However, the output seems to show that each ReLU introduces an extra parameter tensor. Why?
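One way to check which layer each tensor belongs to is to list the parameters by name with `named_parameters()` instead of by position. The sketch below is only an illustration: `LayersOnly` is a hypothetical stand-in that declares the same five layers as `Net` and omits `forward`, since the forward pass does not affect which parameters are registered.

```python
import torch.nn as nn


class LayersOnly(nn.Module):
    """Hypothetical stand-in for Net: same layers, no forward pass."""

    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 6, (5, 5))
        self.conv2 = nn.Conv2d(6, 16, (5, 5))
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)


net = LayersOnly()

# Map each registered parameter name to its shape.
param_shapes = {name: tuple(p.shape) for name, p in net.named_parameters()}

for name, shape in param_shapes.items():
    print(name, shape)
```

With names attached, every tensor is a `weight` or `bias` of one of the five layers. `f.relu` is a plain function with no learnable state, so the 1-D tensors in the positional listing would be the layers' bias vectors rather than anything added by ReLU.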