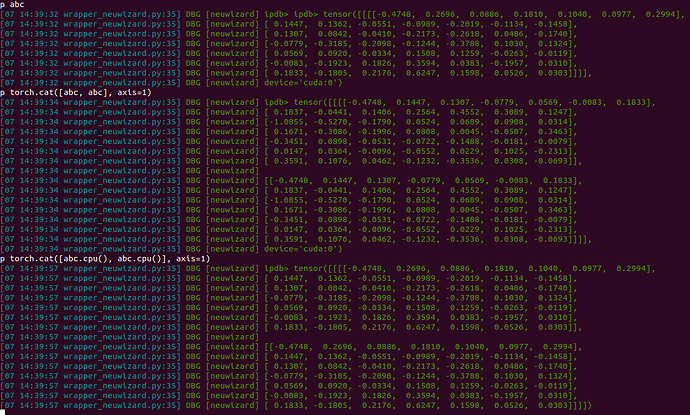

While running inference with a pretrained PyTorch model, I found a strange phenomenon: torch.cat may return the wrong result, as shown in the figure.

When abc is a tensor on the GPU, torch.cat([abc,abc],axis=1) sometimes produces incorrect values.

But when abc is moved to the CPU, the result is correct.

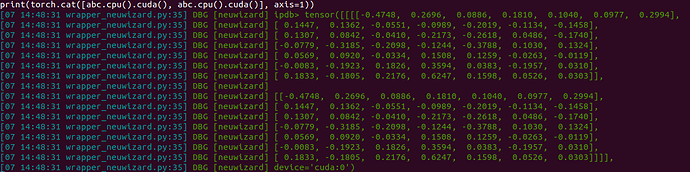

And when I use abc.cpu().cuda(), the result is correct again.

I can’t understand why this happens.
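A quick way to check the comparison described above is to compute torch.cat on the tensor's own device and compare it against a CPU reference. This is only a sketch: the real abc from the project is not shown, so the input below is a hypothetical permuted (non-contiguous) tensor, and cat_matches_cpu is a made-up helper name. The script falls back to the CPU when no GPU is available, in which case the check is trivially true.

```python
import torch


def cat_matches_cpu(x: torch.Tensor, dim: int = 1) -> bool:
    """Return True if torch.cat([x, x], dim) on x's device matches a CPU reference."""
    # Reference result computed on the CPU from the same data.
    ref = torch.cat([x.cpu(), x.cpu()], dim=dim)
    # Result computed on x's own device (the GPU if x lives there), moved back for comparison.
    out = torch.cat([x, x], dim=dim).cpu()
    return torch.equal(out, ref)


device = "cuda" if torch.cuda.is_available() else "cpu"

# Hypothetical stand-in for `abc`: a permuted view, which is not contiguous in memory.
abc = (
    torch.arange(2 * 3 * 4, dtype=torch.float32, device=device)
    .reshape(2, 3, 4)
    .permute(0, 2, 1)
)

print("device:", device, "| contiguous:", abc.is_contiguous())
print("torch.cat matches CPU reference:", cat_matches_cpu(abc))
```

On an unaffected PyTorch build this should print True; since the original bug could not be reproduced in isolation, a True here on an affected version does not rule it out.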

Could you post a minimal, executable code snippet as well as the output of python -m torch.utils.collect_env, please?

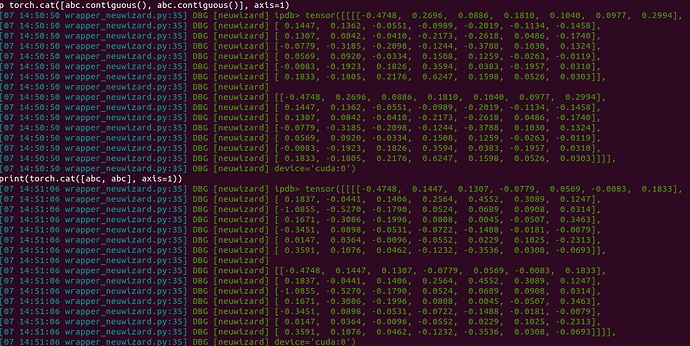

Sorry, I can’t reproduce this error with a minimal, executable snippet, since it comes from a large project. However, I suspect the error is related to non-contiguous memory, since the code calls tensor.permute in several places.

I then tested tensor.contiguous() and found that it fixes the issue, as shown in the figure.
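To illustrate the contiguity point: permute returns a view with reordered strides rather than a copy, and .contiguous() materializes a copy in standard row-major layout. The helper cat_contiguous below is hypothetical; it just wraps the workaround found above (calling .contiguous() on every input before torch.cat) at the cost of an extra copy per non-contiguous input.

```python
import torch


def cat_contiguous(tensors, dim=0):
    """Hypothetical helper: make every input contiguous before concatenating.

    .contiguous() returns the tensor itself if it is already contiguous,
    and otherwise returns a row-major copy.
    """
    return torch.cat([t.contiguous() for t in tensors], dim=dim)


x = torch.arange(24, dtype=torch.float32).reshape(2, 3, 4)  # strides (12, 4, 1)
y = x.permute(0, 2, 1)  # a view sharing x's storage, shape (2, 4, 3)
print(y.shape, y.stride(), y.is_contiguous())  # torch.Size([2, 4, 3]) (12, 1, 4) False

z = y.contiguous()  # a new tensor laid out row-major for shape (2, 4, 3)
print(z.stride(), z.is_contiguous())  # (12, 3, 1) True

out = cat_contiguous([y, y], dim=1)
print(out.shape)  # torch.Size([2, 8, 3])
```

On a correct build, cat_contiguous and plain torch.cat should give identical values; the wrapper only matters on a version where the non-contiguous path misbehaves.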

The environment information is as follows:

PyTorch version: 1.8.1+cu102

Is debug build: False

CUDA used to build PyTorch: 10.2

ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.3 LTS (x86_64)

GCC version: (Ubuntu 7.4.0-1ubuntu1~18.04.1) 7.4.0

Clang version: Could not collect

CMake version: version 3.10.2

Python version: 3.6 (64-bit runtime)

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: GeForce RTX 2080 Ti

Nvidia driver version: 460.32.03

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Versions of relevant libraries:

[pip3] numpy==1.18.1

[pip3] pytorchcv==0.0.66

[pip3] torch==1.8.1

[pip3] torchfile==0.1.0

[pip3] torchnet==0.0.4

[pip3] torchvision==0.9.1

[conda] numpy 1.18.1 pypi_0 pypi

[conda] pytorchcv 0.0.66 pypi_0 pypi

[conda] torch 1.8.1 pypi_0 pypi

[conda] torchfile 0.1.0 pypi_0 pypi

[conda] torchnet 0.0.4 pypi_0 pypi

[conda] torchvision 0.9.1 pypi_0 pypi

Could you update PyTorch to the latest stable or nightly release and check if you are still hitting the issue?

I tested the same configuration with PyTorch 1.10.2 and it works correctly; the bug is gone.

It seems this issue was fixed in a later release. Could you help me find out in which PyTorch version it was fixed? Thanks a lot!
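Until the exact fixing release is known, one option is to keep the .contiguous() workaround only on older installs. This is a sketch under an explicit assumption: 1.10.2 is just the first version confirmed working in this thread (1.8.1 failed), so the real fix may have landed earlier, and the threshold and helper names below are hypothetical. The version string is parsed numerically because plain string comparison would order "1.8.1" after "1.10".

```python
import re

import torch


def torch_version_tuple(version: str = torch.__version__) -> tuple:
    """Parse e.g. "1.8.1+cu102" -> (1, 8, 1); a missing patch number becomes 0."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    return tuple(int(g) if g is not None else 0 for g in match.groups())


# Assumption: the first version reported as working in this thread, not a confirmed fix version.
VERIFIED_OK = (1, 10, 2)


def cat_maybe_contiguous(tensors, dim=0):
    """Hypothetical helper: apply the .contiguous() workaround only on older PyTorch builds."""
    if torch_version_tuple() < VERIFIED_OK:
        tensors = [t.contiguous() for t in tensors]
    return torch.cat(tensors, dim=dim)


# torch.version.cuda is None on CPU-only builds.
print("PyTorch", torch.__version__, "| CUDA", torch.version.cuda)
```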