Hi PyTorch community,

TL;DR

Sometimes the torch.Size returned by .shape or .size() contains elements of type torch.Tensor instead of int. Why?

```python
# x: torch.Tensor of shape [1, 40, 32, 32]
tsize = x.shape[2:]

print(tsize)
# > torch.Size([32, 32])

print(tsize[0])
# > tensor(32)

print(type(tsize[0]))
# > <class 'torch.Tensor'>
```

Details:

I am trying to trace the graph of a LiteHRNet model and quantize it to run on Vitis AI hardware. The problem seems to come from the following snippet, which uses torch.nn.functional.adaptive_avg_pool2d:

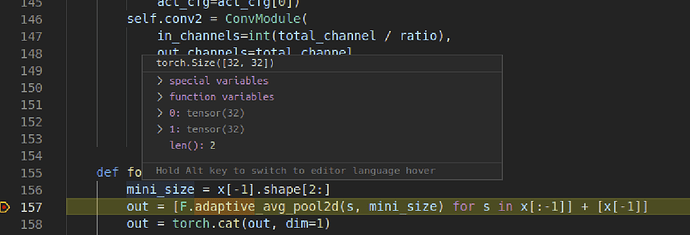

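```python
import torch
import torch.nn.functional as F

def forward(self, x):
    # x is a list of feature maps; the last one has the smallest spatial size
    mini_size = x[-1].shape[2:]
    # pool every other branch down to that size, then concatenate along channels
    out = [F.adaptive_avg_pool2d(s, mini_size) for s in x[:-1]] + [x[-1]]
    out = torch.cat(out, dim=1)
    # ... (rest of forward omitted)
```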

I noticed that for some of the tensors, x.shape or x.size() returns a torch.Size whose elements are tensor(32), tensor(32) instead of the ints 32, 32.

The behavior gets weirder in the debugger. When I put a breakpoint on the line that computes the shape and then step over it, I get the expected torch.Size([32, 32]) with int elements.

But if I break at the adaptive_avg_pool2d line instead, mini_size contains tensor(32), tensor(32), i.e. 0-dim integer tensors rather than ints.
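A minimal sketch to check whether the tracer is involved, assuming the Vitis AI flow traces the model with torch.jit.trace (I haven't confirmed which tracer it uses internally). The function below records, on every call, whether tracing is active (torch.jit.is_tracing()) and what type x.shape[2] has. The names fn and seen are only for illustration.

```python
import torch

seen = []  # (tracer active?, type of x.shape[2]) for every call to fn()


def fn(x):
    size = x.shape[2:]
    seen.append((torch.jit.is_tracing(), type(size[0])))
    return x * 2


x = torch.randn(1, 40, 32, 32)
fn(x)                                      # ordinary eager call
torch.jit.trace(fn, x, check_trace=False)  # fn() runs once more, under the tracer
print(seen)
```

If tracing is the cause, the eager call should record (False, int) and the traced call (True, torch.Tensor). That would also explain why a breakpoint can show ints on one hit and tensors on another, if the same forward runs both eagerly and under the tracer.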

(Context) This causes problems downstream when converting PyTorch modules and ops to the ones supported by Vitis AI, whose adaptive_avg_pool2d expects output_size as ints.
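If the target really needs plain ints, one possible workaround (a sketch, not tested against Vitis AI) is to convert the size elements with int() before calling adaptive_avg_pool2d. Under torch.jit.trace this bakes the current spatial size into the graph as a constant, so it assumes the deployed input resolution is fixed. PoolAndConcat is a hypothetical stand-in for the LiteHRNet block, and the input is passed as a tuple of feature maps.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PoolAndConcat(nn.Module):
    """Hypothetical stand-in for the LiteHRNet fusion step in the snippet above."""

    def forward(self, x):
        # x: tuple of feature maps, the last one being the smallest.
        # int() turns each size element into a plain Python int. Under
        # torch.jit.trace the value is recorded as a constant (PyTorch emits a
        # TracerWarning), so the trace is only valid for this input resolution.
        mini_size = [int(s) for s in x[-1].shape[2:]]
        out = [F.adaptive_avg_pool2d(s, mini_size) for s in x[:-1]] + [x[-1]]
        return torch.cat(out, dim=1)


# Usage: two branches with 8 and 16 channels; the second one is the smallest.
feats = (torch.randn(1, 8, 64, 64), torch.randn(1, 16, 32, 32))
traced = torch.jit.trace(PoolAndConcat(), (feats,))
print(traced(feats).shape)
```

The trade-off is that the traced graph no longer adapts to other input sizes, which is usually acceptable for a fixed-resolution hardware deployment but worth keeping in mind.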