Hi - The 2D convolution in PyTorch has the default value of dilation set to 1. This means I have to use dilation. Why is it set up this way? If I want to convolve an image with a [3 x 3] kernel, the default dilation setting makes the kernel effectively a [5 x 5] one. Is there any way to use a kernel without dilation?

Hi,

It’s not true.

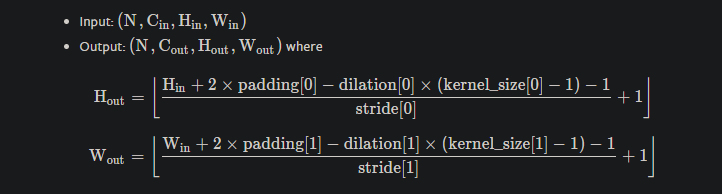

You may want to check the formulation in Conv2d — PyTorch 1.6.0 documentation.

Also, this is the convention as far as I know: TF, Keras, etc. all use conv layers the same way PyTorch does, since it should match the mathematical formulation of convolution.

Bests

Hi @Nikronic - Thanks for your reply. I’m aware of the convolutional convention. As per the PyTorch documentation, dilation adds spaces between the kernel elements, so it effectively inflates the kernel size.

Getting back to my main point, is there any way to convolve an image without dilation in PyTorch? In some of the early ConvNet papers (specifically 2012-2016), the authors just used plain convolution. I want to recreate some of those papers, which is why I need to convolve an image without dilation. Any help will be appreciated.

Thanks,

Tomojit

Apparently, I expressed the idea badly.

The default value, dilation=1, means no dilation.
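A quick way to check this yourself is to compare output shapes. The snippet below is only a small sketch: the 7 x 7 input size and the single input/output channel are arbitrary choices of mine for illustration. If the default were dilating a [3 x 3] kernel to [5 x 5], the default layer would give the same output size as the `dilation=2` layer, and it does not.

```python
import torch
import torch.nn as nn

# Arbitrary example input: (batch, channels, height, width)
x = torch.randn(1, 1, 7, 7)

plain = nn.Conv2d(1, 1, kernel_size=3)                 # dilation left at its default
explicit = nn.Conv2d(1, 1, kernel_size=3, dilation=1)  # same thing, written out
dilated = nn.Conv2d(1, 1, kernel_size=3, dilation=2)   # 3x3 weights spread over a 5x5 window

plain_shape = tuple(plain(x).shape)
explicit_shape = tuple(explicit(x).shape)
dilated_shape = tuple(dilated(x).shape)

print(plain.dilation)   # (1, 1)
print(plain_shape)      # (1, 1, 5, 5): a plain 3x3 kernel shrinks 7 to 5
print(explicit_shape)   # (1, 1, 5, 5): identical to the default
print(dilated_shape)    # (1, 1, 3, 3): a 5x5 footprint shrinks 7 to 3
```

So the default layer behaves like an ordinary [3 x 3] convolution, and only `dilation=2` or larger spreads the weights out.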

I think this thread explains the situation clearly:

Why the default dilation value in Conv2d is 1? - PyTorch Forums

OK, it looks like dilation=1 means “no dilation”, which is a bit confusing. Anyway, if I use the default setting for dilation, then I’m not convolving the image with a dilated kernel.

For anyone having issues with this: you can see dilation as the number of steps taken between the kernel weights. So if dilation were 0, all the weights would stay at one spot.
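To make the "steps between weights" picture concrete, here is a small pure-Python sketch along one axis. The helper names `tap_offsets` and `effective_kernel_size` are made up for this illustration and are not part of PyTorch; the footprint formula `dilation * (kernel_size - 1) + 1` is the term that appears in the output-size formula in the Conv2d docs.

```python
def tap_offsets(kernel_size, dilation):
    """Input offsets (along one axis) that each kernel weight is applied to."""
    return [i * dilation for i in range(kernel_size)]


def effective_kernel_size(kernel_size, dilation):
    """Width of the input window covered by the kernel along one axis."""
    return dilation * (kernel_size - 1) + 1


print(tap_offsets(3, 1))            # [0, 1, 2] -> adjacent pixels, i.e. no dilation
print(tap_offsets(3, 2))            # [0, 2, 4] -> one pixel skipped between weights
print(tap_offsets(3, 0))            # [0, 0, 0] -> every weight on the same pixel
print(effective_kernel_size(3, 1))  # 3
print(effective_kernel_size(3, 2))  # 5
```

With a step of 1 the weights sit on neighbouring pixels, which is the plain convolution from the early papers; a [3 x 3] kernel only covers a [5 x 5] window when the step is 2.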