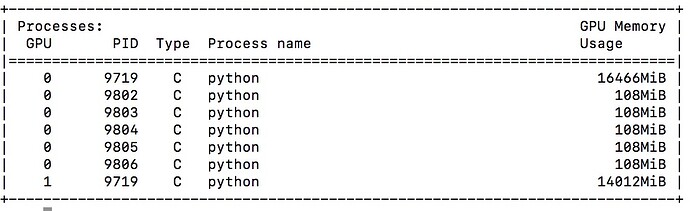

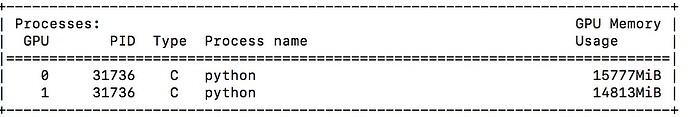

The two figures come from two machines with the same GPU, driver, PyTorch version, and code (the batch size may differ), but the GPU processes listed are different. The number of “small” processes in the first figure changes with `num_workers` in the DataLoader. The main problem is that the training program in the first figure is always several times more expensive than the one in the second. Why do these “small” processes appear in `nvidia-smi`?

I'm running into this problem too. Have you fixed it?

If your dataset returns CUDA tensors, expect each of these worker processes to take ~100 MB on the first CUDA device it sees, because each one has to create its own CUDA context.
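A minimal sketch of the pattern this implies, assuming a standard PyTorch `Dataset`/`DataLoader` setup. `CpuTensorDataset` and `run_epoch` are hypothetical names, and the toy tensors stand in for real data. The dataset hands back CPU tensors only, and the `.to(device)` copy happens in the training loop, so the worker processes have no reason to create a CUDA context.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class CpuTensorDataset(Dataset):
    """Hypothetical toy dataset: every sample stays a CPU tensor.

    Because __getitem__ never touches CUDA, the DataLoader worker
    processes never need a CUDA context of their own.
    """

    def __init__(self, n: int = 8, dim: int = 4):
        self.data = torch.arange(n * dim, dtype=torch.float32).reshape(n, dim)
        self.labels = torch.arange(n) % 2

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        # Return CPU tensors only: no .cuda() / .to("cuda") in the worker path.
        return self.data[idx], self.labels[idx]


def run_epoch(num_workers: int = 2):
    use_cuda = torch.cuda.is_available()
    device = torch.device("cuda" if use_cuda else "cpu")
    loader = DataLoader(
        CpuTensorDataset(),
        batch_size=4,
        num_workers=num_workers,
        pin_memory=use_cuda,  # page-locked host memory speeds up host-to-device copies
    )
    seen = 0
    for x, y in loader:
        # The host-to-device copy happens in the main (training) process only.
        x = x.to(device, non_blocking=True)
        y = y.to(device, non_blocking=True)
        seen += x.shape[0]
    return seen, x.device.type


if __name__ == "__main__":
    print(run_epoch())
```

With `pin_memory=True`, the pinning is done by a thread in the main process, so the copy stays fast without moving any CUDA work into the workers.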

Do you mean that my dataset returns CUDA tensors? I don't do that when creating the data; I only move the data tensors to CUDA right before they enter the network. Do you know how to fix it?
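If the dataset itself never calls `.cuda()`, something else on the worker path may still be initializing CUDA. One way to check, sketched under the assumption that the data comes through an ordinary map-style `Dataset`: wrap it so every sample also reports which worker produced it and whether CUDA was initialized in that process. `CudaInitProbe` and `workers_that_touched_cuda` are hypothetical helpers; `get_worker_info` and `torch.cuda.is_initialized` are existing PyTorch functions. The check runs inside `__getitem__`, so it may miss CUDA initialization that happens only in a custom `collate_fn`.

```python
import torch
from torch.utils.data import DataLoader, Dataset, TensorDataset, get_worker_info


class CudaInitProbe(Dataset):
    """Hypothetical wrapper: for every sample, report which worker produced it
    and whether CUDA had been initialized in that worker process."""

    def __init__(self, base: Dataset):
        self.base = base

    def __len__(self):
        return len(self.base)

    def __getitem__(self, idx):
        sample = self.base[idx]  # the real loading/transform code runs here
        info = get_worker_info()  # None when running in the main process
        worker_id = -1 if info is None else info.id
        return sample, worker_id, int(torch.cuda.is_initialized())


def workers_that_touched_cuda(dataset: Dataset, num_workers: int = 2) -> set:
    """Return the ids of DataLoader workers that had CUDA initialized."""
    loader = DataLoader(CudaInitProbe(dataset), batch_size=2, num_workers=num_workers)
    offenders = set()
    for _, worker_ids, cuda_flags in loader:
        for wid, flag in zip(worker_ids.tolist(), cuda_flags.tolist()):
            if flag:
                offenders.add(wid)
    return offenders


if __name__ == "__main__":
    toy = TensorDataset(torch.randn(6, 3))  # stand-in for the real dataset
    print(workers_that_touched_cuda(toy))
```

An empty set suggests the workers are not what creates the extra `nvidia-smi` entries.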