I have the code written for the part highlighted in blue and need to add the red part.

    import torch
    from torch import nn

    class BiDir(torch.nn.Module):
        def __init__(self, weights, emb_dim, hid_dim, rnn_num_layers=2):
            super().__init__()
            # Embedding layer using GloVe as the pretrained weights
            self.embedding = nn.Embedding.from_pretrained(weights)
            # Bidirectional GRU with rnn_num_layers stacked layers (2 by default)
            self.rnn = torch.nn.GRU(emb_dim, hid_dim, bidirectional=True, num_layers=rnn_num_layers)
            self.l1 = torch.nn.Linear(hid_dim * 2 * rnn_num_layers, 256)
            self.l2 = torch.nn.Linear(256, 2)

        def forward(self, samples):
            # Forward pass
            embedded = self.embedding(samples)
            _, last_hidden = self.rnn(embedded)
            # Concatenate the final hidden state of every layer and direction
            hidden_list = [last_hidden[i, :, :] for i in range(last_hidden.shape[0])]
            encoded = torch.cat(hidden_list, dim=1)
            # ReLU and sigmoid activation functions
            encoded = torch.nn.functional.relu(self.l1(encoded))
            # l2 already returns a tensor; wrapping it in torch.FloatTensor is not needed
            encoded = torch.sigmoid(self.l2(encoded))
            return encoded
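A quick shape check may make the concatenation step in forward easier to follow. This is only a sketch: the sizes below are made up for illustration and are not taken from the original post, and random values stand in for the embedded input.

```python
import torch

# Hypothetical sizes, chosen only for illustration.
seq_len, batch, emb_dim, hid_dim, num_layers = 7, 4, 50, 16, 2

gru = torch.nn.GRU(emb_dim, hid_dim, bidirectional=True, num_layers=num_layers)

# batch_first is False by default, so the GRU expects (seq_len, batch, emb_dim).
embedded = torch.randn(seq_len, batch, emb_dim)

_, last_hidden = gru(embedded)
# last_hidden has shape (num_layers * 2, batch, hid_dim):
# one final hidden state per layer and per direction.

hidden_list = [last_hidden[i] for i in range(last_hidden.shape[0])]
encoded = torch.cat(hidden_list, dim=1)
# encoded has shape (batch, hid_dim * 2 * num_layers),
# which is why l1 takes hid_dim * 2 * rnn_num_layers input features.

print(last_hidden.shape, encoded.shape)
```

Note that with the default batch_first=False the token indices passed to the model would need to be laid out as (seq_len, batch).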

Is this a double post, or is it related to this post?

Are you working on the same problem and where are you stuck implementing the red part?

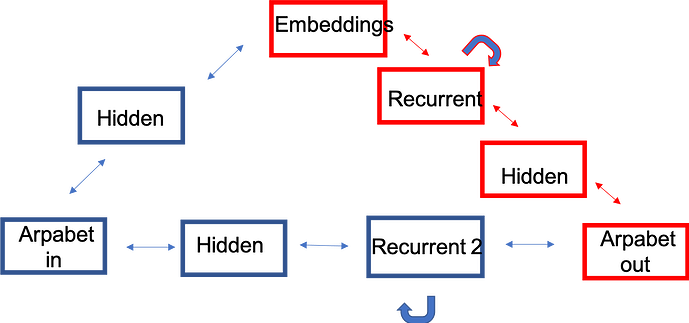

The OP is actually trying to help me out with this problem. However, the post is actually backwards (I think). The objective is to create a neural network as pictured in the original image. I was able to code the portion of the network that is outlined in red; however, I would like to add the portion in blue. My main problem is that I am so new to PyTorch that I don’t know how to actually do it. Hence my recruiting help, and finding it here ;).

To the best of my ability I created a model that utilizes word embeddings for an input and number sequences as output. The number sequences are coded in a manner in which they represent the word that corresponds to the embedding. The input and output are connected by two hidden layers, one of which is recurrent.

In the terminology used in the image, the network I have created so far looks like this:

Embeddings <-> recurrent <-> hidden <-> arpabet_out

My goal now is to add the remaining structure of the intended network, which would look like this:

Embeddings <-> hidden <-> arpabet_in AND

arpabet_in <-> hidden <-> recurrent <-> arpabet_out

Please let me know if I can provide any more information. If it would be easier to look at all of the code, it is in my original post, which you linked.

It is hard to understand what exactly you are having a problem with, as it is easy to combine two tensors, e.g.: 1) sum them, 2) torch.cat, or 3) a trainable combination, the simplest of which looks like input1.matmul(weight1) + input2.matmul(weight2).
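The three options above could be sketched as follows. The tensor sizes and the names dim_a, dim_b and out_dim are assumptions made for illustration; only input1, input2, weight1 and weight2 come from the post.

```python
import torch

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
batch, dim_a, dim_b, out_dim = 4, 8, 8, 5
input1 = torch.randn(batch, dim_a)
input2 = torch.randn(batch, dim_b)

# 1) Sum: both tensors must have the same (or broadcastable) shape.
summed = input1 + input2                            # (batch, 8)

# 2) Concatenate along the feature dimension.
concatenated = torch.cat([input1, input2], dim=1)   # (batch, 16)

# 3) Trainable combination: each input gets its own learnable weight matrix.
weight1 = torch.nn.Parameter(torch.randn(dim_a, out_dim) * 0.1)
weight2 = torch.nn.Parameter(torch.randn(dim_b, out_dim) * 0.1)
combined = input1.matmul(weight1) + input2.matmul(weight2)  # (batch, 5)

print(summed.shape, concatenated.shape, combined.shape)
```

Inside a module, option 3 is usually written with two torch.nn.Linear layers (or one Linear applied to the concatenation), so that the weights are registered as parameters automatically.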

Maybe you mean multi-stage training; in this case you can use Tensor.detach() on the output from the pre-trained part of the network to “freeze” it. You can then combine normal and detached tensors any way you need to.
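Here is a minimal sketch of what detach() does in that situation. The two Linear layers named pretrained and new_part are hypothetical stand-ins for the already-trained and the newly added parts of a network.

```python
import torch

torch.manual_seed(0)

# Hypothetical stand-ins for the two parts of the network.
pretrained = torch.nn.Linear(6, 3)  # the part that was trained in stage 1
new_part = torch.nn.Linear(6, 3)    # the part being trained in stage 2

x = torch.randn(4, 6)

# detach() cuts the autograd graph, so nothing flows back into `pretrained`.
frozen_out = pretrained(x).detach()
output = frozen_out + new_part(x)

output.sum().backward()

# Only the new part received gradients.
print(pretrained.weight.grad)            # None
print(new_part.weight.grad is not None)  # True
```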

Hm, conceptually it seems like multi-stage training might be the way to go. This is my first ever PyTorch model, and I just picked up Python in January, so what is “easy” for some is likely dependent on experience.


I trained this network in a different NN language, but am really pushing to get it to work so that I can capitalize on the large dataset GloVe provides. Do you have any recommendations for an example model that uses multi-stage training?


Sorry, no tutorial-like examples come to mind, as such models are usually big with a lot of model-specific details. But it seems this is a common question in this forum (when people ask about “freezing layers”, “combining models” and such, variants of staged training are implied), see this topic for example.

The simplest implementation would look like this (if I understood your intent correctly):

    output = red_network(input)
    if stage2:
        output = combine_tensors(output.detach(), blue_network(input))

Filtering the optimizer params or changing their requires_grad to False achieves a similar effect.

But note that in this form blue_network may be learning red_network’s errors (residuals); I don’t understand how you want to change your network loss with the blue_network addition… If you use output = blue_network(input, red_network(input)), that’s a residual network; if your blue_output and red_output must be independently useful, you want “model combining” instead.
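For the requires_grad and optimizer-filtering alternative, a minimal sketch could look like the following. The two Linear layers stand in for red_network and blue_network, and the squared-output loss is a placeholder; none of this is taken from the actual model.

```python
import torch

torch.manual_seed(0)

# Hypothetical stand-ins for the two sub-networks.
red_network = torch.nn.Linear(6, 3)   # already trained in stage 1
blue_network = torch.nn.Linear(6, 3)  # to be trained in stage 2

# Freeze the red part by turning off gradients for its parameters.
for param in red_network.parameters():
    param.requires_grad = False

# Hand only the still-trainable parameters to the optimizer.
all_params = list(red_network.parameters()) + list(blue_network.parameters())
trainable = [p for p in all_params if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.1)

x = torch.randn(4, 6)
red_before = red_network.weight.detach().clone()
blue_before = blue_network.weight.detach().clone()

# Placeholder loss on the summed outputs, just to drive one update step.
loss = (red_network(x) + blue_network(x)).pow(2).mean()
optimizer.zero_grad()
loss.backward()
optimizer.step()

red_unchanged = torch.equal(red_network.weight, red_before)
blue_changed = not torch.equal(blue_network.weight, blue_before)
print(red_unchanged, blue_changed)
```

Unlike detach(), which only blocks gradients on one particular output tensor, this approach freezes the parameters themselves, so it holds everywhere the frozen module is used.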
