Update: the problem is solved.

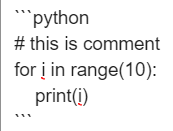

If you encounter the same issue, try calling .contiguous(), as in the example from @ptrblck.
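For anyone landing here, a minimal sketch of what that workaround might look like on the failing snippet from the question below. Where exactly `.contiguous()` is needed in the real model is an assumption on my part (here it is applied after both `transpose` calls), and `target` is random placeholder data.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

U = nn.Linear(400, 50)
final = nn.Linear(400, 50)
x = torch.randn(8, 123, 400)   # [batch, k, 400], k = 123 for illustration
target = torch.rand(8, 50)     # placeholder targets in [0, 1]

# transpose() returns a non-contiguous view; copy it into contiguous
# memory before pooling (assumed placement of the fix).
pooled = F.max_pool1d(x.transpose(1, 2).contiguous(), kernel_size=x.size(1))  # [8, 400, 1]

# Same idea for the second transpose before the elementwise product.
alpha = U.weight.mul(pooled.transpose(1, 2).contiguous())  # [8, 50, 400]
y = final.weight.mul(alpha).sum(dim=2).add(final.bias)     # [8, 50]

loss = F.binary_cross_entropy_with_logits(y, target)
loss.backward()
print(y.shape)
```

`.contiguous()` only copies when the tensor is not already contiguous, so adding it in both places should be cheap even if only one of them matters.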

Hi all,

I made a slight change to a network by adding a max_pool1d, and now I get the following error traceback:

Traceback (most recent call last):

File "../../learn/training.py", line 212, in train

loss.backward()

File "C:\Users\Veid\Anaconda3\envs\caml\lib\site-packages\torch\tensor.py", line 195, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File "C:\Users\Veid\Anaconda3\envs\caml\lib\site-packages\torch\autograd\__init__.py", line 99, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: fractional_max_pool2d_backward_out_cuda failed with error code 0

How can I fix this?

I’m new to torch, so I apologize in advance if this is a stupid question.

The original code snippet, which works:

#x shape is torch.Size([8, k, 400]) where k is not fixed

#U.weight shape is torch.Size([50, 400])

alpha = F.softmax(self.U.weight.matmul(x.transpose(1,2)), dim=2)

#alpha shape is torch.Size([8, 50, k])

m = alpha.matmul(x)

#m shape is torch.Size([8, 50, 400])

#final.weight shape is torch.Size([50, 400])

y = self.final.weight.mul(m).sum(dim=2).add(self.final.bias)

#y shape is torch.Size([8, 50])

The code snippet after the change, which fails in autograd:

#x shape is torch.Size([8, k, 400]) where k is not fixed and 8 is the batch size

#U.weight shape is torch.Size([50, 400])

x= F.max_pool1d(x.transpose(1,2), kernel_size=x.size()[1])

#after max pooling, x shape is torch.Size([8, 400, 1])

alpha = self.U.weight.mul(x.transpose(1,2))

#alpha shape is torch.Size([8, 50, 400])

#final.weight shape is torch.Size([50, 400])

y = self.final.weight.mul(alpha).sum(dim=2).add(self.final.bias)

#y shape is torch.Size([8, 50])
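To narrow down which intermediate tensor might be non-contiguous, a small check like the following could help. It is only a sketch: `describe` is a hypothetical helper, `U_weight` stands in for `self.U.weight`, and k = 123 is chosen just for illustration.

```python
import torch
import torch.nn.functional as F

def describe(name, t):
    """Print and return the shape and contiguity of a tensor."""
    info = (tuple(t.shape), t.is_contiguous())
    print(f"{name}: shape={info[0]}, contiguous={info[1]}")
    return info

x = torch.randn(8, 123, 400)       # [batch, k, 400]
U_weight = torch.randn(50, 400)    # stand-in for self.U.weight

xt = x.transpose(1, 2)             # view with permuted strides
describe("x.transpose(1, 2)", xt)

pooled = F.max_pool1d(xt, kernel_size=x.size(1))
describe("pooled", pooled)

pooled_t = pooled.transpose(1, 2)
describe("pooled.transpose(1, 2)", pooled_t)

alpha = U_weight.mul(pooled_t)     # broadcasts to [8, 50, 400]
describe("alpha", alpha)
```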

Here is runnable code that I extracted, covering the related Variable definitions and the loss computation. However, the bug cannot be reproduced in this setting.

import torch.nn as nn

import torch

import torch.nn.functional as F

from torch.nn.init import xavier_uniform_

from torch.autograd import Variable

target=Variable(torch.randn(8,50))

U = nn.Linear(400, 50)

xavier_uniform_(U.weight)

final = nn.Linear(400, 50)

xavier_uniform_(final.weight)

x = Variable(torch.randn(8,123,400))

'''

#The code snippet that works successfully

alpha = F.softmax(U.weight.matmul(x.transpose(1,2)), dim=2)

m = alpha.matmul(x)

y = final.weight.mul(m).sum(dim=2).add(final.bias)

'''

'''

#The code snippet that fails to autograd

x= F.max_pool1d(x.transpose(1,2), kernel_size=x.size()[1])

alpha = U.weight.mul(x.transpose(1,2))

y = final.weight.mul(alpha).sum(dim=2).add(final.bias)

'''

yhat= y

loss = F.binary_cross_entropy_with_logits(yhat, target)

loss.backward()

print(y.size())
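The traceback comes from a CUDA kernel, while the script above keeps everything on the CPU, which may be one reason it does not reproduce the error. Here is a hedged variant of the failing snippet that moves the modules and tensors to the GPU when one is available; the device handling is my assumption and is not taken from the original training code.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.nn.init import xavier_uniform_

# Fall back to the CPU so the script still runs without a GPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

U = nn.Linear(400, 50).to(device)
xavier_uniform_(U.weight)
final = nn.Linear(400, 50).to(device)
xavier_uniform_(final.weight)

x = torch.randn(8, 123, 400, device=device)
target = torch.randn(8, 50, device=device)

# The snippet that fails in the full model, now on `device`.
pooled = F.max_pool1d(x.transpose(1, 2), kernel_size=x.size(1))
alpha = U.weight.mul(pooled.transpose(1, 2))
y = final.weight.mul(alpha).sum(dim=2).add(final.bias)

loss = F.binary_cross_entropy_with_logits(y, target)
loss.backward()
print(device, y.size())
```

If this runs cleanly on the GPU as well, the difference is probably elsewhere in the full model, for example in how x is produced before it reaches this layer.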