Hi, I’ve trained a multi-class classification model on MNIST-like images and reached 90% accuracy on the validation set after 200 epochs. But when it comes to inference, my model predicts the images seemingly at random, even though the images are exactly the same as the ones in the validation set. Here you can find my network architecture: https://discuss.pytorch.org/t/linear-activation-function/158275

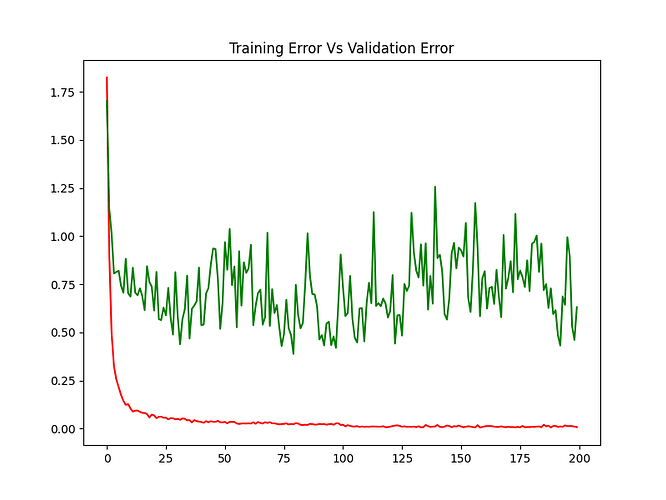

This is my training error

Here is my code:

net: Block

    import torch
    import torch.nn as nn


    class Block(nn.Module):
        # 1x1 expansion conv -> 3x3 depthwise conv -> 1x1 projection conv,
        # each followed by BatchNorm (ReLU6 after the first two).
        def __init__(self, in_channels, out_channels, exp=1, stride=1, type=''):
            super(Block, self).__init__()
            self.t = type  # only type 'A' adds the skip connection in forward()
            self.stride = stride
            self.inc, self.outc = in_channels, out_channels
            self.exp = exp
            self.blockc = nn.Sequential(
                nn.Conv2d(self.inc, self.inc * self.exp, kernel_size=1),
                nn.BatchNorm2d(self.inc * self.exp),
                nn.ReLU6(inplace=True),
                # groups == channels makes this a depthwise convolution
                nn.Conv2d(self.inc * self.exp, self.inc * self.exp, kernel_size=3,
                          groups=self.inc * self.exp, stride=self.stride, padding=1),
                nn.BatchNorm2d(self.inc * self.exp),
                nn.ReLU6(inplace=True),
                nn.Conv2d(self.inc * self.exp, self.outc, kernel_size=1),
                nn.BatchNorm2d(self.outc))

        def forward(self, x):
            out = self.blockc(x)
            if self.t == 'A':
                # residual add; needs in_channels == out_channels and stride == 1
                out = torch.add(out, x)
            return out

Overall Network

    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            # Stem: 3x3 convolution with stride 2 on single-channel input
            self.conv2d1 = nn.Sequential(
                nn.Conv2d(in_channels=1, out_channels=8, kernel_size=3, padding=1, stride=2),
                nn.BatchNorm2d(8),
                nn.ReLU6(inplace=True))
            self.stage1 = nn.Sequential(
                Block(8, 8, exp=1, stride=2, type='C'))
            self.stage2 = nn.Sequential(
                Block(8, 16, exp=2, stride=2, type='C'),
                Block(16, 16, exp=2, type='A'))
            self.stage3 = nn.Sequential(
                Block(16, 24, exp=2, stride=2, type='C'),
                Block(24, 24, exp=2, type='A'))
            self.post_block2 = nn.Sequential(
                Block(24, 32, exp=2, type='B'))
            self.gap = nn.AdaptiveAvgPool2d((1, 1))  # global average pooling
            self.drop = nn.Dropout()  # default p=0.5
            self.head = nn.Sequential(
                nn.Linear(32, 10))  # 10 output classes

        def forward(self, x):
            out = self.conv2d1(x)
            out = self.stage1(out)
            out = self.stage2(out)
            out = self.stage3(out)
            out = self.post_block2(out)
            out = self.gap(out)
            out = out.view(-1, 32)  # flatten (N, 32, 1, 1) to (N, 32)
            out = self.drop(out)
            out = self.head(out)
            return out
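To double-check the feature-map sizes, here is a small sketch that traces one spatial dimension through the strided convolutions above. It assumes 28x28 inputs (my images are MNIST-like, but the exact size is an assumption here), and the helper names `conv_out` and `trace_spatial_size` are just made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_spatial_size(size=28):
    """Follow one spatial dimension through the stride-2 3x3 convs in Model."""
    sizes = [size]
    # conv2d1 and the first Block of stage1, stage2, and stage3 each contain
    # one 3x3 convolution with stride 2 and padding 1. The 1x1 convolutions
    # and the stride-1 blocks leave the spatial size unchanged.
    for _ in range(4):
        size = conv_out(size, kernel=3, stride=2, padding=1)
        sizes.append(size)
    return sizes


print(trace_spatial_size(28))  # [28, 14, 7, 4, 2]
```

Under that assumption the last block sees a 2x2 map with 32 channels, so the global average pooling followed by `view(-1, 32)` should be shape-consistent.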

Is there anything wrong with the code?
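For context, the model contains `BatchNorm2d` and `Dropout` layers, which behave differently in training and evaluation mode, so one thing I can rule out is whether inference runs under `model.eval()`. Below is a minimal sketch of that check. `tiny`, `predict`, and the random batch are hypothetical stand-ins, not my real model, inference script, or data.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the real model: it only shares the layer types
# (BatchNorm2d, Dropout) whose behavior depends on train/eval mode.
tiny = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3, padding=1),
    nn.BatchNorm2d(8),
    nn.ReLU6(inplace=True),
    nn.AdaptiveAvgPool2d((1, 1)),
    nn.Flatten(),
    nn.Dropout(),
    nn.Linear(8, 10),
)

x = torch.randn(4, 1, 28, 28)  # placeholder batch, assuming 1x28x28 inputs


def predict(model, batch):
    """Return class indices with BatchNorm/Dropout in evaluation mode."""
    model.eval()               # use running BN statistics, disable dropout
    with torch.no_grad():      # no gradients needed at inference time
        return model(batch).argmax(dim=1)


# In train mode Dropout draws a fresh random mask on every call, so two
# passes over the same batch will generally give different logits.
tiny.train()
train_same = torch.equal(tiny(x), tiny(x))

# In eval mode the forward pass is deterministic for a fixed input.
eval_same = torch.equal(predict(tiny, x), predict(tiny, x))

print("train-mode passes identical:", train_same)
print("eval-mode passes identical:", eval_same)
```

If the predictions only look random when the model is left in training mode, that would point to the mode rather than the architecture.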

I’ve been working on this for a week, so any ideas would be very helpful. Thanks!