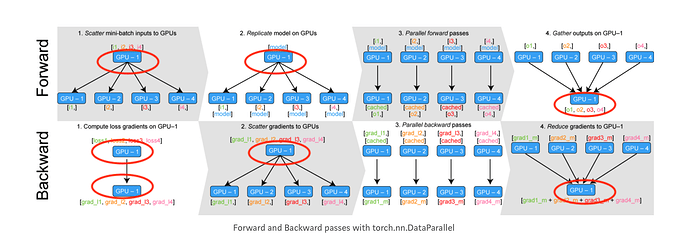

Hm, but I still want to use all available GPUs. E.g., to better illustrate what I mean, let me use this DataParallel illustration from Medium

Basically, in the ones that I circled, I want this to be e.g., GPU-2 not GPU-1. Theoretically, this should be possible by just setting `device = torch.device('cuda:1')` and then using

- `.to(device)` for the model and the data tensors,
- as well as `output_device=device` inside `DataParallel`.
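To make the setup above concrete, here is a minimal sketch of what I have in mind. The helper names `ordered_device_ids` and `wrap_model` and the toy `nn.Linear` model are made up for illustration. Passing `device_ids` with the chosen GPU listed first is also an assumption on my part: as far as I understand, `DataParallel` treats `device_ids[0]` as the device that holds the source copy of the model, so the model's device and `output_device` should match it. The sketch falls back to the CPU when fewer than two GPUs are visible.

```python
import torch
import torch.nn as nn


def ordered_device_ids(primary, num_gpus):
    """Return all GPU indices with `primary` moved to the front (hypothetical helper)."""
    return [primary] + [i for i in range(num_gpus) if i != primary]


def wrap_model(model, primary=1):
    """Wrap `model` in DataParallel so that GPU `primary` gathers the outputs.

    Returns the (possibly wrapped) model and the device the inputs should go to.
    Falls back to the plain model on the CPU if `primary` is not available.
    """
    num_gpus = torch.cuda.device_count()
    if num_gpus < 2 or primary >= num_gpus:
        return model, torch.device("cpu")

    device = torch.device("cuda:%d" % primary)
    model = model.to(device)  # source copy lives on the chosen GPU
    wrapped = nn.DataParallel(
        model,
        device_ids=ordered_device_ids(primary, num_gpus),  # assumption: primary first
        output_device=device,  # gather the results on the same GPU
    )
    return wrapped, device


# Toy model and batch, only to exercise the wrapper.
model, device = wrap_model(nn.Linear(8, 2), primary=1)
inputs = torch.randn(16, 8).to(device)
outputs = model(inputs)
print(outputs.shape, outputs.device)
```

With four GPUs, `ordered_device_ids(1, 4)` gives `[1, 0, 2, 3]`, so all GPUs are still used and only the role of the "main" GPU moves from `cuda:0` to `cuda:1`.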

PS: I am using PyTorch 0.4.1, which I think is the most recent release (apart from the release candidates).