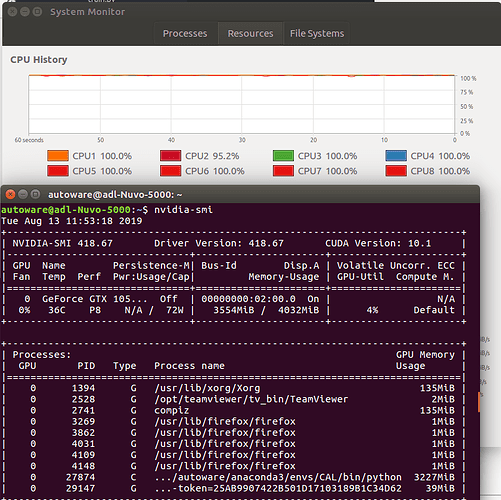

I am training a model on a GPU (80% load), but my CPU is at 100% load the whole time. Is there a command to limit CPU usage, for example by restricting the number of cores or the usage percentage?

Reduce the num_workers value you pass when you create your DataLoader.

num_workers (int, optional) – how many subprocesses to use for data loading. 0 means that the data will be loaded in the main process. (default: 0)

https://pytorch.org/docs/stable/data.html#torch.utils.data.DataLoader
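As a rough illustration of the suggestion above, here is a minimal sketch of passing a smaller num_workers to the DataLoader. The helper names capped_num_workers and make_loader, the reserve_cores parameter, and the batch size are assumptions made for this example and are not part of PyTorch. Only DataLoader and its num_workers argument come from the documentation quoted above.

```python
import os


def capped_num_workers(requested: int, reserve_cores: int = 1) -> int:
    """Clamp a requested worker count so that some CPU cores stay free.

    Hypothetical helper for illustration; not a PyTorch function.
    """
    available = os.cpu_count() or 1  # os.cpu_count() can return None
    limit = max(available - reserve_cores, 0)
    return max(0, min(requested, limit))


def make_loader(dataset, requested_workers: int = 2, batch_size: int = 32):
    """Build a DataLoader with a capped number of loading subprocesses."""
    # Imported here so the helper above can be used without PyTorch installed.
    from torch.utils.data import DataLoader

    # Each worker is a separate subprocess that uses CPU time.
    # num_workers=0 loads every batch in the main process instead.
    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=capped_num_workers(requested_workers),
    )
```

You would call make_loader(your_dataset, requested_workers=2) in place of your current DataLoader call, then lower requested_workers step by step while watching CPU load and time per epoch. Fewer workers can slow down data loading, so the right value depends on your dataset and machine.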

What model are you training?

Recurrent model… GRU.

The issue was due to the DataLoader, as @jGsch said.