Thank you so much for such a quick reply, ptrblck! This is my model definition:

import torch
import torch.nn as nn
from torchvision import models


class VGG_5(nn.Module):
    """
    The following is an implementation of the Lasagne-based binarized VGG network, but with floating point weights
    """

    def __init__(self):
        super(VGG_5, self).__init__()
        # need some pretrained help!
        graph = models.vgg11(pretrained=True)
        graph_layers = list(graph.features)
        for i, layer in enumerate(graph_layers):
            print('{}.'.format(i), layer)
        drop_rate = 0.5
        activator = nn.Tanh()
        self.feauture_exctractor = nn.Sequential(
            nn.Conv2d(in_channels=5, out_channels=64, kernel_size=3, padding=1),
            nn.MaxPool2d(kernel_size=2),
            nn.BatchNorm2d(num_features=64, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, padding=1),
            nn.MaxPool2d(kernel_size=2),
            nn.BatchNorm2d(num_features=64, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Dropout2d(drop_rate),
            # nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, padding=1),
            graph_layers[3],  # pretrained on imagenet
            nn.BatchNorm2d(num_features=128, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, padding=1),
            nn.MaxPool2d(kernel_size=2),
            nn.BatchNorm2d(num_features=128, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Dropout(drop_rate),
            # nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, padding=1),
            graph_layers[6],  # pretrained on imagenet
            nn.MaxPool2d(kernel_size=2),
            nn.BatchNorm2d(num_features=256, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, padding=1),
            nn.MaxPool2d(kernel_size=2),
            nn.BatchNorm2d(num_features=256, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Dropout2d(drop_rate),
        )
        self.fc = nn.Sequential(
            nn.Linear(in_features=256 * 2 * 2, out_features=512),
            nn.BatchNorm1d(num_features=512, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Dropout(drop_rate),
            nn.Linear(in_features=512, out_features=512),
            nn.BatchNorm1d(num_features=512, eps=1e-4, momentum=0.2),
            # nn.ReLU(),
            activator,
            nn.Linear(in_features=512, out_features=10),
            # nn.BatchNorm1d(num_features=10),
        )

    def forward(self, *input):
        x, = input
        x = self.feauture_exctractor(x)
        # flatten the 256 x 2 x 2 feature map for the fully connected layers
        x = x.view(-1, 256*2*2)
        x = self.fc(x)
        # return the raw logits together with the predicted class indices
        return x, torch.argmax(input=x, dim=1)
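As a sanity check on the hard-coded flatten size in forward, here is a minimal sketch in plain Python that walks through the conv and pool layers of the feature extractor and computes the flattened size. The 64 x 64 input resolution and the helper names (`conv2d_out`, `maxpool2d_out`, `flatten_features`, `LAYERS`) are assumptions for illustration; the input size is inferred from the 256 * 2 * 2 in the model and is not stated anywhere in this post.

```python
def conv2d_out(size, kernel_size=3, padding=1, stride=1):
    # spatial output size of a convolution; 3x3 with padding=1 keeps the size
    return (size + 2 * padding - kernel_size) // stride + 1


def maxpool2d_out(size, kernel_size=2):
    # nn.MaxPool2d uses stride = kernel_size by default and rounds down
    return (size - kernel_size) // kernel_size + 1


# order of the conv / pool layers in the feature extractor above
# (BatchNorm, Tanh and Dropout do not change the spatial size)
LAYERS = ["conv", "pool", "conv", "pool", "conv",
          "conv", "pool", "conv", "pool", "conv", "pool"]


def flatten_features(input_size, layers=LAYERS, channels=256):
    size = input_size
    for layer in layers:
        size = conv2d_out(size) if layer == "conv" else maxpool2d_out(size)
    return channels * size * size


# assumed 64 x 64 input: five 2x2 pools give 64 -> 32 -> 16 -> 8 -> 4 -> 2
print(flatten_features(64))
```

With any other input resolution the view(-1, 256*2*2) call would either fail or silently regroup samples across the batch dimension, so it is worth confirming the real input size against this arithmetic.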

So there are no F.anything() calls in the model, and here is my entire testing code (the branch that runs here is the outermost else):

@torch.no_grad()

def eval_net(**kwargs):

model = kwargs['model']

cuda = kwargs['cuda']

device = kwargs['device']

if cuda:

model.cuda(device=device)

if 'criterion' in kwargs.keys():

writer = kwargs['writer']

val_loader = kwargs['val_loader']

criterion = kwargs['criterion']

global_step = kwargs['global_step']

correct_count, total_count = 0, 0

net_loss = []

model.eval() # put in eval mode first ############################

print('evaluating with batch size = 1')

for idx, data in enumerate(val_loader):

test_x, label = data['input'], data['label']

if cuda:

test_x = test_x.cuda(device=device)

label = label.cuda(device=device)

# forward

out_x, pred = model.forward(test_x)

loss = criterion(out_x, label)

net_loss.append(loss.item())

# get accuracy metric

if kwargs['one_hot']:

batch_correct = (torch.argmax(label, dim=1).eq(pred.long())).double().sum().item()

else:

batch_correct = (label.eq(pred.long())).double().sum().item()

correct_count += batch_correct

total_count += np.float(pred.size(0))

#################################

mean_accuracy = correct_count / total_count * 100

mean_loss = np.asarray(net_loss).mean()

# summarize mean accuracy

writer.add_scalar(tag='val. loss', scalar_value=mean_loss, global_step=global_step)

writer.add_scalar(tag='val. over_all accuracy', scalar_value=mean_accuracy, global_step=global_step)

print('$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$')

print('log: validation:: total loss = {:.5f}, total accuracy = {:.5f}%'.format(mean_loss, mean_accuracy))

print('$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$')

else:

# model, images, labels, pre_model, save_dir, sum_dir, batch_size, lr, log_after, cuda

pre_model = kwargs['pre_model']

base_folder = kwargs['base_folder']

batch_size = kwargs['batch_size']

log_after = kwargs['log_after']

criterion = nn.CrossEntropyLoss()

un_confusion_meter = tnt.meter.ConfusionMeter(10, normalized=False)

confusion_meter = tnt.meter.ConfusionMeter(10, normalized=True)

model.load_state_dict(torch.load(pre_model))

print('log: resumed model {} successfully!'.format(pre_model))

_, _, test_loader = get_dataloaders(base_folder=base_folder, batch_size=batch_size)

net_accuracy, net_loss = [], []

correct_count = 0

total_count = 0

print('batch size = {}'.format(batch_size))

model.eval() # put in eval mode first

for idx, data in enumerate(test_loader):

# if idx == 1:

# break

# print(model.training)

test_x, label = data['input'], data['label']

# print(test_x)

# print(test_x.shape)

# this = test_x.numpy().squeeze(0).transpose(1,2,0)

# print(this.shape, np.min(this), np.max(this))

if cuda:

test_x = test_x.cuda(device=device)

label = label.cuda(device=device)

# forward

out_x, pred = model(test_x)

loss = criterion(out_x, label)

un_confusion_meter.add(predicted=pred, target=label)

confusion_meter.add(predicted=pred, target=label)

###############################

# pred = pred.view(-1)

# pred = pred.cpu().numpy()

# label = label.cpu().numpy()

# print(pred.shape, label.shape)

###############################

# get accuracy metric

# correct_count += np.sum((pred == label))

# print(pred, label)

# get accuracy metric

if 'one_hot' in kwargs.keys():

if kwargs['one_hot']:

batch_correct = (torch.argmax(label, dim=1).eq(pred.long())).double().sum().item()

else:

batch_correct = (label.eq(pred.long())).sum().item()

# print(label.shape, pred.shape)

# break

correct_count += batch_correct

# print(batch_correct)

total_count += np.float(batch_size)

net_loss.append(loss.item())

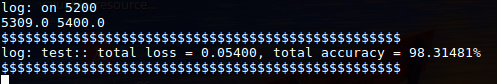

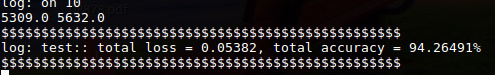

if idx % log_after == 0:

print('log: on {}'.format(idx))

#################################

mean_loss = np.asarray(net_loss).mean()

mean_accuracy = correct_count * 100 / total_count

print(correct_count, total_count)

print('$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$')

print('log: test:: total loss = {:.5f}, total accuracy = {:.5f}%'.format(mean_loss, mean_accuracy))

print('$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$$')

with open('normalized.pkl', 'wb') as this:

pkl.dump(confusion_meter.value(), this, protocol=pkl.HIGHEST_PROTOCOL)

with open('un_normalized.pkl', 'wb') as this:

pkl.dump(un_confusion_meter.value(), this, protocol=pkl.HIGHEST_PROTOCOL)

pass

pass

I can’t see any problem with this code.
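One bookkeeping detail that may be worth ruling out when accumulating accuracy over a loader: if the dataset size is not a multiple of the batch size and the loader keeps the last, smaller batch (the DataLoader default, drop_last=False), then adding the nominal batch size to the total on every step inflates the denominator. The sketch below is plain Python with made-up numbers (10 samples, batch size 4) and hypothetical helper names; it assumes the loader does not drop the last batch, which cannot be confirmed from the code shown here.

```python
def batch_sizes(num_samples, batch_size):
    """Sizes of the batches a loader yields when the last, smaller batch is kept."""
    full, rest = divmod(num_samples, batch_size)
    return [batch_size] * full + ([rest] if rest else [])


def accuracy(num_samples, batch_size, use_actual_size):
    correct_count, total_count = 0, 0.0
    for n in batch_sizes(num_samples, batch_size):
        correct_count += n  # pretend every prediction in the batch is right
        # either count the samples really seen, or the nominal batch size
        total_count += float(n if use_actual_size else batch_size)
    return correct_count * 100 / total_count


nominal = accuracy(10, 4, use_actual_size=False)  # divides by 12
actual = accuracy(10, 4, use_actual_size=True)    # divides by 10
print('nominal: {:.2f}%, actual: {:.2f}%'.format(nominal, actual))
```

The gap shrinks as the number of batches grows, so this alone would only account for a small difference in reported accuracy, not a large drop.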