I’ve created a module that contains two classes: NetDictionary, which inherits from dict, and Net, which inherits from nn.Module. The NetDictionary constructor signature and docstring are shown below:

    def __init__(self, network_count, test_tensor, total_labels, import_export_filename, **kwargs):
        """
        Initialize a dictionary of randomly structured CNNs to test various network configurations.

        args:   network_count: number of networks to generate
                test_tensor: a tensor that can be used to construct network layers
                total_labels: the number of labels being predicted for the networks
                import_export_filename: if file exists on initialization, the information in the
                                        file will be used to reconstruct a prior network.

        kwargs: optimizers: list of tuples of form (eval strings for optimizer creation, label)
                first_conv_layer_depth
                max_conv_layers
                min_conv_layers
                max_kernel_size
                min_kernel_size
                max_out_channels
                min_out_channels
                init_linear_out_features
                linear_feature_deadband
                max_layer_divisor
                min_layer_divisor
        """

When initialized, NetDictionary builds two randomized lists of eval strings, one naming module functions and one giving the parameterizations of those functions, subject to the constraints in kwargs. It passes both lists, along with the test_tensor, to the Net constructor. The Net constructor then uses Python’s eval() function to build an nn.ModuleList and a list of nn.functional calls for use in the forward pass, and the resulting network is stored in the underlying dict of NetDictionary. This process is repeated network_count * len(optimizers) times (see the docstring above).
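To make that description concrete, here is a minimal sketch of the string-generation step only. It is not the module's actual code: the function names `random_conv_specs` and `build_spec_table`, the `in_channels` and `seed` parameters, the key format, and the default limits are all hypothetical, and it assumes a fresh random structure is drawn for every (network, optimizer) pair. It uses only the standard library, so no layers are actually constructed.

```python
import random


def random_conv_specs(rng, in_channels=3,
                      min_conv_layers=1, max_conv_layers=4,
                      min_kernel_size=2, max_kernel_size=5,
                      min_out_channels=4, max_out_channels=32):
    """Return eval strings for a random stack of nn.Conv2d layers (hypothetical helper)."""
    specs = []
    for _ in range(rng.randint(min_conv_layers, max_conv_layers)):
        out_channels = rng.randint(min_out_channels, max_out_channels)
        kernel_size = rng.randint(min_kernel_size, max_kernel_size)
        specs.append(
            f"nn.Conv2d({in_channels}, {out_channels}, kernel_size={kernel_size})"
        )
        # The next layer consumes what this layer produces.
        in_channels = out_channels
    return specs


def build_spec_table(network_count, optimizers, seed=0, **kwargs):
    """Draw one random layer list per (network index, optimizer) pair.

    Assumption: each pair gets its own random structure, giving
    network_count * len(optimizers) entries in total.
    """
    rng = random.Random(seed)
    table = {}
    for i in range(network_count):
        for optimizer_string, label in optimizers:
            table[f"net_{i}_{label}"] = {
                "layers": random_conv_specs(rng, **kwargs),
                "optimizer": optimizer_string,
            }
    return table


# Illustrative optimizer tuples of the form (eval string, label).
optimizers = [
    ("optim.SGD(net.parameters(), lr=0.01)", "sgd"),
    ("optim.Adam(net.parameters())", "adam"),
]
table = build_spec_table(3, optimizers, seed=1, min_conv_layers=2, max_conv_layers=3)
print(len(table))
```

With `from torch import nn` in scope, each layer string could then be passed to eval() to produce the corresponding module.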

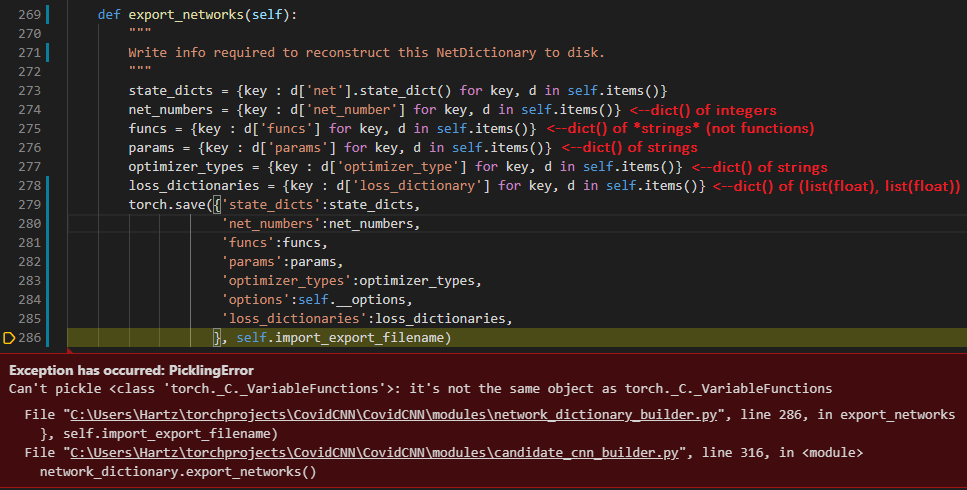

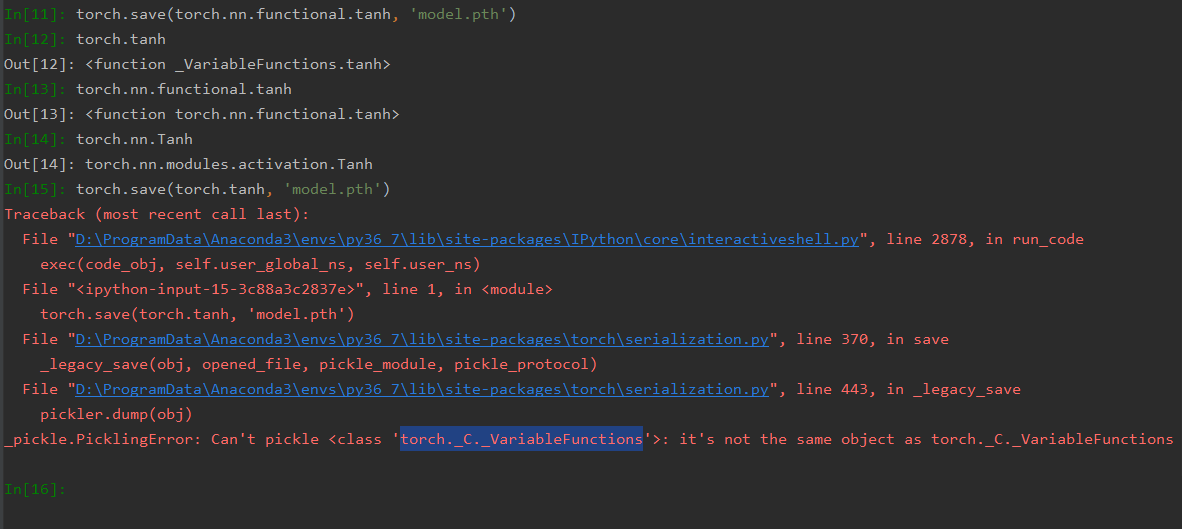

Perhaps surprisingly, all of that works like a charm. I’ve generated a set of 300 randomized networks with various optimizers, then trained and validated them against my dataset, recording a loss dictionary along the way. The problem comes AFTER training and validation, when I call a NetDictionary method that attempts to call torch.save() on a dictionary containing the state_dict() of every Net in NetDictionary, along with some additional objects containing nothing but native Python types, as shown here alongside the error:

I’ve also tried exporting the dict back to my main Python script and pickling it there, but the same issue occurs. I’m currently working around it, but in the long run, I’ll HAVE to be able to save these networks to disk. Any advice would be appreciated.
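Since torch.save() relies on pickle, one way to narrow down which entry is failing is to try pickling each piece of the dictionary separately. The following is a minimal sketch using only the standard library; `find_unpicklable` and the example `payload` are hypothetical and are not part of the module described above.

```python
import pickle


def find_unpicklable(obj, path="root"):
    """Return (path, error) pairs for the innermost values that fail to pickle.

    Hypothetical debugging helper: it descends into dicts, lists and tuples,
    and reports anything else that fails as a single leaf.
    """
    try:
        pickle.dumps(obj)
        return []  # this whole subtree pickles cleanly
    except Exception as exc:
        failures = []
        if isinstance(obj, dict):
            for key, value in obj.items():
                failures.extend(find_unpicklable(value, f"{path}[{key!r}]"))
        elif isinstance(obj, (list, tuple)):
            for index, value in enumerate(obj):
                failures.extend(find_unpicklable(value, f"{path}[{index}]"))
        if not failures:
            # No child explains the failure, so report this object itself.
            failures.append((path, f"{type(exc).__name__}: {exc}"))
        return failures


# Illustrative payload: plain Python data plus one value that cannot be pickled.
payload = {
    "losses": {"net_0": [0.5, 0.4, 0.3]},
    "bad": {"fn": lambda x: x},
}
failures = find_unpicklable(payload)
for failing_path, error in failures:
    print(failing_path, "->", error)
```

Running the same helper on the dictionary passed to torch.save() should point at the specific key whose value cannot be serialized, which is more informative than the top-level traceback alone.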

NOTE: I’m not a Python expert, so any advice on how to provide an additional stack trace or other debug information would be appreciated as well. I’m using VS Code via Anaconda, and an export of my environment is shown below for reference. The source code for the module can be found here.

    name: torch
    channels:
      - defaults
    dependencies:
      - _pytorch_select=1.1.0=cpu
      - astroid=2.4.2=py37_0
      - attrs=19.3.0=py_0
      - backcall=0.1.0=py37_0
      - blas=1.0=mkl
      - bleach=3.1.4=py_0
      - ca-certificates=2020.6.24=0
      - certifi=2020.6.20=py37_0
      - cffi=1.14.0=py37h7a1dbc1_0
      - colorama=0.4.3=py_0
      - console_shortcut=0.1.1=4
      - cycler=0.10.0=py37_0
      - decorator=4.4.2=py_0
      - defusedxml=0.6.0=py_0
      - entrypoints=0.3=py37_0
      - freetype=2.9.1=ha9979f8_1
      - icc_rt=2019.0.0=h0cc432a_1
      - icu=58.2=ha925a31_3
      - imageio=2.8.0=py_0
      - importlib_metadata=1.5.0=py37_0
      - intel-openmp=2020.1=216
      - ipykernel=5.1.4=py37h39e3cac_0
      - ipython=7.13.0=py37h5ca1d4c_0
      - ipython_genutils=0.2.0=py37_0
      - ipywidgets=7.5.1=py_0
      - isort=4.3.21=py37_0
      - jedi=0.17.0=py37_0
      - jinja2=2.11.2=py_0
      - joblib=0.15.1=py_0
      - jpeg=9b=hb83a4c4_2
      - jsonschema=3.2.0=py37_0
      - jupyter_client=6.1.3=py_0
      - jupyter_core=4.6.3=py37_0
      - kiwisolver=1.2.0=py37h74a9793_0
      - lazy-object-proxy=1.4.3=py37he774522_0
      - libpng=1.6.37=h2a8f88b_0
      - libsodium=1.0.16=h9d3ae62_0
      - libtiff=4.1.0=h56a325e_0
      - m2w64-gcc-libgfortran=5.3.0=6
      - m2w64-gcc-libs=5.3.0=7
      - m2w64-gcc-libs-core=5.3.0=7
      - m2w64-gmp=6.1.0=2
      - m2w64-libwinpthread-git=5.0.0.4634.697f757=2
      - markupsafe=1.1.1=py37he774522_0
      - matplotlib=3.1.3=py37_0
      - matplotlib-base=3.1.3=py37h64f37c6_0
      - mccabe=0.6.1=py37_1
      - mistune=0.8.4=py37he774522_0
      - mkl=2020.1=216
      - mkl-service=2.3.0=py37hb782905_0
      - mkl_fft=1.0.15=py37h14836fe_0
      - mkl_random=1.1.0=py37h675688f_0
      - msys2-conda-epoch=20160418=1
      - nbconvert=5.6.1=py37_0
      - nbformat=5.0.6=py_0
      - ninja=1.9.0=py37h74a9793_0
      - notebook=6.0.3=py37_0
      - numpy=1.18.1=py37h93ca92e_0
      - numpy-base=1.18.1=py37hc3f5095_1
      - olefile=0.46=py37_0
      - openssl=1.1.1g=he774522_0
      - pandas=1.0.1=py37h47e9c7a_0
      - pandoc=2.2.3.2=0
      - pandocfilters=1.4.2=py37_1
      - parso=0.7.0=py_0
      - pickleshare=0.7.5=py37_0
      - pillow=7.0.0=py37hcc1f983_0
      - prometheus_client=0.7.1=py_0
      - prompt-toolkit=3.0.4=py_0
      - prompt_toolkit=3.0.4=0
      - pycparser=2.20=py_0
      - pygments=2.6.1=py_0
      - pylint=2.5.3=py37_0
      - pyodbc=4.0.30=py37ha925a31_0
      - pyparsing=2.4.7=py_0
      - pyqt=5.9.2=py37h6538335_2
      - pyrsistent=0.16.0=py37he774522_0
      - python=3.7.6=h60c2a47_2
      - python-dateutil=2.8.1=py_0
      - pytz=2020.1=py_0
      - pywin32=227=py37he774522_1
      - pywinpty=0.5.7=py37_0
      - pyzmq=18.1.1=py37ha925a31_0
      - qt=5.9.7=vc14h73c81de_0
      - scikit-learn=0.22.1=py37h6288b17_0
      - scipy=1.4.1=py37h9439919_0
      - seaborn=0.10.0=py_0
      - send2trash=1.5.0=py37_0
      - setuptools=46.4.0=py37_0
      - sip=4.19.8=py37h6538335_0
      - six=1.14.0=py37_0
      - sqlite=3.31.1=h2a8f88b_1
      - terminado=0.8.3=py37_0
      - testpath=0.4.4=py_0
      - tk=8.6.8=hfa6e2cd_0
      - toml=0.10.1=py_0
      - tornado=6.0.4=py37he774522_1
      - tqdm=4.46.0=py_0
      - traitlets=4.3.3=py37_0
      - typed-ast=1.4.1=py37he774522_0
      - vc=14.1=h0510ff6_4
      - vs2015_runtime=14.16.27012=hf0eaf9b_2
      - wcwidth=0.1.9=py_0
      - webencodings=0.5.1=py37_1
      - wheel=0.34.2=py37_0
      - widgetsnbextension=3.5.1=py37_0
      - wincertstore=0.2=py37_0
      - winpty=0.4.3=4
      - wrapt=1.11.2=py37he774522_0
      - xz=5.2.5=h62dcd97_0
      - zeromq=4.3.1=h33f27b4_3
      - zipp=3.1.0=py_0
      - zlib=1.2.11=h62dcd97_4
      - zstd=1.3.7=h508b16e_0
      - pip:
        - future==0.18.2
        - hamiltorch==0.3.1.dev1
        - pip==20.1.1
        - termcolor==1.1.0
        - torch==1.5.0+cpu
        - torchsummary==1.5.1
        - torchvision==0.6.0+cpu

Thanks,

Sam H.