Hi,

I am trying to train an existing neural network from a published paper on a custom dataset.

However, while training it I am getting NaN predictions before the first batch of training (batch size = 32) has even completed.
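Because the NaNs appear on the very first batch, one quick check is whether the input data itself already contains NaN or inf values. The sketch below uses a hypothetical helper, `assert_finite`, and a random batch named `points` whose shape `(32, 3, 2500)` is assumed purely for illustration; substitute a real batch from your DataLoader.

```python
import torch

def assert_finite(name: str, t: torch.Tensor) -> None:
    """Raise if tensor `t` contains NaN or +/-inf (hypothetical helper)."""
    if not torch.isfinite(t).all():
        n_nan = torch.isnan(t).sum().item()
        n_inf = torch.isinf(t).sum().item()
        raise ValueError(f"{name}: {n_nan} NaN and {n_inf} inf values")

# Illustrative point-cloud batch: (batch, 3 coordinates, n_points); shape is assumed.
points = torch.randn(32, 3, 2500)
assert_finite("points", points)  # passes: randn produces only finite values

# Plant a single NaN to show what a corrupted sample would report.
points[0, 0, 0] = float("nan")
try:
    assert_finite("points", points)
except ValueError as e:
    print(e)  # points: 1 NaN and 0 inf values
```

Running this check on every batch (or once over the whole dataset) before the forward pass rules the data in or out as the source of the NaNs.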

I googled the error, came across multiple posts on this forum, and tried a few things:

- Reducing the learning rate (the default was 0.001; I reduced it to 0.0001)

- Reducing the batch size from 32 to 10

- Using `torch.autograd.detect_anomaly()`
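For reference, a minimal sketch of wrapping one training step in `torch.autograd.detect_anomaly()`. It uses a tiny hypothetical `nn.Linear` classifier, not the paper's model, and plants a NaN in one weight on purpose so the NaN already exists before `log_softmax`. The backward pass then fails at the log-softmax node with the same kind of error as in the traceback (newer PyTorch versions name it `LogSoftmaxBackward0`), which illustrates that the reported node is where the NaN was first detected during backward, not necessarily where it was produced.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Tiny stand-in classifier (hypothetical; not the model from the post).
model = nn.Linear(4, 3)
x = torch.randn(8, 4)
target = torch.randint(0, 3, (8,))

# Simulate a NaN that exists *before* log_softmax, e.g. a corrupted weight.
with torch.no_grad():
    model.weight[0, 0] = float("nan")

error_message = None
# detect_anomaly() records forward tracebacks and makes every backward function
# check its outputs for NaN; it is slow, so enable it only while debugging.
with torch.autograd.detect_anomaly():
    pred = F.log_softmax(model(x), dim=1)  # NaN already present in the forward pass
    loss = F.nll_loss(pred, target)
    try:
        loss.backward()
    except RuntimeError as e:
        error_message = str(e)

print(error_message)
```

Here `NllLossBackward0` produces finite gradients, but the log-softmax backward multiplies by `exp(output)`, so the NaN already sitting in its saved output surfaces there first.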

With `torch.autograd.detect_anomaly()` enabled, I got the following error:

Warning: Traceback of forward call that caused the error:

File "train_classification.py", line 126, in <module>

pred, trans, trans_feat = classifier(points)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__

result = self.forward(*input, **kwargs)

File "/content/mydrive/My Drive/Projects/pointnet/pointnet.pytorch/pointnet/model.py", line 147, in forward

return F.log_softmax(x, dim=1), trans, trans_feat

File "/usr/local/lib/python3.6/dist-packages/torch/nn/functional.py", line 1317, in log_softmax

ret = input.log_softmax(dim)

(print_stack at /pytorch/torch/csrc/autograd/python_anomaly_mode.cpp:57)

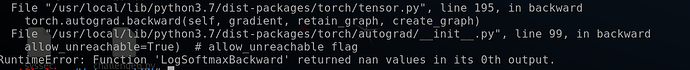

Traceback (most recent call last):

File "train_classification.py", line 133, in <module>

loss.backward()

File "/usr/local/lib/python3.6/dist-packages/torch/tensor.py", line 195, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph)

File "/usr/local/lib/python3.6/dist-packages/torch/autograd/__init__.py", line 99, in backward

allow_unreachable=True) # allow_unreachable flag

RuntimeError: Function 'LogSoftmaxBackward' returned nan values in its 0th output.

If I am interpreting this correctly, the error seems to come from the log-softmax layer, `F.log_softmax()`.
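Since the predictions are already NaN in the forward pass, it may help to find the first layer whose output stops being finite. The sketch below is a hypothetical helper, `nonfinite_modules`, that registers a forward hook on every submodule and records, in completion order, the names of modules whose tensor output contains NaN or inf. The `nn.Sequential` network is a stand-in with a NaN planted on purpose.

```python
import torch
import torch.nn as nn

def nonfinite_modules(model: nn.Module, inputs: torch.Tensor) -> list:
    """Run one forward pass and return, in completion order, the names of
    submodules whose output contains NaN or inf (hypothetical helper)."""
    bad = []
    handles = []

    def make_hook(name):
        def hook(module, inp, out):
            # Only plain tensor outputs are checked; tuple outputs are skipped.
            if isinstance(out, torch.Tensor) and not torch.isfinite(out).all():
                bad.append(name)
        return hook

    for name, module in model.named_modules():
        if name:  # skip the root module itself (its name is "")
            handles.append(module.register_forward_hook(make_hook(name)))
    try:
        # Note: in train mode BatchNorm still updates running stats under no_grad;
        # call model.eval() first if that matters for your debugging run.
        with torch.no_grad():
            model(inputs)
    finally:
        for h in handles:
            h.remove()  # always detach the debugging hooks
    return bad

# Stand-in network (hypothetical) with a NaN planted in the last layer.
net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 3))
with torch.no_grad():
    net[2].weight[0, 0] = float("nan")

print(nonfinite_modules(net, torch.randn(2, 4)))  # ['2']
```

Forward hooks fire when a module finishes, so a container is listed after its children and the first entry is the most specific offending module. The top-level model in the traceback returns a tuple (`F.log_softmax(x, dim=1), trans, trans_feat`), which this sketch does not inspect, but its submodules are still checked.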

Now I am stuck and do not know how to proceed. Can someone help me with this?