I am trying to use 3 GPUs on a single machine with `nn.DataParallel` in the following way:

    import torch
    import torch.nn as nn

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    if torch.cuda.device_count() > 1:
        print("Let's use", torch.cuda.device_count(), "GPUs!")
        model_transfer = nn.DataParallel(model_transfer, device_ids=range(torch.cuda.device_count()))

    model_transfer.to(device)

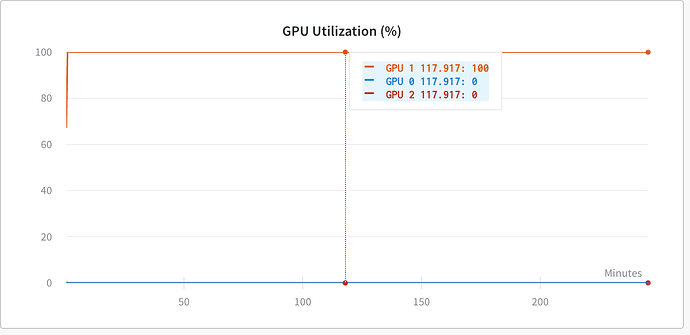

I am getting uneven GPU utilization: GPU 1 is at 100% and the rest are at 0%.

What am I doing wrong?
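One way to narrow this down is to check how each batch is actually split across devices. The following is only a diagnostic sketch, not part of the original training code: `ShapeLogger`, the `seen` list, and the `nn.Linear` stand-in for `model_transfer` are hypothetical names introduced for illustration.

```python
import torch
import torch.nn as nn

seen = []  # (device, chunk size) pairs recorded by each forward call


class ShapeLogger(nn.Module):
    """Hypothetical wrapper that records where each chunk of the batch is processed."""

    def __init__(self, inner):
        super().__init__()
        self.inner = inner

    def forward(self, x):
        # Each DataParallel replica calls forward with its own slice of the batch.
        seen.append((str(x.device), x.size(0)))
        return self.inner(x)


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# nn.Linear stands in for model_transfer (an assumption for illustration).
model = nn.DataParallel(ShapeLogger(nn.Linear(8, 3))).to(device)

batch = torch.randn(12, 8).to(device)
out = model(batch)

print("chunks:", sorted(seen))
print("output:", tuple(out.shape), out.device)
```

With several GPUs visible, `seen` should hold one entry per device that received part of the batch. If only one entry appears, for example because the batch is smaller than the number of GPUs, the other devices get no forward work.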

GPU memory utilization is the same on all of them.

On searching further, I came across this answer: "Uneven GPU utilization during training backpropagation", post #14 by colllin.

The thing is, they are using `CriterionParallel(loss)` with a custom class. How do I do the same with a normal dataloader class?
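For context, a minimal sketch of how a loss wrapper of that kind might be combined with an ordinary `DataLoader` loop is given here. It is an assumption about the general idea (wrapping the criterion in `nn.DataParallel` so the loss is computed per replica), not the exact code from the linked answer. The `CriterionParallel` body, the toy `nn.Linear` model, and the random `TensorDataset` are all illustrative stand-ins.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


class CriterionParallel(nn.Module):
    """Hypothetical wrapper: computes the loss per replica and averages the results."""

    def __init__(self, criterion):
        super().__init__()
        self.criterion = nn.DataParallel(criterion)

    def forward(self, outputs, targets):
        # With several GPUs each replica returns one loss value; mean() reduces
        # them to a scalar. On CPU or a single GPU mean() leaves the scalar as is.
        return self.criterion(outputs, targets).mean()


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Toy stand-ins for model_transfer and the real dataset (assumptions).
model = nn.DataParallel(nn.Linear(8, 3)).to(device)
criterion = CriterionParallel(nn.CrossEntropyLoss()).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

dataset = TensorDataset(torch.randn(64, 8), torch.randint(0, 3, (64,)))
loader = DataLoader(dataset, batch_size=16, shuffle=True)

losses = []
for inputs, targets in loader:
    inputs, targets = inputs.to(device), targets.to(device)
    optimizer.zero_grad()
    loss = criterion(model(inputs), targets)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```

The `DataLoader` itself needs no changes in this sketch; only the criterion is wrapped. Note that averaging per-replica losses matches the plain batch mean only when every replica receives the same number of samples.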