Hello everyone,

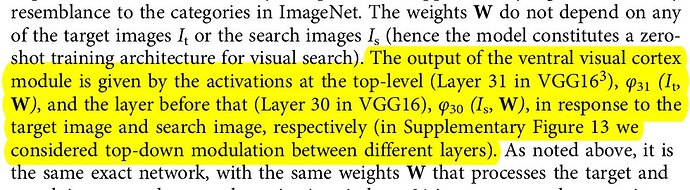

I’m new to torch/PyTorch, and I’m currently trying to translate a script in Lua + torch into Python + PyTorch. The script in question implements a visual search model from a paper, and it can be found here.

The model that’s used is Caffe VGG16, but it’s loaded through torch.

Since it’s visual search, two different networks are used: one for the stimuli (the image to be explored) and one for the target (the object to be found). They’re very similar: the target model only has one extra layer at the end (MaxPool2d, with kernel size 2x2 and stride 2). Both wrap the VGG16 layers in an nn.Sequential container.

Here’s how the target model looks (the stimuli model is identical except that it lacks the last layer):

    Sequential(
      (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (1): ReLU(inplace=True)
      (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (3): ReLU(inplace=True)
      (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (6): ReLU(inplace=True)
      (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (8): ReLU(inplace=True)
      (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (11): ReLU(inplace=True)
      (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (13): ReLU(inplace=True)
      (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (15): ReLU(inplace=True)
      (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (18): ReLU(inplace=True)
      (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (20): ReLU(inplace=True)
      (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (22): ReLU(inplace=True)
      (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (25): ReLU(inplace=True)
      (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (27): ReLU(inplace=True)
      (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
      (29): ReLU(inplace=True)
      (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    )

In Python, I’m using torchvision to load the VGG16 model. Up to the point where the input is fed to the models (line 105 in the Lua script linked above), I’ve successfully recreated every step. Everything is precisely the same: the dimensions and the data.

However, I’ve encountered two different problems:

- I had to cast the tensors to 32-bit floats before feeding them to the network, a step that’s not necessary in the Lua script (which uses 64-bit float tensors).

- The error described in the title.
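For the first point, here is a minimal sketch of the cast. It assumes the input is a 64-bit float tensor; the tensor shape and the single convolution layer are stand-ins for illustration, not the actual data or model. PyTorch layers are created with float32 parameters unless the default dtype has been changed, so either the input is cast down or the layers are cast up:

```python
import torch
import torch.nn as nn

# Hypothetical input: a 64-bit float image batch in NCHW layout
tensor64 = torch.rand(1, 3, 28, 28, dtype=torch.float64)

# A freshly created layer holds float32 weights by default
conv = nn.Conv2d(3, 64, kernel_size=3, padding=1)

# Option 1: cast the input down to float32 before the forward pass
out32 = conv(tensor64.float())

# Option 2: cast the layer (or the whole model) up to float64 instead
conv64 = nn.Conv2d(3, 64, kernel_size=3, padding=1).double()
out64 = conv64(tensor64)

print(out32.dtype, out64.dtype)
```

Option 2 keeps the 64-bit precision of the Lua script, at the cost of more memory and slower computation.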

Now, I know this error arises when you use an image that’s below the minimum size required by the model. And that’s precisely it: the stimuli model is fed a 224x224 image, which doesn’t raise any errors; the target model is fed a 28x28 image, which does raise an error.

The catch is: it doesn’t raise any errors in the Lua script, even though it’s a 28x28 image.
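To make the size arithmetic concrete, here is a small sketch in plain Python that traces one spatial dimension through the pooling layers, using the output-size formula from the MaxPool2d documentation. The 3x3 convolutions with padding 1 and stride 1 keep the size unchanged, so only the pooling layers matter. The helper names `pool_out` and `trace` are made up for this example. The `ceil_mode=True` row is included only for comparison: whether the pooling layers in the Lua model round up is an assumption that would have to be checked against that model’s printout.

```python
import math

def pool_out(size, kernel=2, stride=2, padding=0, dilation=1, ceil_mode=False):
    """Output length of one spatial dimension after a max-pooling layer."""
    value = (size + 2 * padding - dilation * (kernel - 1) - 1) / stride + 1
    return math.ceil(value) if ceil_mode else math.floor(value)

def trace(size, n_pools, ceil_mode=False):
    """Sizes before and after each of n_pools consecutive 2x2/stride-2 pools."""
    sizes = [size]
    for _ in range(n_pools):
        sizes.append(pool_out(sizes[-1], ceil_mode=ceil_mode))
    return sizes

# Stimuli model: 4 pooling layers (the last one is dropped)
print(trace(224, 4))                    # [224, 112, 56, 28, 14]

# Target model: 5 pooling layers, ceil_mode=False as in the printout
print(trace(28, 5))                     # [28, 14, 7, 3, 1, 0]

# Same input with rounding up instead of down (comparison only)
print(trace(28, 5, ceil_mode=True))     # [28, 14, 7, 4, 2, 1]
```

With `ceil_mode=False`, the 28x28 input reaches the fifth pooling layer as 1x1, and the computed output size is 0, which is the condition the error reports.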

I’ve seen this thread, where ptrblck mentions that wrapping a model into an nn.Sequential container may cause some problems.

If anyone can give me a hint as to why that is, I’d greatly appreciate it.

Here’s how I load the model and manipulate it (it’s not pretty):

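    import torchvision.models as models
    import torch.nn as nn

    # Load the model
    model = models.vgg16(pretrained=True)

    # Ignore first module since it's the net itself
    layers = [module for module in model.modules()][1:]

    # layers[0] is model.features, the convolutional nn.Sequential printed above
    # Stimuli model: every layer except the final MaxPool2d
    stimuliLayers = list(layers[0])[:-1]
    # Target model: all 31 layers, including the final MaxPool2d
    targetLayers = list(layers[0])

    model_stimuli = nn.Sequential(*stimuliLayers)
    model_target = nn.Sequential(*targetLayers)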