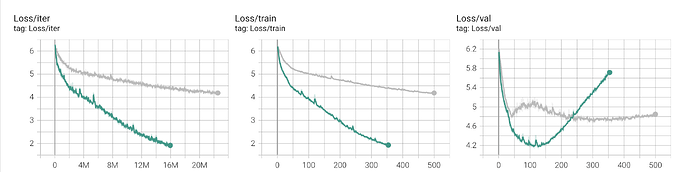

I’m working on a classification problem with 500 classes. My network has 3 fully connected layers followed by an LSTM layer, and I use `nn.CrossEntropyLoss()` as the loss function. I noticed that the model was overfitting (the validation loss started increasing after some epochs), so I added dropout (p=0.4) to the last fully connected layer. Now I’m seeing a higher loss for both training and validation.
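For reference, here is a minimal PyTorch sketch of a setup like the one in the paragraph above. It assumes the input has shape `(batch, seq_len, features)` and that the FC layers are applied at every time step. The layer widths, ReLU activations, input size, the placement of dropout after the last FC activation, and the final `nn.Linear` head that maps the last LSTM output to 500 logits are all hypothetical, because the post does not specify them. The sketch also shows the `model.train()` / `model.eval()` switch, because `nn.Dropout` is only active in training mode.

```python
import torch
import torch.nn as nn

NUM_CLASSES = 500


class FCLSTMClassifier(nn.Module):
    """Hypothetical reconstruction: 3 FC layers -> LSTM -> linear head.

    Layer sizes, ReLU activations, dropout placement, and the final
    nn.Linear head are assumptions. The post only specifies 3 FC layers,
    an LSTM, 500 classes, and dropout (p=0.4) on the last FC layer.
    """

    def __init__(self, in_features=64, hidden=128, lstm_hidden=256, p_drop=0.4):
        super().__init__()
        self.fc = nn.Sequential(
            nn.Linear(in_features, hidden), nn.ReLU(),
            nn.Linear(hidden, hidden), nn.ReLU(),
            nn.Linear(hidden, hidden), nn.ReLU(),
            nn.Dropout(p=p_drop),  # dropout on the output of the last FC layer
        )
        self.lstm = nn.LSTM(hidden, lstm_hidden, batch_first=True)
        self.head = nn.Linear(lstm_hidden, NUM_CLASSES)  # assumed classifier head

    def forward(self, x):
        # x: (batch, seq_len, in_features). nn.Linear acts on the last dim,
        # so the FC stack is applied independently at every time step.
        h = self.fc(x)
        out, _ = self.lstm(h)         # out: (batch, seq_len, lstm_hidden)
        return self.head(out[:, -1])  # raw logits from the last time step


model = FCLSTMClassifier()
criterion = nn.CrossEntropyLoss()  # expects raw logits; applies log-softmax itself

x = torch.randn(8, 10, 64)                 # dummy batch of 8 sequences
y = torch.randint(0, NUM_CLASSES, (8,))    # dummy class labels

model.train()  # dropout active: ~40% of FC outputs zeroed, the rest scaled by 1/(1 - 0.4)
train_loss = criterion(model(x), y)

model.eval()   # dropout disabled; use this mode when computing validation loss
with torch.no_grad():
    val_loss = criterion(model(x), y)
```

In this sketch the training loss is computed on a randomly thinned network, while the validation loss (in `eval()` mode) uses the full network with dropout turned off.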

The green line shows training without dropout.

Is this behavior justified or am I doing something wrong?