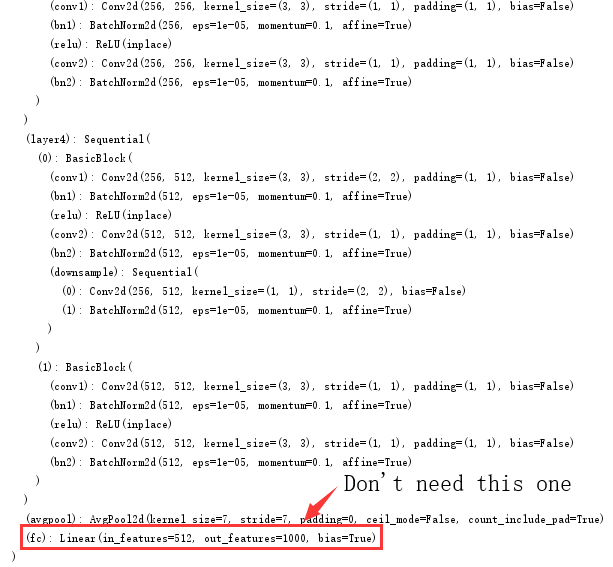

I am using the pretrained VGG16 model from torchvision. I don't need the last (FC) layer of the network. How can I remove it?

Don't you need this layer at all, i.e. do you want to get the avgpool output from your model?

If so, you could create a module that returns its input:

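```python
import torch
import torch.nn as nn
from torchvision import models

class Identity(nn.Module):
    def __init__(self):
        super(Identity, self).__init__()

    def forward(self, x):
        # Return the input unchanged
        return x

model = models.resnet18(pretrained=False)
model.fc = Identity()  # replace the final linear layer

x = torch.randn(1, 3, 224, 224)
output = model(x)
print(output.shape)  # torch.Size([1, 512])
```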

From the code it looks like you are using a ResNet, so I used one in my example.

However, usually you would replace it with a new linear layer whose output size equals your number of classes:

```python
model.fc = nn.Linear(512, num_classes)
```

Thank you very much.

PS: In my case, I only need the output of avgpool; I don't need the FC layer at all.

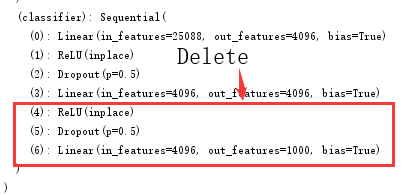

I realized I posted the wrong figure… If I use vgg16 and want to delete some layers, what should I do?

In this case you could use the following code:

```python
model.classifier = nn.Sequential(*[model.classifier[i] for i in range(4)])
print(model.classifier)
```

EDIT:

Alternatively, you can also call `.children()`, since range indexing might be cumbersome for large sequential models:

```python
model.classifier = nn.Sequential(*list(model.classifier.children())[:-3])
```

Sorry, but why does my model say `'ResNet' object has no attribute 'classifier'`?

Try using the `.fc` attribute instead: ResNet doesn't have a `classifier` (the block with dropout in it); the code above is for VGG. @cad8bd801dfbc87c5d0f

Using this approach, however, I found specifying different learning rates for different layers in the optimizer quite hard, which is very common in transfer learning. Can you please show how to achieve that in a simpler way? Thanks

Could you post your model architecture and which learning rates you would use for which layers?

In resnet18, I simply wanted to replace the final `fc` layer, something like:

```python
model.fc = nn.Linear(512, 10)
```

Now if I wanted to do:

```python
param_groups = [
    {'params': model.fc.parameters(), 'lr': .001},
    # `model.others` is not a real attribute; it stands for all layers except fc
    {'params': model.others.parameters(), 'lr': .0001},
]

optimizer = Adam(param_groups)
```

Finding the `model.others.parameters()` part seemed hard. I could probably replace it with the `params` list below:

```python
params = []
for child in list(model.children())[:-1]:
    params.extend(list(child.parameters()))
```

Or define a class deriving from nn.Module, which is what I did in the end. I was just wondering if there's an elegant one-liner; no big deal. Thanks.

Your current code looks good!

If the filtering were more complicated, you could use Python's `filter` to remove the `model.fc` parameters by name, but in your use case I'm not sure there is a faster or more elegant way of passing the other parameters to the optimizer.

`classifier` is the name (key) of that submodule, as shown when you print the model:

```
(classifier): Sequential(
…
)
```

So you can refer to a specific submodule by its key.

ResNet uses a different key name than VGG for this layer in its implementation (`fc` instead of `classifier`).

@cad8bd801dfbc87c5d0f

Sorry if this question seems trivial; why can’t we simply use:

```python
model.classifier = model.classifier[:-1]
```

Why is the Sequential line necessary?

Your assignment might actually work, and I'm not sure whether the nn.Sequential wrapper was necessary back when I wrote the code.

I think I just wanted to make sure the modules don’t get messed up somehow.

Anyway, I’ve tested your line of code using vgg16 and it seems to work perfectly, so you can just stick to your approach.

I tried to delete only the avgpool of the ResNet, but I always got shape mismatch errors. To avoid this error I replaced

```python
resnet.avgpool = nn.AvgPool2d(kernel_size=7, stride=1, padding=0)
```

with

```python
resnet.avgpool = nn.AvgPool2d(kernel_size=1, stride=1, padding=0)
```

and then

```python
resnet.fc = nn.Linear(512*7*7, number_of_classes)
```

This doesn't seem like an optimal way to deal with the problem. Do you have a better solution, please?

nn.AvgPool2d with a kernel size of 1 (and stride 1) acts like an identity layer, so your model basically got rid of the pooling layer.
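A quick way to convince yourself: with a 1x1 window and stride 1, each output value is the average of a single input value. A sketch using a random tensor shaped like ResNet-18's last feature map (the shape is just an example):

```python
import torch
import torch.nn as nn

pool = nn.AvgPool2d(kernel_size=1, stride=1, padding=0)
x = torch.randn(2, 512, 7, 7)
# Each 1x1 window averages a single value, so the output equals the input
print(torch.allclose(pool(x), x))  # True
```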

Alternatively, you could just define the resnet.avgpool as a “real” custom Identity layer:

```python
class Identity(nn.Module):
    def __init__(self):
        super(Identity, self).__init__()

    def forward(self, x):
        return x

model.avgpool = Identity()
model.fc = nn.Linear(512*7*7, nb_classes)
```

Both approaches will yield the same result.

Hi @ptrblck,

If we want to delete a sequence of layers in a pretrained model, how could we do it? For example, in a ResNet, assume we only want the first three layers with fixed weights and want to omit the rest. Should I put an Identity in place of every layer I don't want, or is there another way?

Not necessarily.

If you would like to keep the forward method without overriding it, replacing a few layers with nn.Identity layers might be the fastest approach.

However, if you only want to use a few specific layers, I would recommend writing a custom model (e.g. by deriving from the class and overriding `forward`), or alternatively reusing these layers in your custom model by passing them to it.

Dear

Thank you very much for your help with the layer removal information; it helped me fine-tune SqueezeNet.

Regards

@ptrblck

Is there any way to remove specific filters from a pretrained VGG16 model (the backbone of Faster R-CNN)? I want to prune VGG16 filter-wise, so I need to remove specific filters in a layer and, as a consequence, the corresponding input channels in the next layer too.

Thanks