I am trying to cut inception v3 at mixed_5d as suggested in this paper.

Unfortunately I’m still confused, which way is recommended/possible: Is it possible to “stop” the forward function after mixed_5d and if yes, why wouldn’t I want that?

Thank you very much, I’m really desperate…

I don’t know if in earlier versions of PyTorch the following works, but in v1.6 deleting a layer is as simple as:

del model.fc

This removes the layer from both model.modules() and model.state_dict().

It also does not create zombie layers, as an Identity layer would, which simplifies model loading.

I think the catch is what @ptrblck mentioned here:

“While the .fc module was removed, it’s still used in the forward method, which will raise [an] error.”

But I’m curious as well.

Would something like

model.classifier = None or model.fc = None

work the same as

model.classifier = nn.Identity() or model.fc = nn.Identity()?

Setting the module to None would still remove it, but might break the forward method for the same reason:

model = models.resnet18()

x = torch.randn(1, 3, 224, 224)

out = model(x)

model.fc = None

out = model(x)

> TypeError: 'NoneType' object is not callable

Hello,

how can I remove some layers from the AlexNet model in PyTorch?

I am wondering if there is a cleaner way to do this. Sometimes the model is complex, and one cannot use a sequential model.

Keras has a nice way to handle this by using layer.output and layer.input. One can simply connect the output of one layer to the input of another and compile the model.

It seems a bit weird that PyTorch does not provide a similar way to achieve this.

Probably the cleanest way to manipulate the usage of the layers and thus the forward execution would be to override the forward method and return the desired (intermediate) outputs.

Hi everyone,

I ended up with this network (modified from AlexNet). How can I get layers 0-11 (excluding the last MaxPool)?

Sequential(
  (0): Sequential(
    (0): Conv2d(3, 64, kernel_size=(11, 11), stride=(4, 4), padding=(2, 2))
    (1): ReLU(inplace=True)
    (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(64, 192, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
    (4): ReLU(inplace=True)
    (5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Conv2d(192, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (7): ReLU(inplace=True)
    (8): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (9): ReLU(inplace=True)
    (10): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU(inplace=True)
    (12): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
)

thank you

I think you can do model[0][:12].

I don’t know why, but according to your output a Sequential is nested inside another Sequential, so you have to index twice: first [0] to get into the inner Sequential, then [:12] to get layers 0-11.

Is this method correct? I tried it and it works, but I would like your suggestions.

class ResNetModify(ImageClassificationBase):

def init(self):

super().init()

self.network = models.resnet18(pretrained=True)

#Remove last two layers then added flatten layer

self.network = nn.Sequential(*list(self.network.children())[:-2],nn.Flatten())

#Input from previous layer [-3]

num_ftrs=32768

#added fully connected layer with output number of classes

self.network.fc = nn.Linear(num_ftrs, len(train_ds.classes))

def forward(self, xb):

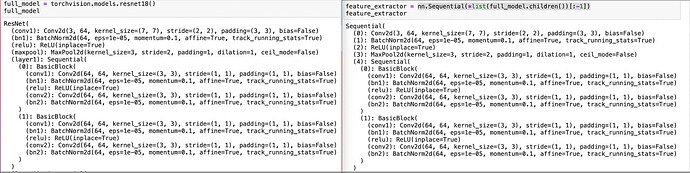

return torch.sigmoid(self.network(xb))Is there a way to keep the names from the original model? I want to use the resent18 as it is and just remove the fc layer. For resnet18, your solution becomes model = nn.Sequential(*list(full_model.children())[:-1]), but this removes the names. Do you think there is any way to use named_children to keep the whole structure of the original resnet, but without the last layer?

See screenshot attached for comparison. I did not capture all layers in the screenshot - the model is big.

To remove the last layer only, you could replace it with nn.Identity, which wouldn’t change any names:

model.fc = nn.Identity()

Thank you! I thought there might be a way to just chop off the fc layer, but this is good too.

model = torch.nn.Sequential(*list(model.children())[:-3])

This works for me with resnet18, thank you. The result doesn’t have any classifier part.

Would using nn.Identity() have the same results as creating the Identity class?

Yes, my code snippet was just written before PyTorch added nn.Identity as a supported module and you don’t have to define it manually anymore.

The nn.Identity module has an advantage of accepting any optional arguments, which will just be ignored and could be useful for some use cases.
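A tiny illustration of that point. The constructor arguments below are arbitrary placeholders, chosen only to show that they are ignored:

```python
import torch
import torch.nn as nn

# Constructor arguments are accepted and ignored, so nn.Identity can stand in
# for a layer that is normally built with arguments.
placeholder = nn.Identity(512, 1000, bias=False)

x = torch.randn(2, 512)
out = placeholder(x)

same_values = torch.equal(out, x)
num_params = len(list(placeholder.parameters()))
print(same_values, num_params)
```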

How can I remove the flatten from my vgg16 model?

import torchvision.models as models

import torch.nn as nn

model = models.vgg16(weights='IMAGENET1K_V1')

model.classifier = nn.Sequential(*list(model.classifier.children())[:-7])

model.avgpool = nn.Sequential(*list(model.avgpool.children())[:-1])

I want to remove the classifier layer, and the (avgpool): AdaptiveAvgPool2d layer.

My problem is that, as you said, my output will be flattened here, which I don’t want.

What would you suggest to avoid flattening the output at the end?

If you want to remove the torch.flatten operation, you could either create a custom model deriving from VGG16 and override the forward method, or you could try to wrap the needed modules into a new nn.Sequential container, which would also remove all functional API calls.

As you said:

To remove the last layer only, you could replace it with nn.Identity, which wouldn’t change any names:

model.fc = nn.Identity()

=======

Or in case I want to remove the middle layer?

You can replace any layer with an nn.Identity module, but especially layers “in the middle” of the model might need additional changes if the original layer was changing the shape of the input.

E.g. imagine you want to replace an nn.Conv2d(64, 128, 5) which will change the channel dimension as well as the spatial size of the activation due to the lack of padding. Replacing this layer with an nn.Identity could easily break the model since the following layer would receive an invalid activation shape.
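The shape problem can be reproduced in a few lines. The input size and the following layer below are made up for illustration:

```python
import torch
import torch.nn as nn

x = torch.randn(1, 64, 32, 32)

conv = nn.Conv2d(64, 128, 5)         # no padding: 32x32 -> 28x28, 64 -> 128 channels
next_layer = nn.Conv2d(128, 256, 3)  # hypothetical following layer, expects 128 channels

conv_out = conv(x)
identity_out = nn.Identity()(x)      # shape is unchanged: still 64 channels, 32x32

try:
    next_layer(identity_out)
    error = None
except RuntimeError as err:
    error = err  # channel mismatch: 64 given, 128 expected

print(conv_out.shape, identity_out.shape, type(error).__name__)
```

In such a case the following layer would have to be replaced or adapted as well.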