I am working on a classification challenge and want to ensemble different CNN models to improve the classification accuracy. I have seen a similar question, https://discuss.pytorch.org/t/custom-ensemble-approach/52024, but I am still confused.

This is one of the answers:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class MyModelA(nn.Module):
        def __init__(self):
            super(MyModelA, self).__init__()
            self.fc1 = nn.Linear(10, 2)

        def forward(self, x):
            x = self.fc1(x)
            return x


    class MyModelB(nn.Module):
        def __init__(self):
            super(MyModelB, self).__init__()
            self.fc1 = nn.Linear(20, 2)

        def forward(self, x):
            x = self.fc1(x)
            return x


    class MyEnsemble(nn.Module):
        def __init__(self, modelA, modelB):
            super(MyEnsemble, self).__init__()
            self.modelA = modelA
            self.modelB = modelB
            # 4 input features = 2 outputs from modelA + 2 outputs from modelB
            self.classifier = nn.Linear(4, 2)

        def forward(self, x1, x2):
            x1 = self.modelA(x1)
            x2 = self.modelB(x2)
            # Concatenate both outputs along the feature dimension
            x = torch.cat((x1, x2), dim=1)
            x = self.classifier(F.relu(x))
            return x


    # Create models and load state_dicts
    modelA = MyModelA()
    modelB = MyModelB()

    # Load state dicts (PATH is a placeholder for each model's checkpoint file)
    modelA.load_state_dict(torch.load(PATH))
    modelB.load_state_dict(torch.load(PATH))

    model = MyEnsemble(modelA, modelB)
    x1, x2 = torch.randn(1, 10), torch.randn(1, 20)
    output = model(x1, x2)

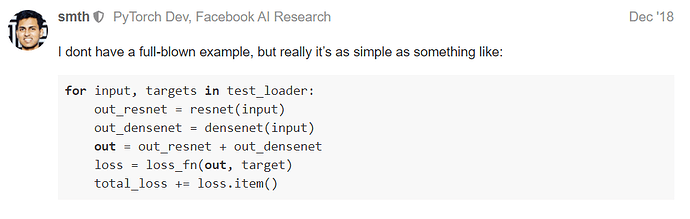

Another answer: https://discuss.pytorch.org/t/how-to-train-an-ensemble-of-two-cnns-and-a-classifier/3026/8

One answer combines the model outputs with torch.cat(), while the other adds them with "+". Which approach is more suitable for my classification task?
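
To make the difference between the two options concrete, here is a minimal sketch that applies both to the same pair of outputs. The tensors `logits_a` and `logits_b`, the `fusion` layer, and the class count of 7 are assumptions made up for illustration; they are not taken from either linked answer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

num_classes = 7  # hypothetical number of classes
batch = 4

# Stand-ins for the logits produced by two already-trained CNNs
logits_a = torch.randn(batch, num_classes)
logits_b = torch.randn(batch, num_classes)

# Option 1 (torch.cat): concatenate the outputs and feed them to a new
# linear layer. This layer has its own weights and must be trained.
fusion = nn.Linear(2 * num_classes, num_classes)
cat_out = fusion(F.relu(torch.cat((logits_a, logits_b), dim=1)))

# Option 2 ("+"): add the class probabilities and divide by the number of
# models. This introduces no new parameters.
avg_probs = (F.softmax(logits_a, dim=1) + F.softmax(logits_b, dim=1)) / 2
pred = avg_probs.argmax(dim=1)

print(cat_out.shape, avg_probs.shape, pred.shape)
```

With concatenation, the ensemble needs another round of training for the extra layer, whereas the sum or average can be used directly on models that were trained separately, provided they all predict the same set of classes.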

This is part of my code:

    if opt.model == 'VGG19':
        net = VGG('VGG19')
    elif opt.model == 'Resnet18':
        net = ResNet18()

    if opt.resume:
        # Load checkpoint.
        print('==> Resuming from checkpoint..')
        assert os.path.isdir(path), 'Error: no checkpoint directory found!'
        checkpoint = torch.load(os.path.join(path, 'PrivateTest_model.t7'))

        net.load_state_dict(checkpoint['net'])
        best_PublicTest_acc = checkpoint['best_PublicTest_acc']
        best_PrivateTest_acc = checkpoint['best_PrivateTest_acc']
        # Fixed: this value was previously assigned to best_PrivateTest_acc_epoch
        best_PublicTest_acc_epoch = checkpoint['best_PublicTest_acc_epoch']
        best_PrivateTest_acc_epoch = checkpoint['best_PrivateTest_acc_epoch']
        start_epoch = checkpoint['best_PrivateTest_acc_epoch'] + 1
    else:
        print('==> Building model..')

    if use_cuda:
        net.cuda()

    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(net.parameters(), lr=opt.lr, momentum=0.9, weight_decay=5e-4)

    # Training
    def train(epoch):
        print('\nEpoch: %d' % epoch)
        global Train_acc
        net.train()
        train_loss = 0
        correct = 0
        total = 0

        # Step-wise learning rate decay after learning_rate_decay_start epochs
        if epoch > learning_rate_decay_start and learning_rate_decay_start >= 0:
            frac = (epoch - learning_rate_decay_start) // learning_rate_decay_every
            decay_factor = learning_rate_decay_rate ** frac
            current_lr = opt.lr * decay_factor
            utils.set_lr(optimizer, current_lr)  # set the decayed rate
        else:
            current_lr = opt.lr
        print('learning_rate: %s' % str(current_lr))

        for batch_idx, (inputs, targets) in enumerate(trainloader):
            if use_cuda:
                inputs, targets = inputs.cuda(), targets.cuda()
            optimizer.zero_grad()
            inputs, targets = Variable(inputs), Variable(targets)
            outputs = net(inputs)
            loss = criterion(outputs, targets)
            loss.backward()
            utils.clip_gradient(optimizer, 0.1)
            optimizer.step()

            # loss.item() replaces loss.data[0], which fails on recent PyTorch versions
            train_loss += loss.item()
            _, predicted = torch.max(outputs.data, 1)
            total += targets.size(0)
            correct += predicted.eq(targets.data).cpu().sum()

            utils.progress_bar(batch_idx, len(trainloader), 'Loss: %.3f | Acc: %.3f%% (%d/%d)'
                               % (train_loss/(batch_idx+1), 100.*correct/total, correct, total))

        Train_acc = 100.*correct/total
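
Since both networks in the code above receive the same image batch, one way to combine them at inference time is a small wrapper that averages their softmax outputs. The following is only a sketch under stated assumptions: `AveragingEnsemble` and `tiny_cnn` are hypothetical names, `tiny_cnn` stands in for `VGG('VGG19')` and `ResNet18()`, and the input size and class count are made up.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class AveragingEnsemble(nn.Module):
    """Averages the softmax probabilities of models that share one input."""

    def __init__(self, models):
        super().__init__()
        # ModuleList registers the sub-models so .cuda() and .eval() reach them
        self.models = nn.ModuleList(models)

    def forward(self, x):
        probs = [F.softmax(m(x), dim=1) for m in self.models]
        return torch.stack(probs, dim=0).mean(dim=0)


def tiny_cnn(num_classes):
    # Hypothetical stand-in for the real, separately trained networks
    return nn.Sequential(
        nn.Conv2d(1, 8, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
        nn.Linear(8, num_classes),
    )


num_classes = 7  # assumed class count
ensemble = AveragingEnsemble([tiny_cnn(num_classes), tiny_cnn(num_classes)])
ensemble.eval()

with torch.no_grad():
    images = torch.randn(2, 1, 44, 44)  # assumed input shape
    probs = ensemble(images)
    predicted = probs.argmax(dim=1)
```

In practice each sub-model would first get its own checkpoint via `load_state_dict`. The wrapper returns probabilities rather than logits, so it is meant for evaluation and should not be passed to `nn.CrossEntropyLoss`, which expects raw logits.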

Please help me. Thanks so much!