I am trying to train a bidirectional LSTM. My input data has shape [batch_size, 8 (seq), 6] and my output has shape [batch_size, 2]. My code:

    import torch
    import torch.nn as nn

    class MODEL(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers, num_classes, device):
            super(MODEL, self).__init__()
            torch.manual_seed(0)
            self.device = device
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True, bidirectional=True).to(self.device)
            # hidden_size*2: forward and backward outputs are concatenated
            self.fc = nn.Linear(hidden_size*2, num_classes).to(self.device)
            self.dropout = nn.Dropout(0.2)  # defined but not applied in forward
            self.activation = nn.Sigmoid()

        def forward(self, x):
            # Initial states: (num_layers * 2 directions, batch, hidden_size)
            h0 = torch.zeros(self.num_layers*2, x.size(0), self.hidden_size).to(self.device)
            c0 = torch.zeros(self.num_layers*2, x.size(0), self.hidden_size).to(self.device)
            out, _ = self.lstm(x, (h0, c0))  # out: (batch, seq, hidden_size*2)
            out = out[:, -1, :]  # keep only the last time step
            out = self.activation(self.fc(out))
            return out
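
To make the shapes concrete, here is a minimal sketch of how a [batch_size, 8, 6] input flows through a bidirectional LSTM and a linear head. The values `hidden_size=32`, `num_layers=2` and `batch_size=4` are assumptions chosen only for illustration, and the input is random placeholder data.

```python
import torch
import torch.nn as nn

# Illustrative values only; hidden_size, num_layers and batch_size are assumptions.
batch_size, seq_len, input_size = 4, 8, 6
hidden_size, num_layers, num_classes = 32, 2, 2

lstm = nn.LSTM(input_size, hidden_size, num_layers,
               batch_first=True, bidirectional=True)
fc = nn.Linear(hidden_size * 2, num_classes)

x = torch.randn(batch_size, seq_len, input_size)  # random placeholder input

# When no initial state is passed, nn.LSTM uses zeros for h0 and c0.
out, (h_n, c_n) = lstm(x)

last = out[:, -1, :]            # last time step, both directions concatenated
pred = torch.sigmoid(fc(last))  # sigmoid bounds every output to [0, 1]

out_shape = tuple(out.shape)    # (batch, seq, 2 * hidden_size)
h_shape = tuple(h_n.shape)      # (num_layers * 2, batch, hidden_size)
pred_shape = tuple(pred.shape)  # (batch, num_classes)
in_unit_interval = bool(((pred >= 0) & (pred <= 1)).all())
```

Because of the final sigmoid, the predictions can only lie in [0, 1], so targets outside that range cannot be matched exactly.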

I am using the Adam optimiser with a learning rate of 0.0015 and SmoothL1Loss. For reference, I am trying to implement the IONet paper, except that the sequence length of their data is 200.
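
The optimiser and loss setup described above can be sketched roughly as follows. `TinyBiLSTM` is a hypothetical stand-in model (not the one from this post), `hidden_size=16` is an assumption, and the inputs and targets are random placeholders, so the loss values it produces say nothing about the real data.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in model, used only to illustrate the training setup.
class TinyBiLSTM(nn.Module):
    def __init__(self, input_size=6, hidden_size=16, num_classes=2):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size,
                            batch_first=True, bidirectional=True)
        self.fc = nn.Linear(hidden_size * 2, num_classes)

    def forward(self, x):
        out, _ = self.lstm(x)          # zero initial states by default
        return self.fc(out[:, -1, :])  # last time step -> 2 outputs

model = TinyBiLSTM()
optimizer = torch.optim.Adam(model.parameters(), lr=0.0015)
criterion = nn.SmoothL1Loss()

# Random placeholder batch with the shapes from the post.
x = torch.randn(32, 8, 6)
y = torch.rand(32, 2)

losses = []
model.train()
for step in range(5):
    optimizer.zero_grad()          # clear gradients from the previous step
    loss = criterion(model(x), y)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())     # store a Python float, not the graph
```

`losses` holds one value per optimisation step; swapping `nn.SmoothL1Loss()` for `nn.MSELoss()` changes only the `criterion` line.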

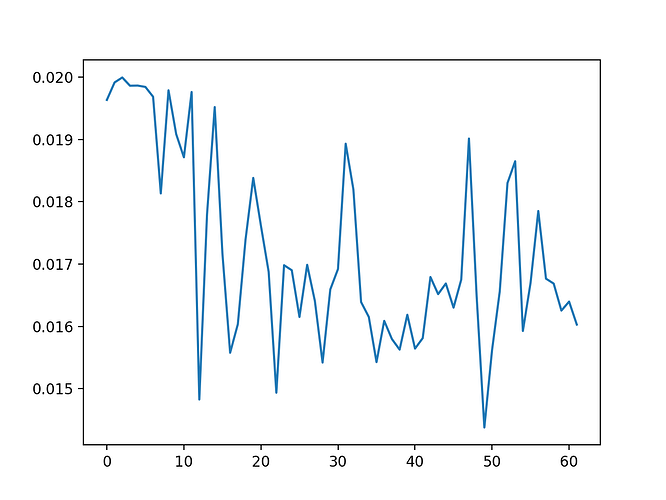

I have also tried MSELoss, but the loss still keeps fluctuating and does not decrease. I also looked at a previously raised issue that sounds similar to mine (LSTM loss keeps fluctuating), but it does not seem to have been solved yet.

My loss keeps fluctuating: it initially decreases, but then it starts to increase. Any help is appreciated. Thanks.