Hi PyTorch Team,

I have an input tensor of shape (B, C_in, H, W), a convolution with C_in input channels, C_out output channels, kernel size K x K and padding K // 2, and a mask of shape (B, C_in, H, W). I want to multiply the intermediate 5D output of the convolution by my mask before carrying out the final summation over the input channels.

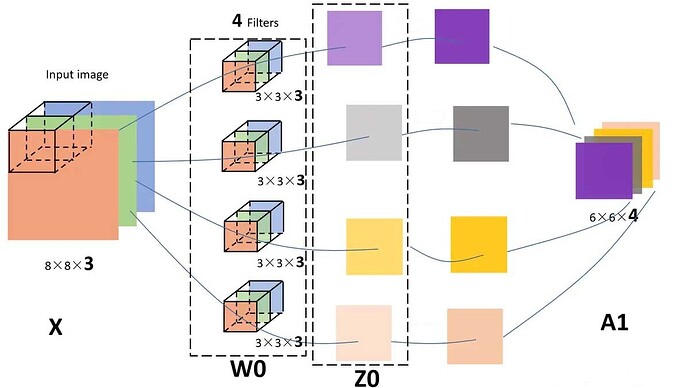

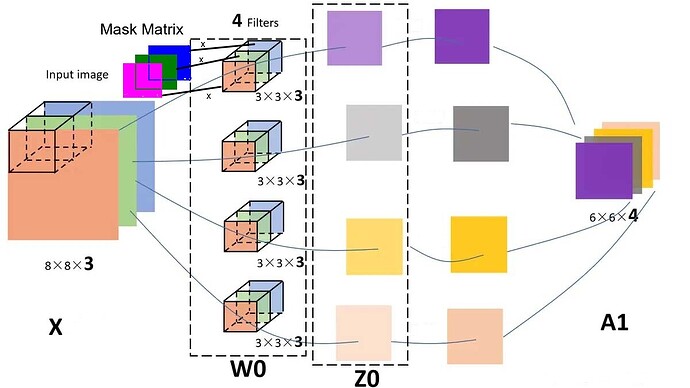

The pictures of the usual and convolution with masking is as follows:

The usual convolution operates as follows:

However, I want to mask out the intermediate 5D output with the mask matrix before the summation across the channels as shown in the following updated figure

Figures courtesy of developpaper.com
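Written out in index notation, the masked convolution described above would amount to the following. This is only a sketch under assumed notation that is not part of the original post: x is the input, W the weight with shape (C_out, C_in, K, K), M the mask, p = K // 2 the zero padding, K is odd, and the bias is omitted.

$$
y_{b,o,h,w} \;=\; \sum_{c=0}^{C_{in}-1} M_{b,c,h,w} \left( \sum_{i=0}^{K-1} \sum_{j=0}^{K-1} W_{o,c,i,j} \; x_{b,c,\,h+i-p,\,w+j-p} \right)
$$

The term in parentheses is one entry of the intermediate 5D tensor of shape (B, C_out, C_in, H, W). Setting every entry of M to 1 recovers the usual convolution.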

I first tried to obtain the intermediate 5D tensor of shape (B, C_out, C_in, H, W), but I have not been able to extract it. Here is what I tried:

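- I tried a grouped convolution, but it still returns a 4D tensor

import torch

import torch.nn as nn

in_channels = 5

# groups=in_channels gives each input channel its own 20 / 5 = 4 filters

conv = nn.Conv2d(in_channels, 20, 3, groups=in_channels, padding=1)

x = torch.randn(1, in_channels, 64, 64)

output = conv(x)

print(output.shape)

# torch.Size([1, 20, 64, 64])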

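- I also tried unfolding the input

inp = torch.randn(1, in_channels, 10, 12)

w = torch.randn(2, in_channels, 3, 3)

inp_unf = torch.nn.functional.unfold(inp, (3, 3))

print(inp_unf.shape)

# torch.Size([1, 45, 80])

# the matmul sums over C_in * K * K at once, so the per-channel terms are already merged

out_unf = inp_unf.transpose(1, 2).matmul(w.view(w.size(0), -1).t()).transpose(1, 2)

print(out_unf.shape)

# torch.Size([1, 2, 80])

# reshaping gives the usual 4D output, not the 5D tensor

out = out_unf.view(1, 2, 8, 10)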
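To pin down the intended semantics, the target computation can be written as a naive loop over input channels. This is only a sketch, not an efficient or official solution: it assumes a weight of shape (C_out, C_in, K, K), odd K, zero padding of K // 2 and no bias, and the helper name `masked_conv2d_reference` is hypothetical.

```python
import torch
import torch.nn.functional as F


def masked_conv2d_reference(x, weight, mask):
    """Naive reference: mask each input channel's contribution, then sum.

    x:      (B, C_in, H, W)
    weight: (C_out, C_in, K, K), K odd, no bias (assumption)
    mask:   (B, C_in, H, W)
    """
    pad = weight.shape[-1] // 2
    # Convolve each input channel on its own: one (B, C_out, H, W) map per channel.
    per_channel = [
        F.conv2d(x[:, c:c + 1], weight[:, c:c + 1], padding=pad)
        for c in range(x.shape[1])
    ]
    # Stack into the intermediate 5D tensor of shape (B, C_out, C_in, H, W).
    inter = torch.stack(per_channel, dim=2)
    # Broadcast the mask over C_out, then sum over the input channels.
    out = (inter * mask.unsqueeze(1)).sum(dim=2)
    return inter, out


torch.manual_seed(0)
x = torch.randn(2, 5, 10, 12)
w = torch.randn(3, 5, 3, 3)
mask = (torch.rand(2, 5, 10, 12) > 0.5).float()

inter, out = masked_conv2d_reference(x, w, mask)
print(inter.shape)  # torch.Size([2, 3, 5, 10, 12])
print(out.shape)    # torch.Size([2, 3, 10, 12])
```

With an all-ones mask, the result should agree with `F.conv2d(x, w, padding=1)` up to floating-point error, which gives a quick sanity check. The Python loop over channels is likely too slow for large C_in, so this is meant as a reference to compare against rather than as a final implementation.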

Since I could not find a working solution on the forums, I decided to post here. I would appreciate any help with this question. If this is a duplicate post, could you please point me to the existing answer?