Hi,

I would like to use `MultiheadAttention` as self-attention on the output of an LSTM applied to a single sequence.

```python
import torch

# shape: (sequence length, batch size, embedding dimension)
inp = torch.randn(5, 3, 10)

lstm = torch.nn.LSTM(input_size=10, hidden_size=10, num_layers=2)
self_attn = torch.nn.MultiheadAttention(embed_dim=10, num_heads=2)

x, _ = lstm(input=inp)

# x is query, key, value at the same time
out, weight = self_attn(query=x, key=x, value=x)
```

```
>>> out
tensor([[[ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273]],

        [[ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273]],

        [[ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273]],

        [[ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273]],

        [[ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273]]], grad_fn=<AddBackward0>)

>>> # shape: (batch size, sequence length, embedding dimension)
>>> out.transpose(0, 1)
tensor([[[ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192],
         [ 0.0137,  0.0457, -0.0169, -0.0393, -0.0214,  0.0162,  0.0534,
           0.0202,  0.0519, -0.0192]],

        [[ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242],
         [ 0.0116,  0.0359, -0.0044, -0.0320, -0.0294,  0.0175,  0.0573,
           0.0373,  0.0316, -0.0242]],

        [[ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273],
         [ 0.0128,  0.0410, -0.0083, -0.0347, -0.0234,  0.0136,  0.0642,
           0.0148,  0.0425, -0.0273]]], grad_fn=<TransposeBackward0>)
```
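The `transpose(0, 1)` above is only needed because `nn.LSTM` and `nn.MultiheadAttention` both default to the `(sequence length, batch size, embedding dimension)` layout. A minimal sketch of the same pipeline kept in `(batch, sequence, embedding)` layout throughout, assuming a PyTorch version where `nn.MultiheadAttention` accepts `batch_first` (added around 1.9, if I remember correctly); the `_bf` names and random input are just stand-ins:

```python
import torch

# Same sizes as above, but batch-first: (batch size, sequence length, embedding dimension)
inp_bf = torch.randn(3, 5, 10)

# batch_first=True makes both modules read and return (batch, seq, embed) tensors
lstm_bf = torch.nn.LSTM(input_size=10, hidden_size=10, num_layers=2, batch_first=True)
self_attn_bf = torch.nn.MultiheadAttention(embed_dim=10, num_heads=2, batch_first=True)

x_bf, _ = lstm_bf(inp_bf)                           # (3, 5, 10)
out_bf, weight_bf = self_attn_bf(x_bf, x_bf, x_bf)  # out_bf: (3, 5, 10)

# Attention weights are (batch, target length, source length), averaged over heads
print(out_bf.shape, weight_bf.shape)
```

With this layout, `out_bf[b]` is directly the sequence of token outputs for sequence `b`, so no transpose is needed to inspect it.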

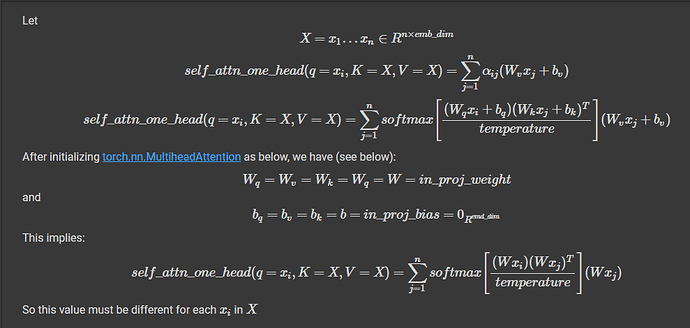

As we can see, every token within a sequence gets the same attention output: after transposing, all five rows of each sequence are identical. It looks as though no token is actually being attended to.
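One way to check whether the outputs are exactly identical, or only identical at the 4 decimal places PyTorch prints, is to look at the raw differences and at the returned attention weights. A rough sketch; the fixed seed is my own addition for reproducibility, and the printed numbers will differ from run to run otherwise:

```python
import torch

torch.manual_seed(0)  # added only to make runs reproducible

inp = torch.randn(5, 3, 10)  # (sequence length, batch size, embedding dimension)
lstm = torch.nn.LSTM(input_size=10, hidden_size=10, num_layers=2)
self_attn = torch.nn.MultiheadAttention(embed_dim=10, num_heads=2)

x, _ = lstm(inp)
out, weight = self_attn(x, x, x)  # weight: (batch, seq, seq), averaged over heads

# Per sequence: largest absolute difference between any token's output and token 0's.
# 0.0 would mean truly identical; a tiny non-zero value means "identical when rounded".
max_diff = (out - out[:1]).abs().amax(dim=(0, 2))
print(max_diff)

# How far the attention weights are from a uniform distribution (1 / sequence length).
uniform = 1.0 / weight.size(-1)
print((weight - uniform).abs().max())
```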

I have tried using `attn_mask` and `key_padding_mask`, but it made no difference.
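For comparison, this is how I understand the two masks are meant to be shaped for the `(sequence length, batch size, embedding dimension)` layout, based on the `nn.MultiheadAttention` docs: `attn_mask` is `(L, S)` and a boolean `True` means "may not attend", while `key_padding_mask` is `(N, S)` and `True` marks padded keys to ignore. The causal mask and the padding pattern below are hypothetical choices for illustration, not what I actually tried:

```python
import torch

seq_len, batch_size, embed_dim = 5, 3, 10
x = torch.randn(seq_len, batch_size, embed_dim)  # stand-in for the LSTM output
self_attn = torch.nn.MultiheadAttention(embed_dim=embed_dim, num_heads=2)

# attn_mask: (L, S) bool, True = this query position may NOT attend to that key.
# Hypothetical choice: a causal mask, so token t only sees tokens 0..t.
attn_mask = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)

# key_padding_mask: (N, S) bool, True = this key is padding and is ignored.
# Hypothetical choice: the third sequence only has 3 real tokens.
key_padding_mask = torch.zeros(batch_size, seq_len, dtype=torch.bool)
key_padding_mask[2, 3:] = True

out, weight = self_attn(x, x, x, attn_mask=attn_mask, key_padding_mask=key_padding_mask)
print(weight[0])  # rows are queries, columns are keys; masked entries are 0
```

Every query row keeps at least one unmasked key here, which avoids rows where softmax would have nothing to normalize over.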

Can someone explain what happened? And please correct me if I am applying them the wrong way.

Thanks!