I use `nn.MultiheadAttention` as shown below and print `attn_output_weights` during both training and evaluation:

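```python
import torch.nn as nn


class New_attention(nn.Module):
    def __init__(self, num_heads=5):
        super(New_attention, self).__init__()
        # embed_dim=3000 must be divisible by num_heads (3000 / 5 = 600 per head)
        self.multi_attn = nn.MultiheadAttention(3000, num_heads, dropout=0.3, batch_first=True)

    def forward(self, q, k, v):
        # With batch_first=True, the weights are averaged over heads by default:
        # shape (batch, target_len, source_len)
        attn_output, attn_output_weights = self.multi_attn(q, k, v)
        print(attn_output_weights)
        return attn_output, attn_output_weights
```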

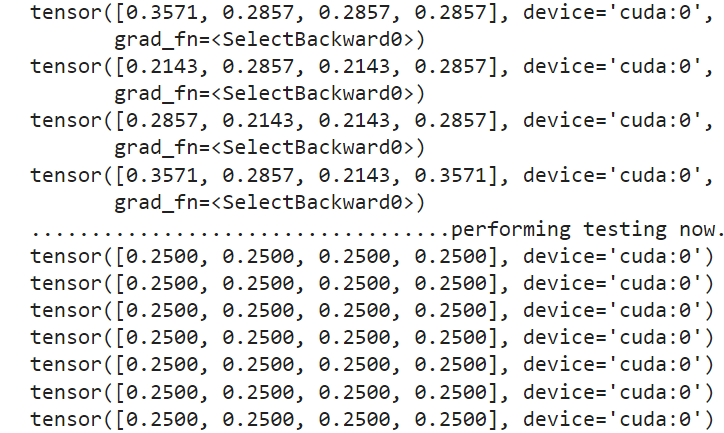

The weights look reasonable in training mode. However, when I evaluate with `model.eval()` inside `with torch.no_grad():`, all the values in the weight tensor become the same.

The input shapes are:

- `q`: `(128, 1, 3000)`
- `k`: `(128, 4, 3000)`
- `v`: `(128, 4, 3000)`
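A minimal, self-contained sketch that may help narrow this down, assuming random `torch.randn` inputs in place of the real data (the seed and variable names are illustrative). It runs the same `nn.MultiheadAttention` configuration once in training mode and twice in eval mode. One thing worth checking: in training mode the returned weights are, as far as I know, taken after the attention dropout (p=0.3) is applied, so some of the variation seen during training may come from dropout rather than from the softmax scores themselves.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Same configuration as in the question: embed_dim=3000, 5 heads, dropout=0.3
attn = nn.MultiheadAttention(3000, 5, dropout=0.3, batch_first=True)

# Assumption: random inputs stand in for the real data, with the shapes above
q = torch.randn(128, 1, 3000)
k = torch.randn(128, 4, 3000)
v = torch.randn(128, 4, 3000)

# Training mode: attention dropout is active
attn.train()
_, w_train = attn(q, k, v)

# Eval mode without autograd: dropout is disabled, so repeated calls should match
attn.eval()
with torch.no_grad():
    _, w_eval = attn(q, k, v)
    _, w_eval2 = attn(q, k, v)

# Weights are averaged over heads by default: (batch, tgt_len, src_len)
print(w_train.shape, w_eval.shape)
# Each eval row is a plain softmax over the 4 keys, so it should sum to 1
print(w_eval.sum(dim=-1)[:3])
print("train:", w_train[:3])
print("eval: ", w_eval[:3])
```

If the eval rows come out close to `[0.25, 0.25, 0.25, 0.25]`, the attention scores over the 4 keys are nearly equal, and the differences seen in training would then mostly reflect dropout noise rather than learned attention.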

Does anybody know why?