@imesery: Regarding the size of the data: I have now increased the batch size to 128 (224x224x3 images). I see GPU memory in use, but utilization is still only ~1% (apart from a ~1 sec peak when initializing the model). Is that normal? Shouldn't the GPU's compute resources be used for the actual model calculations? Is it possible that 1% is simply all it needs?

It is normal; see this post for more details: https://devblogs.microsoft.com/directx/gpus-in-the-task-manager/

>>> import torch
>>> a = torch.rand(20000, 20000).cuda()
>>> while True:
...     a += 1
...     a -= 1

Running this code, I see 100% utilization in nvidia-smi but only about 1% in Task Manager, so the low Task Manager reading should be okay.
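To cross-check the Task Manager number without watching nvidia-smi by hand, the same reading can be polled from Python. This is only a minimal sketch: it assumes `nvidia-smi` is on the PATH, and the helper names `parse_gpu_stats` and `read_gpu_stats` are made up for illustration, not part of any library.

```python
import subprocess

# nvidia-smi query: GPU utilization (%) and used memory (MiB), bare CSV values.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def _to_int(field):
    """Return the field as an int, or None for values such as '[N/A]'."""
    try:
        return int(field.strip())
    except ValueError:
        return None


def parse_gpu_stats(csv_text):
    """Parse 'utilization, memory' lines (one per GPU) into a list of dicts."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, mem = line.split(",")
        stats.append(
            {"utilization_pct": _to_int(util), "memory_used_mib": _to_int(mem)}
        )
    return stats


def read_gpu_stats():
    """Run nvidia-smi and return parsed stats, or None if it cannot be run."""
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_gpu_stats(result.stdout)


if __name__ == "__main__":
    print(read_gpu_stats())
```

Running it in a second terminal while the loop above is busy should show the same utilization figure that nvidia-smi reports, independent of which engine graph Task Manager happens to display.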

I see… thank you very much!

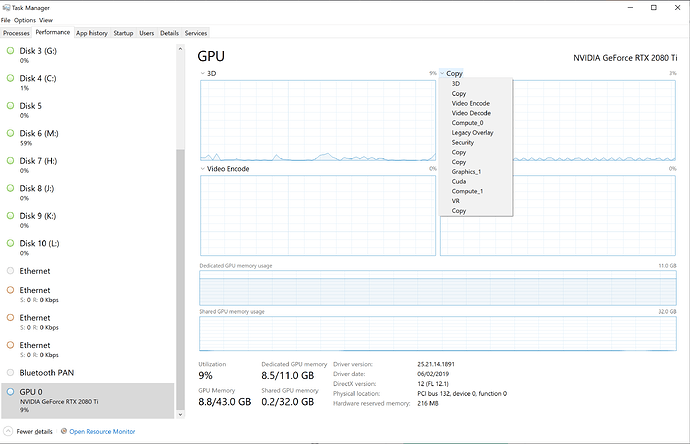

Hi there mate! I notice that in the screenie you are clearly not looking at CUDA utilization! Please note the tiny arrow pointing down next to 3D (and Copy, Video Encode & Video Decode). If you wish to see CUDA activity, click the tiny arrow and pick CUDA, which is somewhere near the bottom of the list. I can assure you that if you train a deep network you will in fact see CUDA activity spikes!

Based on what I read here, there is a simple solution for this problem. As you can see in Task Manager, there are 4 options on the GPU page:

3D, Copy, Video Encode, Video Decode.

Just click on one of them (e.g. Copy) and change it to CUDA.

That’s all.

Did you get Python to use CUDA on the NVIDIA 980 GPU?
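One quick way to check this from Python is to ask PyTorch directly. A minimal sketch, assuming a single GPU at device index 0; `cuda_report` is a hypothetical helper name used here only for illustration:

```python
def cuda_report():
    """Report whether PyTorch is importable and whether it can see a CUDA GPU."""
    report = {"torch_installed": False, "cuda_available": False, "device_name": None}
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return report

    report["torch_installed"] = True
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        # Index 0 is an assumption: the first (or only) visible GPU.
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


print(cuda_report())
```

If `cuda_available` comes back `False` even though the card is installed, the usual suspects are a CPU-only PyTorch build or a driver problem rather than anything in Task Manager.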

If you can’t see CUDA in dropdown menu, read this post.