I wrap the forward and backward passes in a try/except block, and I also delete all tensors after every batch:

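```python
import torch

for batch in train_loader:  # loop header assumed; the original snippet shows only the loop body
    try:
        decoder_output, loss = model(batch)
        if Q.qsize() > 0:
            break
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        train_loss.append(loss.mean().item())
        del decoder_output, loss
    except Exception as e:
        # OOM handler: drop gradients and return cached blocks to the driver
        optimizer.zero_grad()
        for p in model.parameters():
            if p.grad is not None:
                del p.grad
        torch.cuda.empty_cache()
        oom += 1

    # Per-batch cleanup, run whether or not an exception occurred
    del batch
    for p in model.parameters():
        if p.grad is not None:
            del p.grad
    torch.cuda.empty_cache()
```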
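One mechanism that may be worth ruling out (a sketch, not a diagnosis of the failure described here): the PyTorch FAQ notes that a Python exception object holds a reference to the stack frame where the error was raised, so tensors referenced from that frame stay alive while the `except` clause is running, and a `torch.cuda.empty_cache()` call made there cannot give their memory back. The plain-Python example below needs no PyTorch; `Activation`, `forward`, and `train_step` are hypothetical stand-ins, and a weak reference is used to observe when the "activation" actually becomes freeable under CPython's reference counting.

```python
import weakref


class Activation:
    """Hypothetical stand-in for a large intermediate tensor."""


def forward(refs):
    act = Activation()             # only this frame holds a strong reference
    refs.append(weakref.ref(act))  # a weak reference lets us observe when it is freed
    raise RuntimeError("CUDA out of memory (simulated)")


def train_step():
    refs = []
    try:
        forward(refs)
    except RuntimeError:
        # The active exception's traceback still points at forward()'s frame,
        # so `act` cannot be freed yet; cleanup done here would not reclaim it.
        alive_in_except = refs[0]() is not None
    # Once the except clause ends, the exception and its traceback are dropped,
    # and CPython's reference counting frees `act` immediately.
    alive_after_except = refs[0]() is not None
    return alive_in_except, alive_after_except


print(train_step())  # (True, False) on CPython
```

The FAQ's suggested remedy is to move the recovery code outside the `except` clause, for example by only setting a flag such as `oom = True` inside `except` and calling `optimizer.zero_grad()` and `torch.cuda.empty_cache()` after the clause has exited.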

Training runs normally for the first few thousand steps: even when an OOM exception occurs, it is caught and the GPU memory is released.

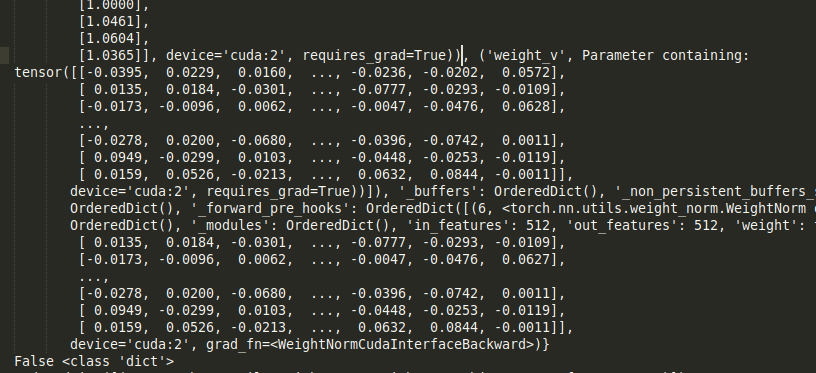

But after a few thousand batches, it suddenly starts hitting OOM on every batch, and the memory never seems to be released again.

This is very strange to me. Does anyone have suggestions? (I'm using DistributedDataParallel.)