Hi, can someone please help me by explaining how to correctly pass minibatches of sequential data through a bidirectional RNN? An example would be great, if possible.

I will try to provide some context for my problem:

The problem is similar to a language modeling task. I’m trying to predict the next item for each item in a sequence.

In my case, the input to the model is a minibatch of N sentences of varying length. Each sentence consists of word indices, each representing a word in the vocabulary:

sents = [[4, 545, 23, 1], [34, 84], [23, 6, 774]]

The sentences in the dataset are randomly shuffled before creating minibatches.

Here is how the minibatches are created:

import random
import torch

def batches(data, batch_size):
    """ Yields batches of sentences from 'data', sorted by decreasing length. """
    random.shuffle(data)  # shuffles the dataset in place
    for i in range(0, len(data), batch_size):
        sentences = data[i:i + batch_size]
        # pack_sequence expects the longest sentence first
        sentences.sort(key=len, reverse=True)
        yield [torch.LongTensor(s) for s in sentences]

The model predicts the next element in the sentence, so the input and target look like this:

input_sentence = [1, 4, 5, 7]

target_sentence = [4, 5, 7, 9]

Packed sequences are used to handle sentences of varying length. Here is how the input and target are created:

x = nn.utils.rnn.pack_sequence([s[:-1] for s in sents])

y = nn.utils.rnn.pack_sequence([s[1:] for s in sents])
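To make the packed layout concrete, here is a minimal sketch using the toy batch from above, reordered by decreasing length as `batches` would do. It only illustrates how `pack_sequence` arranges the shifted inputs and targets; the tensors are illustrative and not taken from the real dataset.

```python
import torch
import torch.nn as nn

# Toy batch from the post, already sorted by decreasing length
# (pack_sequence expects this ordering by default).
sents = [torch.LongTensor(s) for s in [[4, 545, 23, 1], [23, 6, 774], [34, 84]]]

x = nn.utils.rnn.pack_sequence([s[:-1] for s in sents])  # inputs: all but the last token
y = nn.utils.rnn.pack_sequence([s[1:] for s in sents])   # targets: all but the first token

# PackedSequence.data is time-major: all first tokens, then all second tokens, ...
print(x.data.tolist())         # [4, 23, 34, 545, 6, 23]
print(x.batch_sizes.tolist())  # [3, 2, 1]
print(y.data.tolist())         # [545, 6, 84, 23, 774, 1]
```

Because `x` and `y` are packed from sequences of the same lengths in the same order, row i of `x.data` lines up with row i of `y.data`.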

This input x, consisting of a minibatch of sentences, is then sent through the forward pass of the model:

out = model(x)

The model itself:

import torch
import torch.nn as nn
import torch.nn.functional as F

class Model(nn.Module):
    """ A language model RNN with GRU layer(s). """

    def __init__(self, vocab_size, embedding_dim, hidden_dim, gru_layers, dropout):
        super(Model, self).__init__()
        self.embedding = nn.Embedding(vocab_size, embedding_dim)
        self.recurrent_layer = nn.GRU(input_size=embedding_dim, hidden_size=hidden_dim, num_layers=gru_layers, dropout=dropout, bidirectional=True)
        # hidden_dim*2 because the forward and backward outputs are concatenated
        self.fc1 = nn.Linear(hidden_dim*2, vocab_size)

    def forward(self, packed_sents):
        """ Takes a PackedSequence of sentence tokens that has T tokens
        belonging to vocabulary V. Outputs predicted log-probabilities
        for the token following the one that's input in a tensor shaped
        (T, |V|).
        """
        # Embed the flat token data and re-wrap it with the same batch sizes
        embedded_sents = nn.utils.rnn.PackedSequence(self.embedding(packed_sents.data), packed_sents.batch_sizes)
        out_packed_sequence, hidden = self.recurrent_layer(embedded_sents)
        out = self.fc1(out_packed_sequence.data)
        return F.log_softmax(out, dim=1)

The output of the model is a (log-)probability distribution over the unique items in the dataset: for each item in the sequence, it gives how likely each item in the vocabulary is to come next.
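As a shape check, the following sketch runs the same three steps as the forward pass (embedding, bidirectional GRU, linear layer) on the toy batch. The layer sizes are made up for illustration, and using `F.nll_loss` against `y.data` is an assumption about how the loss could be computed, not necessarily what the linked training code does.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
vocab_size, embedding_dim, hidden_dim = 1000, 8, 16  # toy sizes, chosen for illustration

embedding = nn.Embedding(vocab_size, embedding_dim)
gru = nn.GRU(input_size=embedding_dim, hidden_size=hidden_dim, bidirectional=True)
fc = nn.Linear(hidden_dim * 2, vocab_size)

sents = [torch.LongTensor(s) for s in [[4, 545, 23, 1], [23, 6, 774], [34, 84]]]
x = nn.utils.rnn.pack_sequence([s[:-1] for s in sents])
y = nn.utils.rnn.pack_sequence([s[1:] for s in sents])

embedded = nn.utils.rnn.PackedSequence(embedding(x.data), x.batch_sizes)
out_packed, hidden = gru(embedded)
log_probs = F.log_softmax(fc(out_packed.data), dim=1)

# One row per input token, in the same packed order as y.data
print(out_packed.data.shape)  # torch.Size([6, 32]): forward and backward halves concatenated
print(log_probs.shape)        # torch.Size([6, 1000])

# Assumption: negative log-likelihood against the packed targets
loss = F.nll_loss(log_probs, y.data)
```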

Around 100 000 sequences are used for training the model, and 100 000 are used for testing the model.

The model is evaluated using top-k accuracy/precision@K:

def topk_accuracy(output_distribution, targets, k):
    _, pred = torch.topk(input=output_distribution, k=k, dim=1)
    pred = pred.t()
    correct = pred.eq(targets.expand_as(pred))
    return correct.sum().item() / targets.shape[0]
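A small worked example of this metric, with made-up scores for three predictions over a four-item vocabulary (the function is repeated so the snippet runs on its own):

```python
import torch

def topk_accuracy(output_distribution, targets, k):
    _, pred = torch.topk(input=output_distribution, k=k, dim=1)
    pred = pred.t()                              # shape (k, T)
    correct = pred.eq(targets.expand_as(pred))   # True where a top-k guess hits the target
    return correct.sum().item() / targets.shape[0]

# Illustrative scores: one row per prediction, one column per vocabulary item
scores = torch.tensor([[0.10, 0.70, 0.15, 0.05],
                       [0.60, 0.10, 0.20, 0.10],
                       [0.05, 0.05, 0.10, 0.80]])
targets = torch.tensor([1, 2, 0])

top1 = topk_accuracy(scores, targets, k=1)  # only row 0 is right: 1/3
top2 = topk_accuracy(scores, targets, k=2)  # rows 0 and 1 are right: 2/3
```

In the setup above, `targets` would be the flat `y.data` tensor, so the accuracy is averaged over all T tokens in the batch.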

I have evaluated the model with different variations of the recurrent layer: GRU, LSTM, bidirectional GRU and bidirectional LSTM.

The code for training the model:

https://github.com/aksellk/recsys_telenor/blob/master/prediction/main.py

The model itself:

https://github.com/aksellk/recsys_telenor/blob/master/prediction/model.py

The code for evaluating the model:

https://github.com/aksellk/recsys_telenor/blob/master/prediction/testing.py

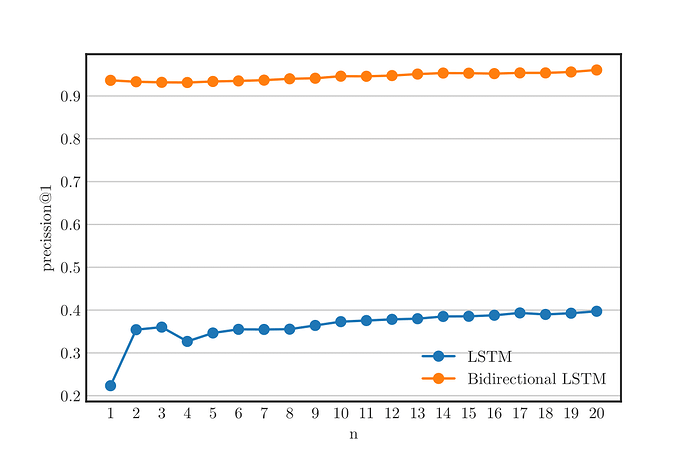

With a bidirectional GRU/LSTM, the top-1 accuracy is around 94%.

With a plain GRU/LSTM, the top-1 accuracy is around 37%.

I suspect something in my experiments is wrong, because the bidirectional model achieves results that look too good compared to the plain versions of GRU/LSTM.
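One sanity check that may help narrow this down: the backward direction of a bidirectional RNN reads the sequence from the end, so its output at position t is computed from the inputs at positions t and later. The sketch below uses toy sizes and a hypothetical helper, `first_step_output`, to change only the later tokens of a sequence and test whether the output at the first position changes. It is a diagnostic under these assumptions, not a statement about what the linked code does.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
embedding = nn.Embedding(10, 4)  # toy vocabulary and embedding size

def first_step_output(rnn, tokens):
    # Input shape (T, 1, E): a single sequence, so no packing is needed here.
    out, _ = rnn(embedding(tokens).unsqueeze(1))
    return out[0, 0]

a = torch.LongTensor([1, 2, 3])
b = torch.LongTensor([1, 7, 8])  # same first token, different later tokens

with torch.no_grad():
    uni = nn.GRU(4, 5)
    bi = nn.GRU(4, 5, bidirectional=True)
    uni_same = torch.allclose(first_step_output(uni, a), first_step_output(uni, b))
    bi_same = torch.allclose(first_step_output(bi, a), first_step_output(bi, b))

print(uni_same)  # expected True: step 0 of a forward-only GRU never sees later tokens
print(bi_same)   # expected False: the backward half at step 0 has read the later tokens
```

If the output at position t depends on the input at position t+1, and that input is also the target for position t, the comparison between the bidirectional and plain models may not be like for like.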

I have also run an experiment where I calculate the average precision@1 at specific positions in the sessions: for example, the average precision@1 for the first item over all sessions, then for the second position, the third, and so on. The idea is to investigate how the model's predictions change over the course of a session.

The code for this experiment is in a jupyter notebook:

https://github.com/aksellk/recsys_telenor/blob/master/prediction/average_precision_for_the_nth_interaction.ipynb
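For readers who do not want to open the notebook, here is a rough sketch of one way to get per-position accuracy from packed outputs. It is not the notebook's code; `accuracy_by_position` is a hypothetical helper and the tensors are made up. It relies on the packed data being time-major, so splitting by `batch_sizes` yields one chunk per position.

```python
import torch

def accuracy_by_position(pred, targets, batch_sizes):
    """Top-1 accuracy per time step for one packed batch (hypothetical helper)."""
    correct = pred.eq(targets).float()
    # batch_sizes[t] is the number of sequences still active at step t
    per_step = torch.split(correct, batch_sizes.tolist())
    return [chunk.mean().item() for chunk in per_step]

# Illustrative top-1 predictions and targets in packed (time-major) order
pred = torch.tensor([545, 6, 84, 23, 0, 1])
targets = torch.tensor([545, 6, 84, 23, 774, 1])
batch_sizes = torch.tensor([3, 2, 1])

acc = accuracy_by_position(pred, targets, batch_sizes)
print(acc)  # [1.0, 0.5, 1.0]
```

To average over the whole test set, the per-position correct counts and totals would need to be accumulated across batches before dividing, since later positions occur in fewer sequences.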

Here is a plot from this experiment showing the top-1 accuracy of the bidirectional LSTM model and the plain LSTM model. The x-axis represents the position of the items in the sequences:

I suspect there is something wrong with the experiment, because the bidirectional model shows a top-1 accuracy above 90% at every position in the sequence, which I believe is too good to be true.

Further, the top-1 accuracy for the first items in each sequence should be affected by the cold-start problem. For the plain LSTM, the average top-1 accuracy for the first item is much lower than for the rest of the items. This is not the case for the bidirectional model, where it looks too high.

So to sum up, I believe there is something wrong in how I pass sequences in minibatches through the bidirectional model, and I am asking for help in understanding how to do this properly. Any ideas as to why the bidirectional variant of the model shows such high top-k accuracy would also be welcome.

This question is related to a previously asked question on the forum:

https://discuss.pytorch.org/t/handling-the-hidden-state-with-minibatches-in-a-rnn-for-language-modelling/44261/4

If something is unclear, please feel free to ask me.

Any help is very much appreciated.

Thanks!