I have a Python script that runs a PyTorch model on a specific device, selected by passing the integer device index to the `.to()` function. I have four GPUs available, so I alternate between 0, 1, 2 and 3. This integer is stored in a variable, `args.device_id`, which I use throughout my code.
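
A minimal sketch of the setup described above, assuming an argparse flag named `--device_id` (only `args.device_id` comes from the post; the flag definition and the placeholder model are hypothetical). Besides moving the model with `.to(device)`, it calls `torch.cuda.set_device` so that anything relying on the *current* CUDA device, such as `.cuda()` with no argument, uses the same GPU:

```python
import argparse

import torch

# Hypothetical CLI flag; only the name args.device_id comes from the post.
parser = argparse.ArgumentParser()
parser.add_argument("--device_id", type=int, default=0, choices=[0, 1, 2, 3])
args = parser.parse_args(["--device_id", "1"])  # fixed argv for illustration

# An explicit device object; constructing it does not touch the GPU.
device = torch.device("cuda", args.device_id)

# Placeholder model, not the author's.
model = torch.nn.Linear(512, 2048)

if torch.cuda.is_available() and args.device_id < torch.cuda.device_count():
    # Make this GPU the "current" CUDA device so that code relying on the
    # current device (e.g. .cuda() with no argument) uses it as well.
    torch.cuda.set_device(device)
    model = model.to(device)
```

The GPU-specific calls are guarded so the sketch also runs on a machine without the requested GPU.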

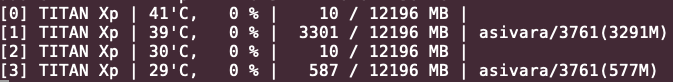

I’m getting this situation where I see some phantom data appearing on a GPU device which I didn’t specify ever. See the picture below (this output is from gpustat which is a nvidia-smi wrapper):

Note that when I force-quit my script, all the GPUs return to zero usage, so no programs from other users are involved.
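
To confirm which process owns the memory on each GPU (rather than inferring it from the force-quit behavior), nvidia-smi can list compute processes per GPU; `gpustat -p` also shows PIDs. A minimal sketch using nvidia-smi's CSV query mode, assuming `nvidia-smi` is on `PATH` (the helper names are hypothetical). It keys results by GPU UUID because, by default, nvidia-smi and gpustat number GPUs in PCI bus order while CUDA orders them fastest-first unless `CUDA_DEVICE_ORDER=PCI_BUS_ID` is set, so index N in one tool is not guaranteed to be `cuda:N` in the other:

```python
import os
import shutil
import subprocess
from collections import defaultdict


def run_nvidia_smi(query):
    """Run one nvidia-smi CSV query (nvidia-smi must be on PATH)."""
    return subprocess.run(
        ["nvidia-smi", query, "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout


def parse_compute_apps(csv_text):
    """Group (pid, used MiB) pairs by GPU UUID.

    Expects "pid, gpu_uuid, used_memory" lines; memory reported as
    "[N/A]" (e.g. without permission) is stored as None.
    """
    usage = defaultdict(list)
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        pid, gpu_uuid, used = (field.strip() for field in line.split(","))
        usage[gpu_uuid].append((int(pid), int(used) if used.isdigit() else None))
    return dict(usage)


def parse_gpu_index(csv_text):
    """Map GPU UUID -> nvidia-smi index from "index, uuid" lines."""
    mapping = {}
    for line in csv_text.strip().splitlines():
        index, gpu_uuid = (field.strip() for field in line.split(","))
        mapping[gpu_uuid] = int(index)
    return mapping


if __name__ == "__main__" and shutil.which("nvidia-smi"):
    index_of = parse_gpu_index(run_nvidia_smi("--query-gpu=index,uuid"))
    apps = parse_compute_apps(
        run_nvidia_smi("--query-compute-apps=pid,gpu_uuid,used_memory"))
    print("this process:", os.getpid())
    for gpu_uuid, procs in apps.items():
        print("nvidia-smi GPU", index_of.get(gpu_uuid, gpu_uuid), procs)
```

Running this from inside the training script (or passing the script's PID alongside) shows whether the 577MiB on the unexpected GPU is attributed to the script's own process.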

I added the following snippet to my main training/test loop, based on an earlier PyTorch Discuss thread about finding all tensors. `gc` here is Python's built-in garbage-collection module.

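```python
import gc

import torch

...

# Print every tensor (or tensor-wrapping object such as a Parameter) that
# Python's garbage collector currently tracks, with its shape and device.
for obj in gc.get_objects():
    try:
        if torch.is_tensor(obj) or (hasattr(obj, 'data') and torch.is_tensor(obj.data)):
            print(type(obj), obj.size(), obj.device)
    except Exception:
        # Some objects raise on attribute access; skip them
        pass

...
```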

Here is the result that I see on the command prompt:

```
2020-04-17 20:56:06 [INFO] Process ID: 3761, Using GPU device 1...
<class 'torch.Tensor'> torch.Size([1000, 63, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 15872]) cuda:1
<class 'torch.Tensor'> torch.Size([51920]) cuda:1
<class 'torch.Tensor'> torch.Size([51920]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 51920]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 51920]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 51920]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 513, 203, 2]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 203, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 513, 203, 2]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 203, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 203, 513, 2]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 203, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1, 203, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([]) cpu
<class 'torch.nn.parameter.Parameter'> torch.Size([2048]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048, 512]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048, 512]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048, 512]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048, 512]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048, 512]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([2048, 513]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([513, 512]) cuda:1
<class 'torch.nn.parameter.Parameter'> torch.Size([513]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 16000]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 16000]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 16000]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 63, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 63, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 63, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 63, 513, 2]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 63, 513]) cuda:1
<class 'torch.Tensor'> torch.Size([1000]) cuda:1
<class 'torch.Tensor'> torch.Size([1000]) cuda:1
<class 'torch.Tensor'> torch.Size([1000, 2]) cuda:1
```

So every tensor in this list (except for one 0-dimensional CPU tensor) is on `cuda:1`. How can I identify what data is sitting on `cuda:3`, then? I'd appreciate any help.
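
The gc snippet lists tensors one by one but does not total them per device. A minimal sketch that reuses the same `gc` scan to sum the bytes held by Python-visible tensors on each device (the function name is hypothetical). Views that share storage are counted once per view, so the totals are an upper bound. If `cuda:3` does not appear here at all while gpustat still reports memory on it, that memory is not held by any tensor the script can reach; a CUDA context, which is created lazily on a device the first time anything touches it, is one possible explanation, though that is an assumption rather than something the output above confirms:

```python
import gc
from collections import defaultdict

import torch


def tensor_bytes_by_device():
    """Sum the sizes of all gc-tracked tensors, grouped by device string.

    Only tensors reachable as Python objects are counted; memory held by the
    CUDA context or cached by PyTorch's allocator does not show up here.
    """
    totals = defaultdict(int)
    for obj in gc.get_objects():
        try:
            if torch.is_tensor(obj):
                t = obj
            elif hasattr(obj, "data") and torch.is_tensor(obj.data):
                t = obj.data
            else:
                continue
            totals[str(t.device)] += t.numel() * t.element_size()
        except Exception:
            # Some objects raise on attribute access; skip them as the original loop does
            continue
    return dict(totals)


for device, nbytes in sorted(tensor_bytes_by_device().items()):
    print(f"{device}: {nbytes / 2**20:.1f} MiB")
```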

Another tidbit: the phantom data always grows to exactly 577MiB and never beyond that. This happens even if I set the usual `os.environ['CUDA_VISIBLE_DEVICES']` variable at the top of the script.
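
`CUDA_VISIBLE_DEVICES` only takes effect if it is set before CUDA is initialized in the process, so the safest place is before `import torch`. A minimal sketch, assuming the index arrives through a hypothetical `--device_id` flag. Two consequences are worth keeping in mind: inside the process the single visible GPU is then addressed as `cuda:0`, not by its physical index, and setting `CUDA_DEVICE_ORDER=PCI_BUS_ID` makes CUDA's numbering follow the PCI bus order that nvidia-smi and gpustat use:

```python
import argparse
import os

# Hypothetical flag; only args.device_id itself comes from the post.
parser = argparse.ArgumentParser()
parser.add_argument("--device_id", type=int, default=0)
args = parser.parse_args(["--device_id", "1"])  # fixed argv for illustration

# Both variables are read when CUDA initializes, so set them before importing torch.
# PCI_BUS_ID makes CUDA number GPUs the same way nvidia-smi and gpustat do.
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
os.environ["CUDA_VISIBLE_DEVICES"] = str(args.device_id)

import torch  # noqa: E402  (deliberately imported after the environment is set)

# With a single visible GPU, the process sees it as cuda:0 regardless of its physical index.
device = torch.device("cuda", 0)

if torch.cuda.is_available():
    print("visible GPUs:", torch.cuda.device_count(), "->", torch.cuda.get_device_name(device))
```

With this setup, any remaining `.to(args.device_id)` calls would need to become `.to(device)`, since the physical index no longer exists inside the process.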