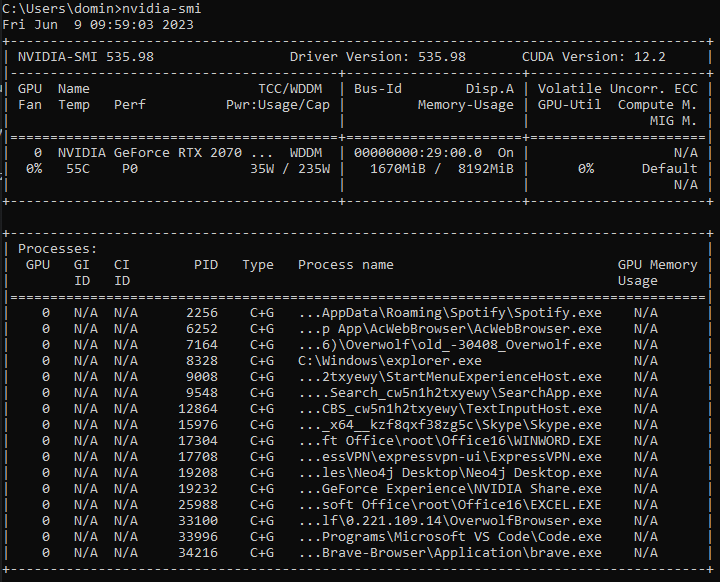

The nightly build for pytorch-cuda 12.1 has been released. I just updated my graphics card to CUDA 12.2; will the 12.1 nightly work?

CUDA 12.2 has not been released yet.

That’s the driver. And yes, it will work.
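Below is a rough sketch of the rule behind this answer. It assumes that the CUDA version reported for the driver (for example, the "CUDA Version" field shown by `nvidia-smi`) is the newest CUDA runtime that driver supports, so a binary built against an equal or older CUDA toolkit should run. The helper names are hypothetical, and the check is deliberately conservative: it ignores finer points such as CUDA minor-version compatibility.

```python
def parse_cuda(version: str) -> tuple[int, int]:
    """Turn a CUDA version string like "12.1" into (12, 1)."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def build_runs_on_driver(build_cuda: str, driver_cuda: str) -> bool:
    """Hypothetical helper: conservative check that a binary built for
    `build_cuda` can run on a driver that reports `driver_cuda`.

    Assumption: the driver supports every CUDA runtime up to the version
    it reports, so the build's CUDA version must not be newer.
    """
    return parse_cuda(build_cuda) <= parse_cuda(driver_cuda)


print(build_runs_on_driver("12.1", "12.2"))  # True: 12.1 nightly on a driver reporting 12.2
print(build_runs_on_driver("12.2", "12.1"))  # False: build is newer than the driver
```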

Sorry about mixing up the driver. I tried to install pytorch-cuda 12.1, but it apparently requires Python 3.1? I know this is probably a stupid question, but is that correct, or am I doing something wrong?

```
(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda install pytorch-cuda=12.1 -c pytorch-nightly -c nvidia
Collecting package metadata (current_repodata.json): done
Solving environment: failed with initial frozen solve. Retrying with flexible solve.
Solving environment: failed with repodata from current_repodata.json, will retry with next repodata source.

ResolvePackageNotFound:
  - python=3.1

(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda list python
# packages in environment at C:\Users\domin\anaconda3\envs\local-gpt:
#
# Name Version Build Channel
llama-cpp-python 0.1.48 pypi_0 pypi
python 3.11.3 h966fe2a_0
python-dateutil 2.8.2 pypi_0 pypi
python-dotenv 1.0.0 pypi_0 pypi
```

No worries, I was just wondering where this CUDA (toolkit) version came from.

No, Python 3.1 is long dead.

I don’t have a Windows system handy to test the install command, but you could try to manually download and install this binary:

win-64/pytorch-2.1.0.dev20230608-py3.11_cuda12.1_cudnn8_0.tar.bz2

Your Python 3.11 environment should be supported (also on Windows) according to this tracking issue.
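The `py3.11` in that file name is the Python version the binary targets (3.11, not 3.1). A small sketch for checking a downloaded package against the active interpreter; the helper names are hypothetical, and the regex assumes the `pytorch-<version>-py<major>.<minor>_...` naming pattern of the file above.

```python
import re
import sys

# File name taken from the post above.
FILENAME = "pytorch-2.1.0.dev20230608-py3.11_cuda12.1_cudnn8_0.tar.bz2"


def python_tag(filename: str) -> tuple[int, int]:
    """Extract the (major, minor) Python version from a py3.11-style tag."""
    match = re.search(r"-py(\d+)\.(\d+)_", filename)
    if match is None:
        raise ValueError(f"no Python tag found in {filename!r}")
    return int(match.group(1)), int(match.group(2))


def matches_interpreter(filename: str) -> bool:
    """True if the package targets the Python version running this script."""
    return python_tag(filename) == sys.version_info[:2]


print(python_tag(FILENAME))           # (3, 11)
print(matches_interpreter(FILENAME))  # True only when run under Python 3.11
```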

I’m trying to install the new version of pytorch in a project that already has a version of torch installed:

```
(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda list torch
# packages in environment at C:\Users\domin\anaconda3\envs\local-gpt:
#
# Name Version Build Channel
torch 2.0.1 pypi_0 pypi
torchvision 0.15.2 pypi_0 pypi
```

However, when I try to remove torch, I get:

```
(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda remove torch
Collecting package metadata (repodata.json): done
Solving environment: failed

PackagesNotFoundError: The following packages are missing from the target environment:
  - torch
```

I tried installing the CUDA 12.1 version without removing the existing install first, but when I ran it, it looked for a CUDA DLL in the existing 2.0.1 install and failed because it couldn't find it. Do you know how I can remove the existing installation, given that `conda remove` doesn't appear to work?

Edit: Never mind, `pip uninstall` worked.

Edit 2: I'm still getting the same error, though, now with the new pytorch-cuda installed:

```
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\__init__.py", line 140, in <module>
    raise err
OSError: [WinError 126] The specified module could not be found. Error loading "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\lib\c10_cuda.dll" or one of its dependencies.

(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda list torch
# packages in environment at C:\Users\domin\anaconda3\envs\local-gpt:
#
# Name Version Build Channel
pytorch 2.1.0.dev20230608 py3.11_cuda12.1_cudnn8_0 <unknown>
torchvision 0.15.2 pypi_0 pypi
```

You would have to use `conda uninstall pytorch` (instead of `torch`).
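In the `conda list` outputs in this thread, packages installed by pip show the build `pypi_0` and the channel `pypi`. Conda does not manage those, which is consistent with `conda remove torch` failing while `pip uninstall` worked. A hypothetical helper that sorts listed packages by installer, assuming conda's usual Name/Version/Build/Channel columns:

```python
def installers(conda_list_output: str) -> dict[str, str]:
    """Map package name -> "pip" or "conda" from `conda list` text.

    Expects only the listing lines (header comments are skipped).
    Assumption: pip-installed packages show the channel "pypi"; anything
    else (including an empty or "<unknown>" channel) was installed by conda.
    """
    result = {}
    for line in conda_list_output.splitlines():
        fields = line.split()
        if not fields or fields[0].startswith("#"):
            continue  # skip blank lines and header comments
        name = fields[0]
        channel = fields[3] if len(fields) > 3 else ""
        result[name] = "pip" if channel == "pypi" else "conda"
    return result


SAMPLE = """\
# Name Version Build Channel
pytorch 2.1.0.dev20230608 py3.11_cuda12.1_cudnn8_0 <unknown>
torchvision 0.15.2 pypi_0 pypi
"""

print(installers(SAMPLE))  # {'pytorch': 'conda', 'torchvision': 'pip'}
```

Packages reported as `pip` would then be removed with `pip uninstall`, and the rest with `conda uninstall`.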

Do you know what could have caused the error in the edit above, or this one?

```
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\__init__.py", line 140, in <module>
    raise err
OSError: [WinError 126] The specified module could not be found. Error loading "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\lib\nvfuser_codegen.dll" or one of its dependencies.
```

The only version of pytorch now installed is the cuda 12.1 version.

No, I don’t know what might be causing this issue. Could you post the install command you were using so that I can search for a Windows machine to reproduce it?

Certainly, the install command I used and the output produced was:

(local-gpt) PS C:\users\domin\downloads> conda install --use-local pytorch-2.1.0.dev20230608-py3.11_cuda12.1_cudnn8_0.tar.bz2

Downloading and Extracting Packages

############################################################################################################################################################################################################################### | 100%

Preparing transaction: done

Verifying transaction: done

Executing transaction: done

Were you never able to install it from conda directly, only from a local binary?

That’s what I tried initially, which gave the “Torch not compiled with CUDA enabled” error.

I ran `conda install pytorch`, which installed this version:

```
(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda list torch
# packages in environment at C:\Users\domin\anaconda3\envs\local-gpt:
#
# Name Version Build Channel
pytorch 2.0.1 py3.11_cpu_0 pytorch
pytorch-mutex 1.0 cpu pytorch
torchvision 0.15.2 pypi_0 pypi
```

Running with this version gave the following stack trace:

```
Traceback (most recent call last):
  File "c:\Users\domin\Documents\Projects\Python\LocalGPT\ingest.py", line 94, in <module>
    main()
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\click\core.py", line 1130, in __call__
    return self.main(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\click\core.py", line 1055, in main
    rv = self.invoke(ctx)
         ^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\click\core.py", line 1404, in invoke
    return ctx.invoke(self.callback, **ctx.params)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\click\core.py", line 760, in invoke
    return __callback(*args, **kwargs)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "c:\Users\domin\Documents\Projects\Python\LocalGPT\ingest.py", line 83, in main
    db = Chroma.from_documents(
         ^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\langchain\vectorstores\chroma.py", line 446, in from_documents
    return cls.from_texts(
           ^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\langchain\vectorstores\chroma.py", line 414, in from_texts
    chroma_collection.add_texts(texts=texts, metadatas=metadatas, ids=ids)
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\langchain\vectorstores\chroma.py", line 159, in add_texts
    embeddings = self._embedding_function.embed_documents(list(texts))
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\langchain\embeddings\huggingface.py", line 158, in embed_documents
    embeddings = self.client.encode(instruction_pairs, **self.encode_kwargs)
                 ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\InstructorEmbedding\instructor.py", line 521, in encode
    self.to(device)
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\nn\modules\module.py", line 1145, in to
    return self._apply(convert)
           ^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\nn\modules\module.py", line 797, in _apply
    module._apply(fn)
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\nn\modules\module.py", line 797, in _apply
    module._apply(fn)
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\nn\modules\module.py", line 797, in _apply
    module._apply(fn)
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\nn\modules\module.py", line 820, in _apply
    param_applied = fn(param)
                    ^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\nn\modules\module.py", line 1143, in convert
    return t.to(device, dtype if t.is_floating_point() or t.is_complex() else None, non_blocking)
           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "C:\Users\domin\anaconda3\envs\local-gpt\Lib\site-packages\torch\cuda\__init__.py", line 239, in _lazy_init
    raise AssertionError("Torch not compiled with CUDA enabled")
AssertionError: Torch not compiled with CUDA enabled
```
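The `py3.11_cpu_0` build string (and `pytorch-mutex ... cpu`) in the `conda list` output above marks a CPU-only package, which matches the assertion at the end of this trace. A minimal diagnostic sketch (the function name is hypothetical) that reports what the installed build was compiled with, using `torch.version.cuda` (which is `None` for CPU-only builds) and `torch.cuda.is_available()`:

```python
def describe_torch_build() -> dict:
    """Report whether the installed torch is a CUDA build and can see a GPU."""
    try:
        import torch
    except (ImportError, OSError) as exc:  # OSError covers Windows DLL load failures
        return {"installed": False, "error": str(exc)}
    return {
        "installed": True,
        "version": str(torch.__version__),
        "built_with_cuda": torch.version.cuda,  # None means a CPU-only build
        "cuda_available": torch.cuda.is_available(),
    }


print(describe_torch_build())
```

A `built_with_cuda` of `None` points at the package itself; a CUDA version there combined with `cuda_available: False` points at the driver or GPU instead.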

I also tried to install a build with CUDA support directly from both Anaconda and pip, but neither worked. I found the build by running `conda search pytorch`:

```
(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda install pytorch=2.0.1=py3.11_cuda11.8_cudnn8_0
Collecting package metadata (current_repodata.json): done
Solving environment: failed with initial frozen solve. Retrying with flexible solve.
Solving environment: failed with repodata from current_repodata.json, will retry with next repodata source.

ResolvePackageNotFound:
  - python=3.1

(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> pip install pytorch==2.0.1+py3.11_cuda11.8_cudnn8_0
ERROR: Could not find a version that satisfies the requirement pytorch==2.0.1+py3.11_cuda11.8_cudnn8_0 (from versions: 0.1.2, 1.0.2)
ERROR: No matching distribution found for pytorch==2.0.1+py3.11_cuda11.8_cudnn8_0

(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> pip install torch==2.0.1+py3.11_cuda11.8_cudnn8_0
ERROR: Could not find a version that satisfies the requirement torch==2.0.1+py3.11_cuda11.8_cudnn8_0 (from versions: 2.0.0, 2.0.1)
ERROR: No matching distribution found for torch==2.0.1+py3.11_cuda11.8_cudnn8_0
```

I have the same problem. I've been trying to get it to work for several hours now.

```
OSError: [WinError 127] The specified procedure could not be found. Error loading "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\torch\lib\nvfuser_codegen.dll" or one of its dependencies.
```

- `nvfuser_codegen` exists in the proper folder.
- I tried installing with both conda and pip3. I couldn't get `torch.cuda.is_available()` to work with conda, but it worked with pip3. But then the other error pops up. I've tried different variations and have to stop for now.
- My CUDA version is 12.1.

List:

```
conda list torch
# packages in environment at C:\Users\S\anaconda3\envs\machine_learning:
#
# Name Version Build Channel
pytorch-cuda 12.1 hde6ce7c_5 pytorch-nightly
torch 2.1.0.dev20230610+cu121 pypi_0 pypi
torchaudio 2.1.0.dev20230610+cu121 pypi_0 pypi
torchvision 0.16.0.dev20230611+cu121 pypi_0 pypi
```

Trace:

```
python -m spacy init fill-config base_config.cfg config.cfg
Traceback (most recent call last):
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\runpy.py", line 187, in _run_module_as_main
    mod_name, mod_spec, code = _get_module_details(mod_name, _Error)
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\runpy.py", line 146, in _get_module_details
    return _get_module_details(pkg_main_name, error)
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\runpy.py", line 110, in _get_module_details
    __import__(pkg_name)
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\spacy\__init__.py", line 6, in <module>
    from .errors import setup_default_warnings
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\spacy\errors.py", line 2, in <module>
    from .compat import Literal
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\spacy\compat.py", line 3, in <module>
    from thinc.util import copy_array
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\thinc\__init__.py", line 5, in <module>
    from .config import registry
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\thinc\config.py", line 4, in <module>
    from .types import Decorator
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\thinc\types.py", line 8, in <module>
    from .compat import has_cupy, cupy
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\thinc\compat.py", line 30, in <module>
    import torch.utils.dlpack
  File "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\torch\__init__.py", line 129, in <module>
    raise err
OSError: [WinError 127] The specified procedure could not be found. Error loading "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages\torch\lib\nvfuser_codegen.dll" or one of its dependencies.
```
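The two error codes in this thread mean different things at the Windows level: WinError 126 (seen earlier with `c10_cuda.dll`) is "module not found", while WinError 127 is "procedure not found", which fits the report that `nvfuser_codegen` exists but still fails to load. A small, hypothetical helper that pulls the code and DLL name out of such a message; the cause descriptions are the general Windows meanings of these codes, not a diagnosis of this particular install.

```python
import re

# General meanings of the two Windows system error codes seen in this thread.
WIN_ERRORS = {
    126: "ERROR_MOD_NOT_FOUND: the DLL or one of its dependencies could not be located",
    127: ("ERROR_PROC_NOT_FOUND: a DLL was found but lacks an expected function, "
          "often a sign of mismatched DLL versions"),
}


def explain_dll_error(message: str) -> str:
    """Extract the WinError code and failing DLL name from an OSError message."""
    code_match = re.search(r"\[WinError (\d+)\]", message)
    if code_match is None:
        return "not a Windows DLL load error"
    code = int(code_match.group(1))
    dll_match = re.search(r'Error loading "([^"]+)"', message)
    # Keep only the file name from the Windows path.
    dll = dll_match.group(1).split("\\")[-1] if dll_match else "unknown DLL"
    description = WIN_ERRORS.get(code, f"WinError {code}")
    return f"{dll}: {description}"


MSG = (r'OSError: [WinError 127] The specified procedure could not be found. '
       r'Error loading "C:\Users\S\anaconda3\envs\machine_learning\lib\site-packages'
       r'\torch\lib\nvfuser_codegen.dll" or one of its dependencies.')

print(explain_dll_error(MSG))
```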

Glad to see it's not just me. It seems I can't install pytorch-cuda at all: either I get the same Python 3.1 error from conda, or pip can't find it:

```
(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda install pytorch-cuda=12.1 --channel pytorch-nightly
Collecting package metadata (current_repodata.json): done
Solving environment: failed with initial frozen solve. Retrying with flexible solve.
Solving environment: failed with repodata from current_repodata.json, will retry with next repodata source.

ResolvePackageNotFound:
  - python=3.1

(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> pip3 install pytorch-cuda==12.1
ERROR: Could not find a version that satisfies the requirement pytorch-cuda==12.1 (from versions: none)
ERROR: No matching distribution found for pytorch-cuda==12.1
```

Edit: This is despite the fact that conda knows it exists:

```
(local-gpt) PS C:\Users\domin\Documents\Projects\Python\LocalGPT> conda search pytorch-cuda --channel pytorch-nightly
Loading channels: done
# Name Version Build Channel
pytorch-cuda 11.6 h867d48c_0 pytorch
pytorch-cuda 11.6 h867d48c_1 pytorch
pytorch-cuda 11.6 h867d48c_2 pytorch-nightly
pytorch-cuda 11.6 h99f446c_3 pytorch-nightly
pytorch-cuda 11.7 h16d0643_5 pytorch-nightly
pytorch-cuda 11.7 h16d0643_5 pytorch
pytorch-cuda 11.7 h67b0de4_0 pytorch
pytorch-cuda 11.7 h67b0de4_1 pytorch
pytorch-cuda 11.7 h67b0de4_2 pytorch
pytorch-cuda 11.8 h24eeafa_3 pytorch
pytorch-cuda 11.8 h24eeafa_5 pytorch-nightly
pytorch-cuda 11.8 h24eeafa_5 pytorch
pytorch-cuda 11.8 h8dd9ede_2 pytorch
pytorch-cuda 12.1 hde6ce7c_5 pytorch-nightly
```

Hello,

I am using a Dell laptop with integrated Intel UHD Graphics 620 (Whiskey Lake), and I can't install PyTorch with CUDA enabled. The NVIDIA website shows that only NVIDIA GPUs such as Tesla, Jetson, or GeForce are supported by the NVIDIA driver with CUDA enabled. Does that mean I can't install PyTorch with CUDA enabled?

This is correct, as CUDA does not support Intel's integrated GPUs.
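Since CUDA is unavailable on such a machine, PyTorch can still run on the CPU. A common pattern, sketched here with a hypothetical `pick_device` helper, is to choose the device at runtime so the same script works with or without an NVIDIA GPU:

```python
def pick_device() -> str:
    """Return "cuda" when a CUDA-enabled torch build sees a GPU, else "cpu".

    On a machine with only an Intel integrated GPU this falls back to "cpu".
    """
    try:
        import torch
    except (ImportError, OSError):
        return "cpu"  # torch missing, or its DLLs failed to load
    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(f"Using device: {device}")
```

The returned string can be passed to `model.to(device)` or `tensor.to(device)`.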

CC @atalman in case you have seen some Windows issues on conda recently.

HAHAHAHA… With a little help from this thread, I fixed it: AssertionError: Torch not compiled with CUDA enabled · Issue #30664 · pytorch/pytorch · GitHub

Steps:

Run:

```
pip uninstall torch torchvision
pip cache purge
pip install torch==2.0.1+cu118 torchvision -f https://download.pytorch.org/whl/torch_stable.html
```

I was then able to run ingest.py without issue!

Edit: This worked even though my installed CUDA version is 12.1.

Absolute magic, this worked like a charm. Thanks!

Hello.

Please use the install instructions from Start Locally | PyTorch.

If you want to install the stable version (CUDA 11.7 or 11.8) on Windows, something like this should work:

conda:

```
conda install pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia
```

pip:

```
pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu117
```

Windows nightly install (CUDA 11.8 or 12.1):

conda:

```
conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch-nightly -c nvidia
```

pip:

```
pip3 install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu121
```
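The pip commands above follow a visible pattern: the CUDA version becomes a `cuNNN` tag in the index URL, and nightlies add `--pre` and a `/nightly/` path segment. A small sketch that reproduces those two commands; the helper names are hypothetical, and they do not check that a wheel index actually exists for the CUDA version you pass.

```python
def pip_index_url(cuda: str, nightly: bool = False) -> str:
    """Build the download.pytorch.org index URL for a CUDA version,
    e.g. "11.7" -> .../whl/cu117, or "12.1" (nightly) -> .../whl/nightly/cu121."""
    tag = "cu" + cuda.replace(".", "")
    base = "https://download.pytorch.org/whl"
    return f"{base}/nightly/{tag}" if nightly else f"{base}/{tag}"


def pip_install_command(cuda: str, nightly: bool = False) -> str:
    """Assemble a pip3 command in the same shape as the ones above."""
    pre = "--pre " if nightly else ""
    return (f"pip3 install {pre}torch torchvision torchaudio "
            f"--index-url {pip_index_url(cuda, nightly)}")


print(pip_install_command("11.7"))
print(pip_install_command("12.1", nightly=True))
```

The same `cuNNN` tag is the suffix in pip version strings such as `torch==2.0.1+cu118`. A conda build string like `py3.11_cuda11.8_cudnn8_0` is not a pip version, which is why the earlier `pip install torch==2.0.1+py3.11_cuda11.8_cudnn8_0` found no match.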

Please note that nightly builds are less stable than release versions, so you may experience issues when installing them. If you do see issues during a nightly installation, please check PyTorch CI HUD to see our nightly build status: green means success, red means failure.

Also, if you are using a valid install command from the Get Started page above but still receive an error, please go ahead and create an issue under pytorch/pytorch.